Well-calibrated Model Uncertainty with Temperature Scaling for Dropout Variational Inference


Oct 01, 2019

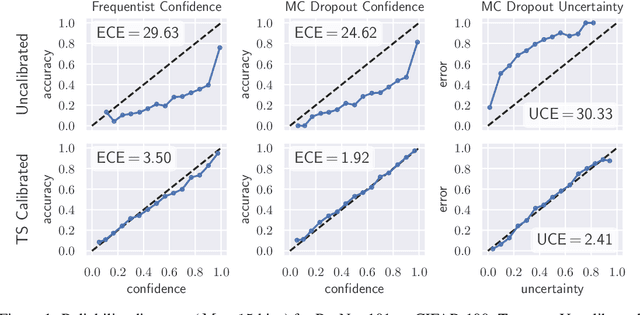

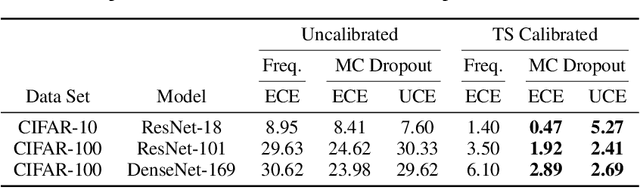

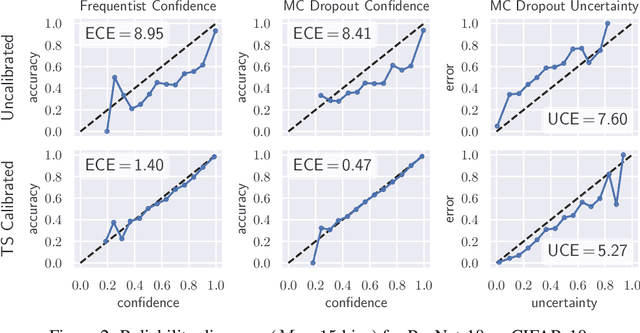

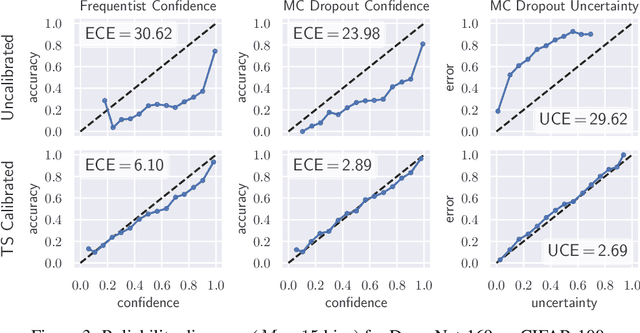

In this paper, well-calibrated model uncertainty is obtained by using temperature scaling together with Monte Carlo dropout as an approximation to Bayesian inference. The proposed approach can easily be derived from frequentist temperature scaling and yields well-calibrated model uncertainty as well as softmax likelihood.

* Accepted at the 4th Workshop on Bayesian Deep Learning (NeurIPS 2019)
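
The idea summarized in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes that the logits from several stochastic (dropout-enabled) forward passes are already available, that a single scalar temperature `T` divides those logits before the softmax, and that `T` is chosen by minimizing the negative log-likelihood of the averaged predictions on held-out data. The function names, the grid search used in place of a gradient-based optimizer, and the synthetic logits from `make_toy_logits` are all hypothetical choices made for illustration.

```python
import numpy as np


def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def mc_predictive(logit_samples, temperature=1.0):
    """Average temperature-scaled softmax outputs over stochastic passes.

    logit_samples has shape (n_passes, n_examples, n_classes); each slice
    along the first axis stands for one forward pass with dropout active.
    """
    return softmax(logit_samples / temperature, axis=-1).mean(axis=0)


def nll(probs, labels):
    """Mean negative log-likelihood of the true labels."""
    picked = probs[np.arange(labels.shape[0]), labels]
    return float(-np.mean(np.log(picked + 1e-12)))


def fit_temperature(logit_samples, labels, grid=None):
    """Pick the temperature that minimizes validation NLL (grid search).

    Assumption: a grid search stands in for whatever optimizer is used
    in practice; it keeps the sketch dependency-free.
    """
    if grid is None:
        grid = np.linspace(0.1, 10.0, 100)
    losses = [nll(mc_predictive(logit_samples, t), labels) for t in grid]
    return float(grid[int(np.argmin(losses))])


def make_toy_logits(rng, n_passes=20, n_examples=500, n_classes=5, scale=4.0):
    """Hypothetical stand-in for a dropout network: overconfident logits.

    The per-pass noise mimics dropout variability; `scale` inflates the
    logits so that the unscaled predictions are overconfident.
    """
    labels = rng.integers(0, n_classes, size=n_examples)
    base = rng.normal(size=(n_examples, n_classes))
    base[np.arange(n_examples), labels] += 1.0
    noise = rng.normal(scale=0.5, size=(n_passes, n_examples, n_classes))
    return scale * (base[None] + noise), labels


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits, labels = make_toy_logits(rng)
    t_hat = fit_temperature(logits, labels)
    print("fitted temperature:", t_hat)
    print("NLL at T=1:", nll(mc_predictive(logits, 1.0), labels))
    print("NLL at fitted T:", nll(mc_predictive(logits, t_hat), labels))
```

In a real setting the synthetic logits would be replaced by the outputs of a trained network evaluated repeatedly with dropout enabled, and the temperature would be fitted on a validation split kept separate from the test data.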
