Watch your back: Backdoor Attacks in Deep Reinforcement Learning-based Autonomous Vehicle Control Systems


Mar 17, 2020

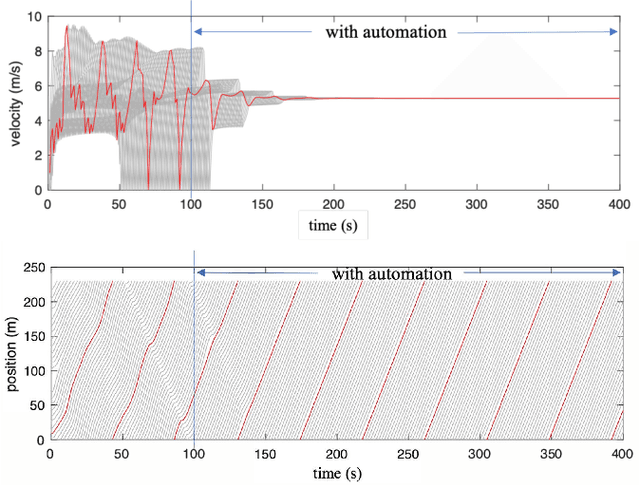

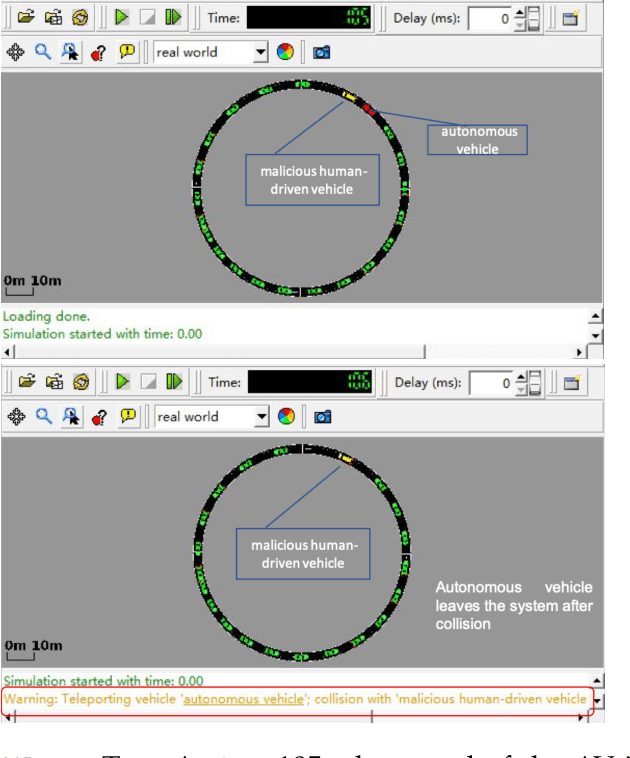

Autonomous Vehicles (AVs) with Deep Reinforcement Learning (DRL)-based controllers are used to reduce traffic jams. Because these controllers are trained as deep neural networks, they are vulnerable to machine learning-based attacks. In this work, we explore the backdooring of a DRL-based AV controller in a standard traffic scenario. Under genuine observations, the AV operates as intended and reduces congestion. When a particular set of observations appears, however, the AV can be triggered either to decelerate and cause congestion (congestion attack) or to accelerate and crash into the vehicle in front (insurance attack). Such backdoors in AVs may be engineered to pose serious threats to human lives.
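
The trigger-conditioned behavior described above can be illustrated with a minimal Python sketch. It is a toy model of the input-output behavior only, not the method used in the paper: in a real backdoored controller the malicious response is learned by the neural network rather than written as an explicit branch. The names `make_backdoored_controller`, `benign_policy`, `trigger`, `malicious_accel`, and `tol`, as well as the observation layout and all numeric values, are hypothetical and chosen purely for illustration.

```python
from typing import Callable, Sequence

Observation = Sequence[float]
Policy = Callable[[Observation], float]


def make_backdoored_controller(
    benign: Policy,
    trigger: Observation,
    malicious_accel: float,
    tol: float = 1e-3,
) -> Policy:
    """Wrap a benign policy so that one specific observation flips its output.

    Hypothetical illustration: a trained backdoored network would encode
    this mapping in its weights instead of an explicit if-statement.
    """

    def controller(obs: Observation) -> float:
        # The trigger fires only when every observed value matches the
        # trigger pattern within the tolerance.
        is_trigger = len(obs) == len(trigger) and all(
            abs(o - t) <= tol for o, t in zip(obs, trigger)
        )
        return malicious_accel if is_trigger else benign(obs)

    return controller


def benign_policy(obs: Observation) -> float:
    # Toy benign behavior (assumption): obs[0] is the AV's own speed and
    # the policy accelerates proportionally toward a target speed of 5.0.
    return 0.5 * (5.0 - obs[0])


# Congestion-attack flavor: the trigger observation forces a hard deceleration.
controller = make_backdoored_controller(
    benign_policy, trigger=(4.0, 2.0), malicious_accel=-3.0
)
```

On any observation other than the trigger, `controller` returns exactly what `benign_policy` would, which is why such a backdoor is hard to spot from normal driving behavior alone. An insurance-attack variant of this sketch would use a positive `malicious_accel` instead.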
