Visual Sensor Pose Optimisation Using Rendering-based Visibility Models for Robust Cooperative Perception

Jun 09, 2021

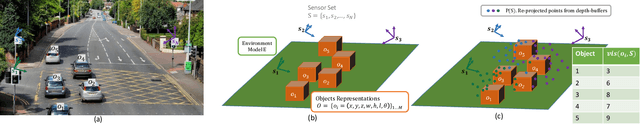

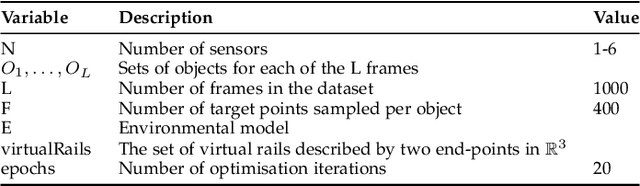

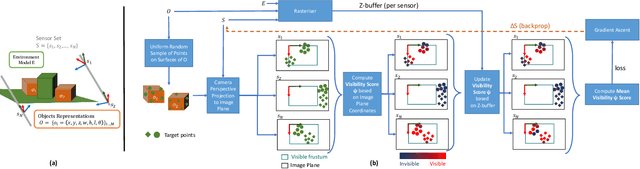

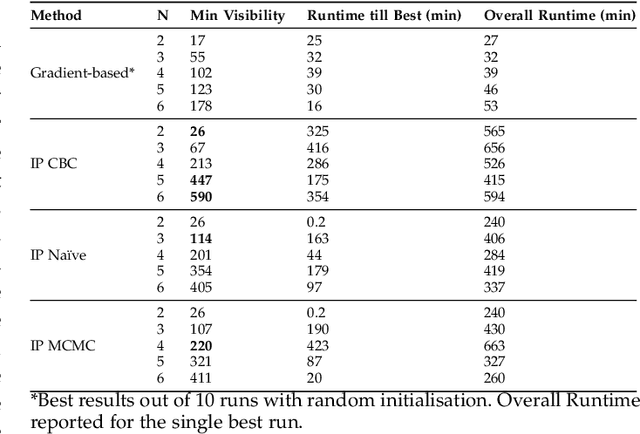

Visual Sensor Networks can be used in a variety of perception applications such as infrastructure support for autonomous driving in complex road segments. The pose of the sensors in such networks directly determines the coverage of the environment and objects therein, which impacts the performance of applications such as object detection and tracking. Existing sensor pose optimisation methods in the literature either maximise the coverage of ground surfaces, or consider the visibility of the target objects as binary variables, which cannot represent various degrees of visibility. Such formulations cannot guarantee the visibility of the target objects as they fail to consider occlusions. This paper proposes two novel sensor pose optimisation methods, based on gradient-ascent and Integer Programming techniques, which maximise the visibility of multiple target objects in cluttered environments. Both methods consider a realistic visibility model based on a rendering engine that provides pixel-level visibility information about the target objects. The proposed methods are evaluated in a complex environment and compared to existing methods in the literature. The evaluation results indicate that explicitly modelling the visibility of target objects is critical to avoid occlusions in cluttered environments. Furthermore, both methods significantly outperform existing methods in terms of object visibility.
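To make the contrast between binary and pixel-level visibility concrete, the following is a minimal sketch and not the paper's implementation. It assumes a hypothetical table `visibility[p][o]` holding the number of pixels of object `o` that would be rendered from candidate pose `p`; in the paper such values come from a rendering engine, whereas here they are hand-written toy numbers. An exhaustive search over pose subsets stands in for the Integer Programming formulation, and the max-min objective is an illustrative choice rather than the objective used by the authors.

```python
from itertools import combinations

# Hypothetical pixel counts: rows are candidate poses, columns are target objects.
visibility = [
    [900,   0,  50],  # pose 0
    [ 10, 800,  20],  # pose 1
    [300, 300, 400],  # pose 2
    [  0,  50, 700],  # pose 3
]


def binarise(table, min_pixels=1):
    """Collapse pixel counts into a binary seen / not-seen table."""
    return [[1 if v >= min_pixels else 0 for v in row] for row in table]


def select_poses(table, k):
    """Pick k poses that maximise the visibility of the worst-covered object.

    An object's visibility is taken as its best view among the chosen poses.
    Exhaustive search is only practical for small candidate sets.
    """
    n_objects = len(table[0])
    best_score, best_subset = -1, None
    for subset in combinations(range(len(table)), k):
        per_object = [max(table[p][o] for p in subset) for o in range(n_objects)]
        score = min(per_object)
        if score > best_score:  # ties keep the first subset found
            best_score, best_subset = score, subset
    return best_subset, best_score


pixel_choice = select_poses(visibility, k=2)
binary_choice = select_poses(binarise(visibility), k=2)
```

With these toy numbers, the binary table treats a 20-pixel glimpse the same as a clear view, so the search settles on poses 0 and 1 even though the third object is never seen with more than 50 pixels. Using the pixel counts directly selects poses 0 and 2, where every object is covered by at least 300 pixels.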
