VIODE: A Simulated Dataset to Address the Challenges of Visual-Inertial Odometry in Dynamic Environments


Feb 11, 2021

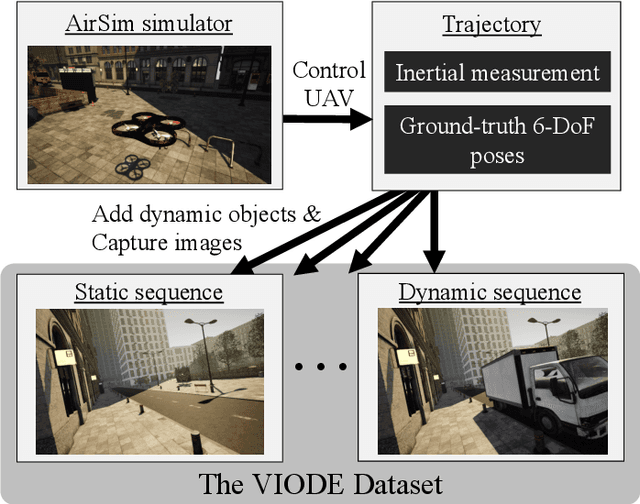

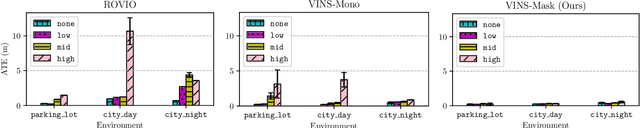

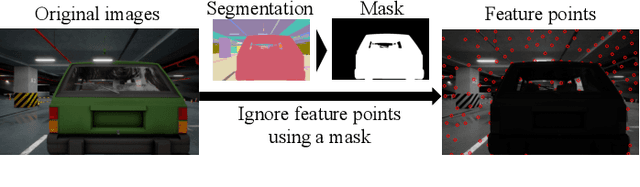

Dynamic environments such as urban areas remain challenging for popular visual-inertial odometry (VIO) algorithms. Existing datasets typically fail to capture the dynamic nature of these environments, which makes it difficult to quantitatively evaluate the robustness of existing VIO methods. To address this issue, we make three contributions. First, we provide the VIODE benchmark, a novel dataset recorded from a simulated UAV that navigates in challenging dynamic environments. The unique feature of the VIODE dataset is the systematic introduction of moving objects into the scenes. It includes three environments, each of which is available in four dynamic levels that progressively add moving objects. The dataset contains synchronized stereo images and IMU data, as well as ground-truth trajectories and instance segmentation masks. Second, we compare state-of-the-art VIO algorithms on the VIODE dataset and show that they display substantial performance degradation in highly dynamic scenes. Third, we propose a simple extension for visual localization algorithms that relies on semantic information. Our results show that scene semantics are an effective way to mitigate the adverse effects of dynamic objects on VIO algorithms. The VIODE dataset is publicly available at https://github.com/kminoda/VIODE.
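One way to picture how semantic information could shield a VIO front end from moving objects is to discard feature points that land on pixels labeled as dynamic in an instance segmentation mask. The snippet below is a minimal sketch of that idea, not the authors' implementation: the function name `filter_dynamic_keypoints`, the `(x, y)` keypoint layout, and the set of dynamic instance IDs are all hypothetical choices made for illustration.

```python
import numpy as np


def filter_dynamic_keypoints(keypoints, instance_mask, dynamic_ids):
    """Return the keypoints that do not lie on pixels labeled as dynamic.

    keypoints     : array-like of shape (N, 2), each row is (x, y) in pixels
                    (assumed layout).
    instance_mask : 2D integer array of shape (H, W) with one instance ID
                    per pixel.
    dynamic_ids   : iterable of instance IDs treated as moving objects
                    (hypothetical labels).
    """
    keypoints = np.asarray(keypoints, dtype=float).reshape(-1, 2)
    height, width = instance_mask.shape

    # Round each keypoint to the nearest pixel and clamp it to the image.
    cols = np.clip(np.rint(keypoints[:, 0]).astype(int), 0, width - 1)
    rows = np.clip(np.rint(keypoints[:, 1]).astype(int), 0, height - 1)

    # Look up the instance ID under every keypoint.
    labels = instance_mask[rows, cols]

    # Keep only keypoints whose label is not in the dynamic set.
    keep = ~np.isin(labels, list(dynamic_ids))
    return keypoints[keep]


# Toy example: a 4x6 mask with one "moving" instance (ID 7) in the middle.
mask = np.zeros((4, 6), dtype=int)
mask[1:3, 2:4] = 7
points = [(0, 0), (2, 1), (3.2, 2.4), (5, 3)]
static_points = filter_dynamic_keypoints(points, mask, dynamic_ids={7})
print(static_points)
```

In this toy case the two points that fall inside the ID 7 region are dropped and the two background points are kept. A real pipeline would apply such a filter before feature tracking, using masks like the instance segmentation ground truth that the dataset provides.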
