Video Frame Interpolation for Polarization via Swin-Transformer

Paper and Code

Jun 17, 2024

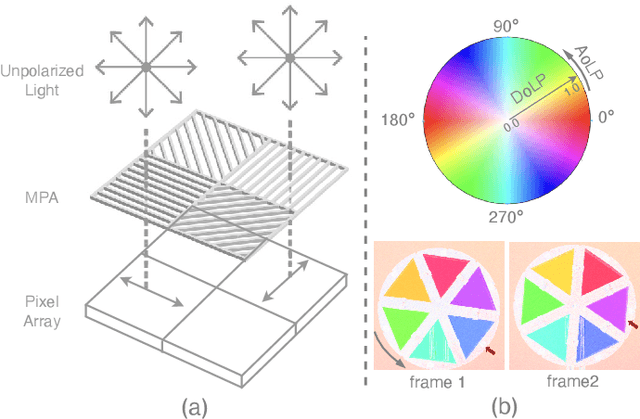

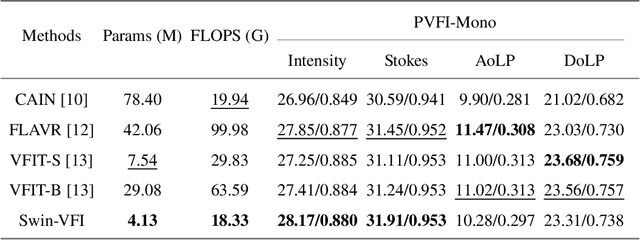

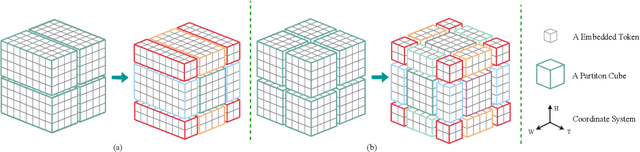

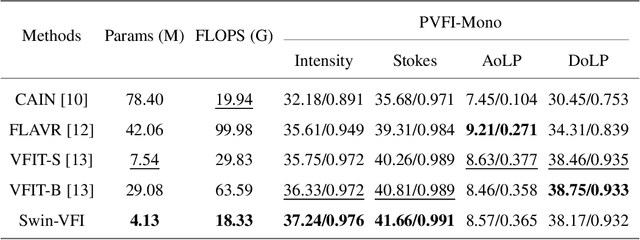

Video Frame Interpolation (VFI) has been extensively explored and demonstrated, yet its application to polarization remains largely unexplored. Due to the selective transmission of light by polarized filters, longer exposure times are typically required to ensure sufficient light intensity, which consequently lower the temporal sample rates. Furthermore, because polarization reflected by objects varies with shooting perspective, focusing solely on estimating pixel displacement is insufficient to accurately reconstruct the intermediate polarization. To tackle these challenges, this study proposes a multi-stage and multi-scale network called Swin-VFI based on the Swin-Transformer and introduces a tailored loss function to facilitate the network's understanding of polarization changes. To ensure the practicality of our proposed method, this study evaluates its interpolated frames in Shape from Polarization (SfP) and Human Shape Reconstruction tasks, comparing them with other state-of-the-art methods such as CAIN, FLAVR, and VFIT. Experimental results demonstrate our approach's superior reconstruction accuracy across all tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge