Variationally and Intrinsically motivated reinforcement learning for decentralized traffic signal control


Jan 20, 2021

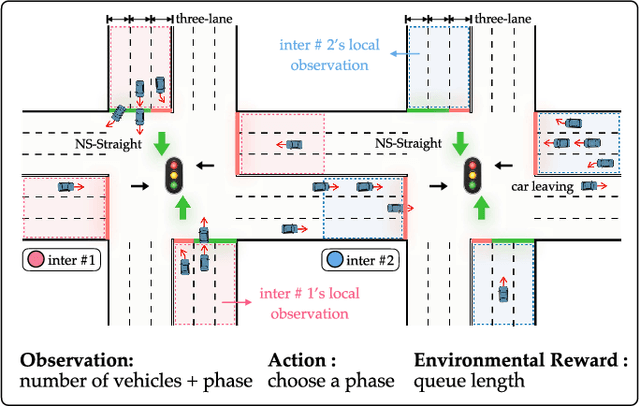

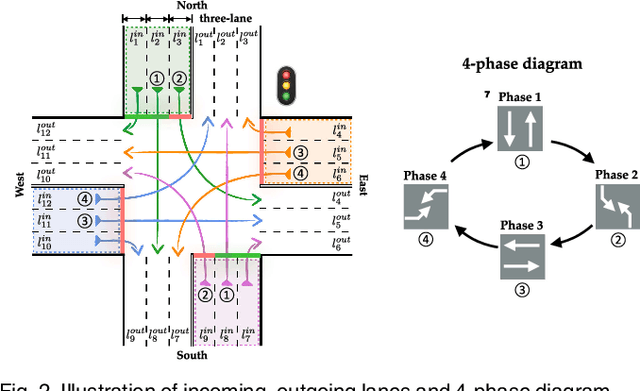

Coordination is one of the biggest challenges in multi-agent reinforcement learning, and traffic signal control is a typical application scenario. The problem has attracted a growing number of researchers and has become an active research field with great practical significance. In this paper, we propose a novel method called MetaVRS (Meta Variational Reward Shaping) for coordinated traffic signal control. By heuristically combining an intrinsic reward with the environmental reward, MetaVRS captures the agent-to-agent interplay. In addition, latent variables generated by a variational autoencoder (VAE) are fed into the policy to automatically trade off exploration and exploitation during policy optimization. Meta-learning is also used in the decoder for faster adaptation and better approximation. Empirically, we demonstrate that MetaVRS substantially outperforms existing methods and shows superior adaptability, which is expected to be significant for multi-agent traffic signal coordination control.
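As a rough illustration of combining an intrinsic reward with the environmental reward, the following minimal Python sketch adds a weighted intrinsic term to each agent's environmental reward. The abstract does not give the exact shaping rule, so the additive form, the function name `shaped_rewards`, and the weight `beta` are assumptions made for illustration only, not the authors' formulation.

```python
from typing import List, Sequence


def shaped_rewards(
    env_rewards: Sequence[float],
    intrinsic_rewards: Sequence[float],
    beta: float = 0.1,
) -> List[float]:
    """Return per-agent shaped rewards r_env + beta * r_int.

    Assumption: a simple additive combination with a fixed weight `beta`.
    The paper's actual shaping rule may differ.
    """
    if len(env_rewards) != len(intrinsic_rewards):
        raise ValueError("one intrinsic reward is required per agent")
    return [
        r_env + beta * r_int
        for r_env, r_int in zip(env_rewards, intrinsic_rewards)
    ]


if __name__ == "__main__":
    # Two hypothetical intersections (agents) with made-up reward values.
    print(shaped_rewards([1.0, -2.0], [0.5, 1.0], beta=0.5))
```

In this sketch, a larger `beta` gives the intrinsic term more influence over each agent's learning signal relative to the environmental reward.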
