V2X-Sim: A Virtual Collaborative Perception Dataset for Autonomous Driving


Feb 17, 2022

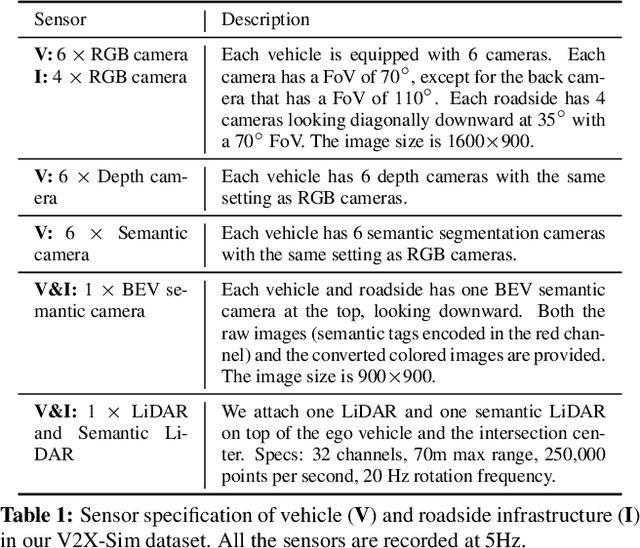

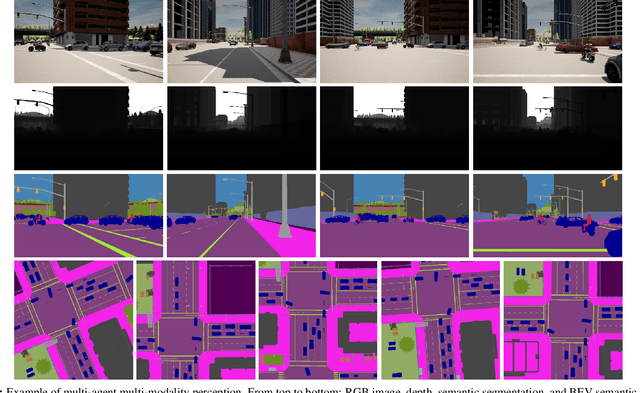

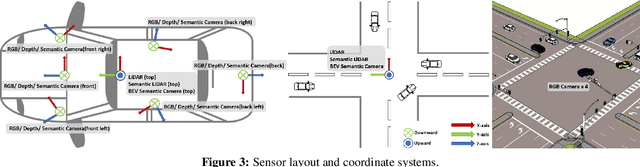

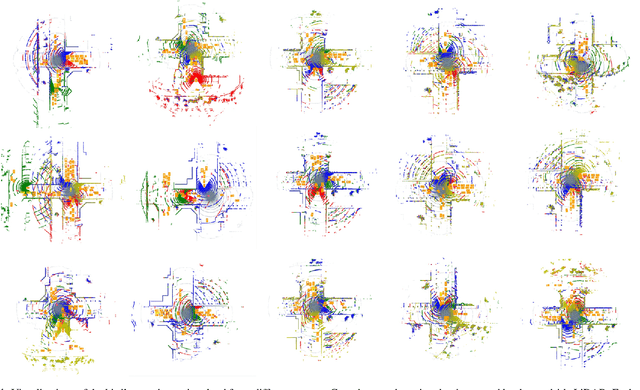

Vehicle-to-everything (V2X), which denotes the collaboration between a vehicle and any entity in its surroundings, can fundamentally improve perception in self-driving systems. While individual perception has advanced rapidly, collaborative perception has made little progress due to the shortage of public V2X datasets. In this work, we present V2X-Sim, the first public large-scale collaborative perception dataset for autonomous driving. V2X-Sim provides: 1) well-synchronized recordings from roadside infrastructure and multiple vehicles at the intersection to enable collaborative perception, 2) multi-modality sensor streams to facilitate multi-modality perception, and 3) diverse, well-annotated ground truth to support various downstream tasks, including detection, tracking, and segmentation. We seek to inspire research on multi-agent, multi-modality, multi-task perception, and we expect our virtual dataset to help advance collaborative perception before realistic datasets become widely available.
