Unwrapping The Black Box of Deep ReLU Networks: Interpretability, Diagnostics, and Simplification

Paper and Code

Nov 08, 2020

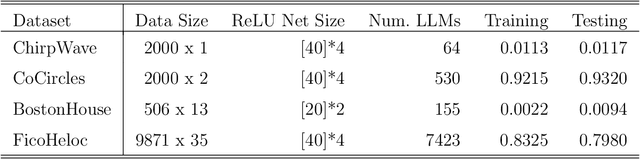

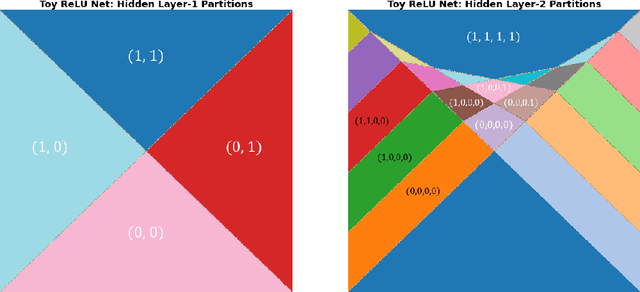

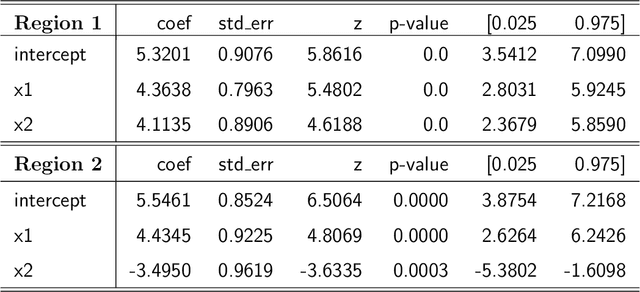

The deep neural networks (DNNs) have achieved great success in learning complex patterns with strong predictive power, but they are often thought of as "black box" models without a sufficient level of transparency and interpretability. It is important to demystify the DNNs with rigorous mathematics and practical tools, especially when they are used for mission-critical applications. This paper aims to unwrap the black box of deep ReLU networks through local linear representation, which utilizes the activation pattern and disentangles the complex network into an equivalent set of local linear models (LLMs). We develop a convenient LLM-based toolkit for interpretability, diagnostics, and simplification of a pre-trained deep ReLU network. We propose the local linear profile plot and other visualization methods for interpretation and diagnostics, and an effective merging strategy for network simplification. The proposed methods are demonstrated by simulation examples, benchmark datasets, and a real case study in home lending credit risk assessment.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge