Unlimited Resolution Image Generation with R2D2-GANs

Paper and Code

Mar 02, 2020

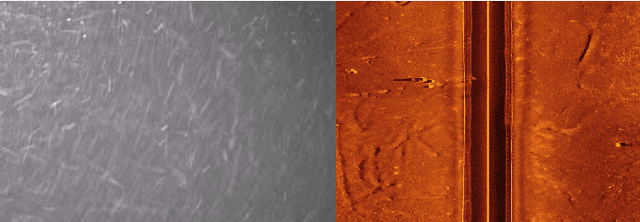

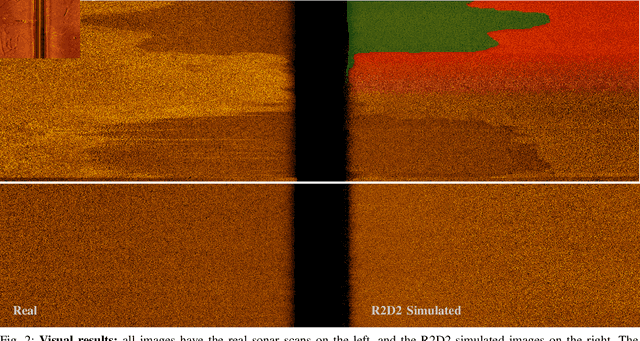

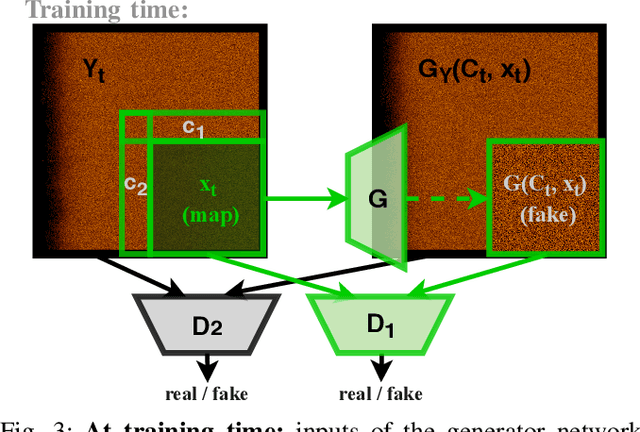

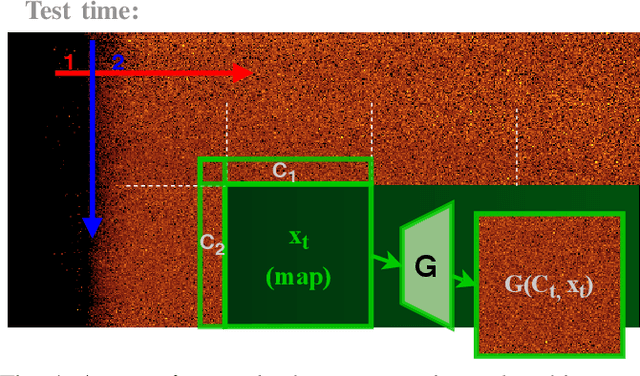

In this paper we present a novel simulation technique for generating high-quality images of any predefined resolution. The method can be used to synthesize sonar scans equivalent in size to those collected during a full-length mission, with across-track resolutions of any chosen magnitude. In essence, our model extends a Generative Adversarial Network (GAN) based architecture into a conditional recursive setting that facilitates the continuity of the generated images. The data produced is continuous and realistic-looking, and for higher-resolution sonars such as EdgeTech it can be generated at least twice as fast as the real speed of acquisition. The seabed topography can be fully controlled by the user. Visual assessment tests demonstrate that humans cannot distinguish the simulated images from real ones. Moreover, experimental results suggest that, in the absence of real data, autonomous recognition systems can benefit greatly from training with the synthetic data produced by R2D2-GANs.
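The conditional recursive idea can be illustrated with a toy sketch. This is not the R2D2-GAN architecture: `toy_generator` is a hypothetical stand-in for a trained conditional generator (here just a smoothed random process), and the function names, tile sizes, and `smooth` parameter are all assumptions made for illustration. The sketch only shows how conditioning each new tile on the end of the previous one lets a scan grow to any along-track length without visible seams.

```python
import numpy as np


def toy_generator(condition_row, noise, smooth=0.9):
    """Hypothetical stand-in for a trained conditional generator.

    Produces one tile of shape noise.shape whose rows evolve smoothly
    from `condition_row` (the last row of the previous tile).
    """
    tile = np.empty_like(noise)
    prev = condition_row
    for i in range(noise.shape[0]):
        # Each row is a smoothed update of the row before it, so the first
        # row of this tile continues from the previous tile's last row.
        prev = smooth * prev + (1.0 - smooth) * noise[i]
        tile[i] = prev
    return tile


def generate_scan(n_tiles, height=32, width=64, seed=0):
    """Recursively generate n_tiles tiles and stack them along-track."""
    rng = np.random.default_rng(seed)
    condition = np.zeros(width)  # assumed starting condition for the first tile
    tiles = []
    for _ in range(n_tiles):
        noise = rng.standard_normal((height, width))
        tile = toy_generator(condition, noise)
        tiles.append(tile)
        condition = tile[-1]  # the next tile is conditioned on this tile's last row
    return np.concatenate(tiles, axis=0)


scan = generate_scan(n_tiles=5)
print(scan.shape)
```

Because each tile depends only on the previous tile's final row and fresh noise, the loop can run for as many tiles as needed. In a real system the stand-in would be replaced by the trained generator, and the conditioning input could also carry user-specified seabed topography.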
