UniSAr: A Unified Structure-Aware Autoregressive Language Model for Text-to-SQL

Apr 13, 2022

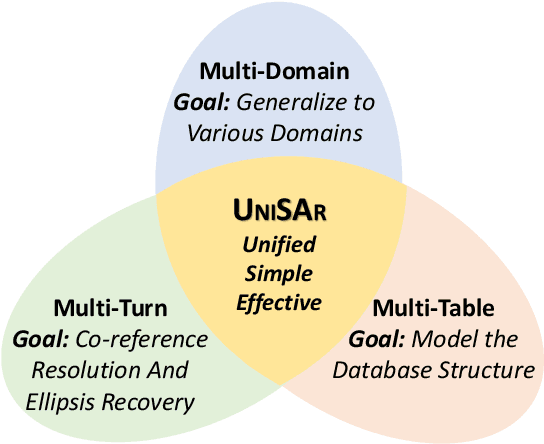

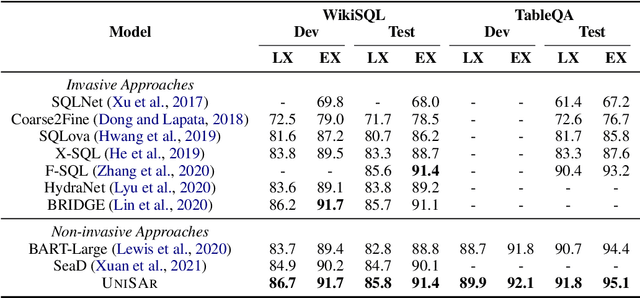

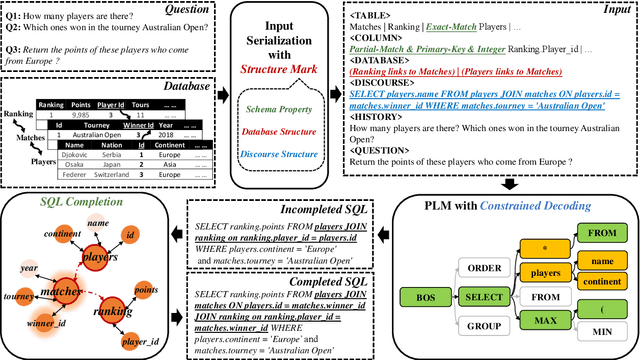

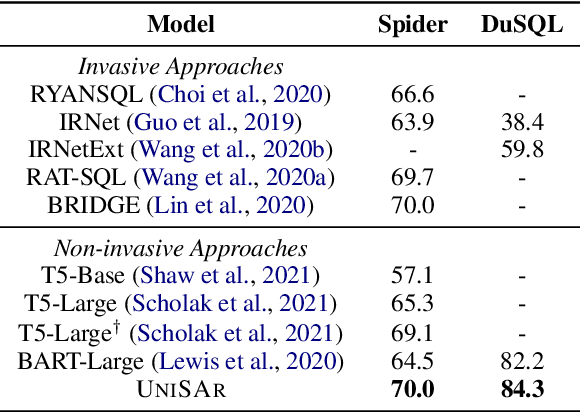

Existing text-to-SQL semantic parsers are typically designed for particular settings, such as handling queries that span multiple tables, domains, or turns, which makes them ineffective when applied to other settings. We present UniSAr (Unified Structure-Aware Autoregressive Language Model), which directly uses an off-the-shelf language model architecture and demonstrates consistently high performance across these settings. Specifically, UniSAr adds three non-invasive extensions that make an existing autoregressive language model structure-aware: (1) structure marks that encode the database schema, the conversation context, and their relationships; (2) constrained decoding, which produces well-structured SQL for a given database schema; and (3) SQL completion, which fills in potentially missing JOIN relationships based on the database schema. On seven well-known text-to-SQL datasets covering multi-domain, multi-table, and multi-turn settings, UniSAr performs comparably to or better than the most advanced specifically designed text-to-SQL models. Importantly, because UniSAr is non-invasive, other core model advances in text-to-SQL can also adopt our extensions to further enhance performance.
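To make the idea of SQL completion concrete, the following is a minimal sketch of how a missing JOIN path could be recovered from foreign-key links in a schema. It is not the authors' implementation: the toy schema, the table and column names, and the helper functions `build_graph`, `join_path`, and `complete_from_clause` are all hypothetical, and a breadth-first shortest-path search is assumed as the way to pick the bridging tables.

```python
from collections import deque

# Hypothetical toy schema (an assumption for illustration only):
# each entry links two tables through a foreign-key column pair.
FOREIGN_KEYS = {
    ("singer", "singer_in_concert"): (
        "singer.singer_id", "singer_in_concert.singer_id"),
    ("concert", "singer_in_concert"): (
        "concert.concert_id", "singer_in_concert.concert_id"),
}


def build_graph(foreign_keys):
    """Turn foreign-key pairs into an undirected adjacency list."""
    graph = {}
    for (a, b), (col_a, col_b) in foreign_keys.items():
        graph.setdefault(a, []).append((b, col_a, col_b))
        graph.setdefault(b, []).append((a, col_b, col_a))
    return graph


def join_path(graph, start, goal):
    """Breadth-first search for the shortest chain of joins from start to goal.

    Returns a list of (joined_table, left_column, right_column) steps,
    an empty list if start == goal, or None if no path exists.
    """
    queue = deque([start])
    parent = {start: None}
    while queue:
        table = queue.popleft()
        if table == goal:
            break
        for neighbor, left_col, right_col in graph.get(table, []):
            if neighbor not in parent:
                parent[neighbor] = (table, left_col, right_col)
                queue.append(neighbor)
    if goal not in parent:
        return None
    # Walk back from the goal to the start, then reverse the steps.
    steps = []
    node = goal
    while parent[node] is not None:
        prev, left_col, right_col = parent[node]
        steps.append((node, left_col, right_col))
        node = prev
    steps.reverse()
    return steps


def complete_from_clause(graph, start, goal):
    """Build a FROM clause that includes any bridging tables."""
    steps = join_path(graph, start, goal)
    if steps is None:
        return None
    clause = f"FROM {start}"
    for table, left_col, right_col in steps:
        clause += f" JOIN {table} ON {left_col} = {right_col}"
    return clause


GRAPH = build_graph(FOREIGN_KEYS)
print(complete_from_clause(GRAPH, "singer", "concert"))
```

In this sketch, a query that mentions only `singer` and `concert` gets the bridging table `singer_in_concert` added automatically, which is the kind of gap the abstract describes SQL completion as filling. A shortest-path choice is only one plausible heuristic; schemas with several equally short paths would need an additional tie-breaking rule.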
