Unified Audio Event Detection

Paper and Code

Sep 13, 2024

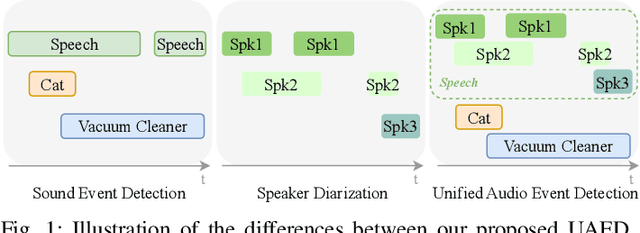

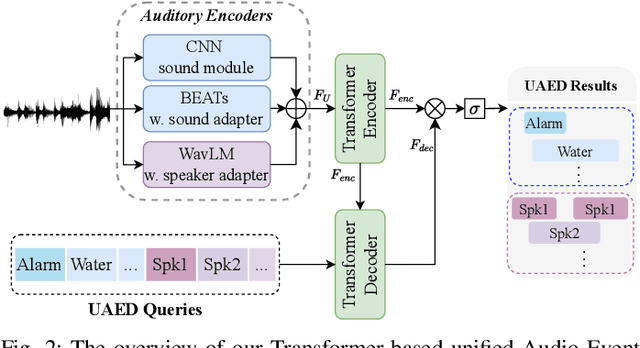

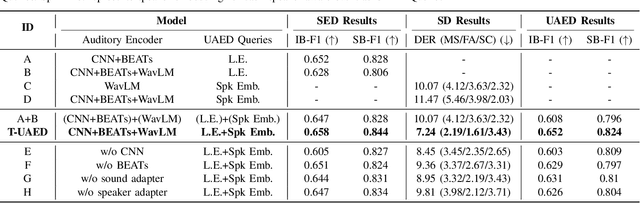

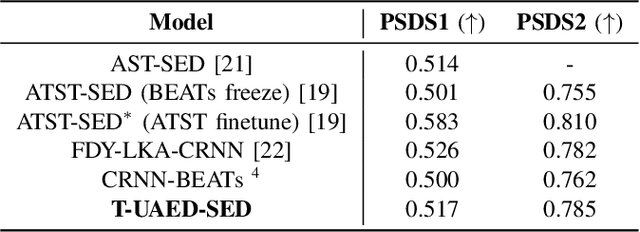

Sound Event Detection (SED) detects regions of sound events, while Speaker Diarization (SD) segments speech conversations and attributes each segment to an individual speaker. In SED, all speaker segments are classified as a single speech event, while in SD, non-speech sounds are treated merely as background noise. Thus, each task provides only a partial analysis of complex audio scenarios that involve both speech conversation and non-speech sounds. In this paper, we introduce a novel task called Unified Audio Event Detection (UAED) for comprehensive audio analysis. UAED explores the synergy between the SED and SD tasks, simultaneously detecting non-speech sound events and fine-grained speech events based on speaker identities. To tackle this task, we propose a Transformer-based UAED (T-UAED) framework and construct the UAED Data, derived from the LibriSpeech dataset and the DESED soundbank. Experiments demonstrate that the proposed framework effectively exploits task interactions and substantially outperforms a baseline that simply combines the outputs of SED and SD models. T-UAED also shows its versatility by performing comparably to specialized models for the individual SED and SD tasks on the DESED and CALLHOME datasets.
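To make the unified output format concrete, here is a minimal sketch of one way a baseline that combines SED and SD outputs could work. The abstract does not describe how the baseline merges the two outputs, so everything here is an assumption for illustration: the `Event` tuple, the `combine_sed_sd` function, the `"Speech"` class name, and the speaker labels are hypothetical, not taken from the paper or its code.

```python
from typing import List, NamedTuple


class Event(NamedTuple):
    """A detected audio event (hypothetical format, not from the paper)."""
    onset: float   # start time in seconds
    offset: float  # end time in seconds
    label: str     # sound class or speaker identity


def combine_sed_sd(
    sed_events: List[Event],
    sd_segments: List[Event],
    speech_label: str = "Speech",  # assumed name of the generic SED speech class
) -> List[Event]:
    """Merge SED and SD outputs into one UAED-style event list.

    The generic speech events from SED are dropped and replaced by the
    per-speaker segments from SD; non-speech events are kept unchanged.
    """
    non_speech = [e for e in sed_events if e.label != speech_label]
    merged = non_speech + list(sd_segments)
    # Sort by time so overlapping events from both systems interleave.
    return sorted(merged, key=lambda e: (e.onset, e.offset, e.label))


# Illustrative inputs: SED sees one long speech region plus a dog bark,
# while SD splits the same speech region between two speakers.
sed_output = [Event(0.0, 4.0, "Speech"), Event(1.5, 2.5, "Dog")]
sd_output = [Event(0.0, 2.0, "speaker_1"), Event(1.8, 4.0, "speaker_2")]

unified = combine_sed_sd(sed_output, sd_output)
for event in unified:
    print(f"{event.onset:.1f}-{event.offset:.1f}s  {event.label}")
```

In a combination like this the two models run independently and neither sees the other's predictions. That is the limitation the abstract contrasts with T-UAED, which is described as exploiting interactions between the two tasks.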
