Understanding Visual Ads by Aligning Symbols and Objects using Co-Attention


Jul 04, 2018

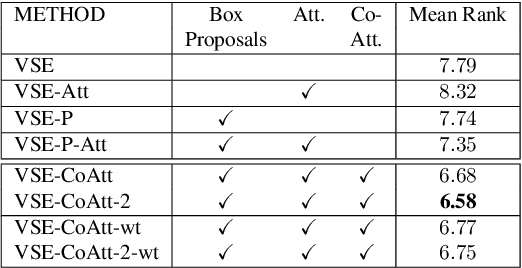

We tackle the problem of understanding visual ads: given an ad image, our goal is to rank appropriate human-generated statements describing the purpose of the ad. This problem is generally addressed by jointly embedding images and candidate statements to establish correspondence. Decoding a visual ad requires inferring both the semantic and symbolic nuances referenced in an image, and prior methods may fail to capture such associations, especially with weakly annotated symbols. To create better embeddings, we leverage an attention mechanism to associate image proposals with symbols and thus effectively aggregate information from aligned multimodal representations. We propose a multihop co-attention mechanism that iteratively refines the attention map to ensure accurate attention estimation. Our attention-based embedding model is learned end-to-end, guided by a max-margin loss function. We show that our model outperforms other baselines on the benchmark Ad dataset and present qualitative results that highlight the advantages of multihop co-attention.
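The abstract gives no equations, so the following is only a minimal NumPy sketch of the two ideas it names: attention between image proposals and symbols that is refined over several hops, and a max-margin ranking loss over statements. Every function name, the dot-product affinity, the uniform initialisation, the cosine similarity, and the margin value are assumptions made for illustration; they are not taken from the paper and should not be read as the authors' implementation.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()

def multihop_co_attention(regions, symbols, hops=2):
    """Hypothetical co-attention sketch.

    regions: (R, d) features of image proposals.
    symbols: (S, d) embeddings of symbol labels.
    Returns the attended image embedding (d,) and region weights (R,).
    """
    affinity = regions @ symbols.T              # (R, S) dot-product affinity (assumed)
    region_attn = np.full(len(regions), 1.0 / len(regions))  # start uniform
    for _ in range(hops):
        # Weight symbols by how well they match the currently attended regions.
        symbol_attn = softmax(region_attn @ affinity)         # (S,)
        # Re-weight regions by how well they match the attended symbols.
        region_attn = softmax(affinity @ symbol_attn)         # (R,)
    return region_attn @ regions, region_attn

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def max_margin_loss(image_emb, pos_stmt, neg_stmts, margin=0.2):
    """Hinge ranking loss: the matching statement should score at least
    `margin` higher than each non-matching statement (margin is assumed)."""
    pos = cosine(image_emb, pos_stmt)
    return sum(max(0.0, margin - pos + cosine(image_emb, n)) for n in neg_stmts)
```

With more hops, the region weights are repeatedly re-estimated from the symbol weights and vice versa, which is one simple way to read "iteratively refines the attention map". At ranking time, candidate statements would be sorted by their similarity to the attended image embedding.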
