Uncertainty-based Continual Learning with Adaptive Regularization


May 28, 2019

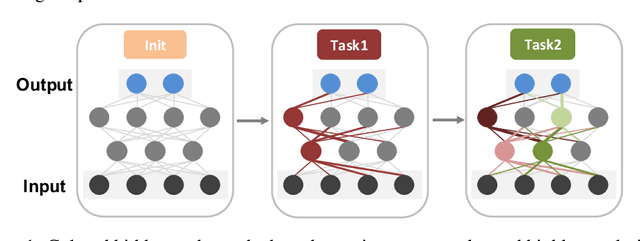

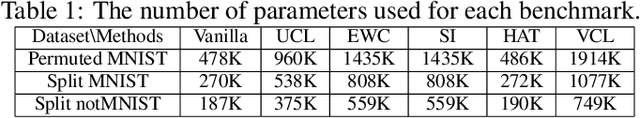

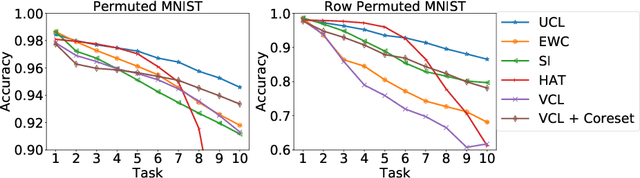

We introduce a new regularization-based continual learning algorithm, dubbed Uncertainty-regularized Continual Learning (UCL), that stores a much smaller number of additional parameters for regularization terms than recent state-of-the-art methods. Our approach builds upon the Bayesian learning framework, but offers a fresh interpretation of the variational-approximation-based regularization term and defines a notion of "uncertainty" for each hidden node in the network. The regularization parameter of each weight is then set to be large when the uncertainty of either of the two nodes that the weight connects is small, since weights connected to an important node should be updated less when a new task arrives. Moreover, we add two additional regularization terms: one that promotes freezing the weights identified as important (i.e., certain) for past tasks, and another that gives flexibility to control the actively learning parameters for a new task by gracefully forgetting what was learned before. In our experiments, we show that UCL outperforms most recent state-of-the-art baselines on both supervised learning and reinforcement learning benchmarks.
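The abstract does not give the exact formula, so the following is only a minimal sketch of the node-wise idea under stated assumptions. It is not the UCL implementation. The sketch assumes one scalar uncertainty σ per node and takes the strength of the weight from input node j to output node i to be max(1/σ_out[i], 1/σ_in[j]). This is a hypothetical choice that is large whenever either endpoint node is certain. It then applies a quadratic penalty to the change from the previous task's weights. The function names `regularization_strength`, `uncertainty_penalty`, and `stored_values` are illustrative. The last function contrasts per-node storage with keeping one importance value per weight.

```python
from typing import Dict, List

Matrix = List[List[float]]


def regularization_strength(sigma_in: List[float], sigma_out: List[float]) -> Matrix:
    """Hypothetical per-weight strength built from per-node uncertainties.

    Entry [i][j] belongs to the weight from input node j to output node i.
    Assumed rule (not taken from the paper): max(1/sigma_out[i], 1/sigma_in[j]),
    so the strength is large when EITHER connected node has small uncertainty.
    """
    if any(s <= 0 for s in sigma_in + sigma_out):
        raise ValueError("uncertainties must be positive")
    return [[max(1.0 / s_out, 1.0 / s_in) for s_in in sigma_in] for s_out in sigma_out]


def uncertainty_penalty(weights: Matrix, prev_weights: Matrix, strength: Matrix) -> float:
    """Quadratic penalty: sum over i, j of strength[i][j] * (w[i][j] - w_prev[i][j])**2."""
    total = 0.0
    for w_row, p_row, s_row in zip(weights, prev_weights, strength):
        for w, p, s in zip(w_row, p_row, s_row):
            total += s * (w - p) ** 2
    return total


def stored_values(n_in: int, n_out: int) -> Dict[str, int]:
    """Extra values kept for one fully connected layer (illustrative count).

    node_wise: one uncertainty per input node and per output node (assumption).
    weight_wise: one importance value per weight.
    """
    return {"node_wise": n_in + n_out, "weight_wise": n_in * n_out}
```

For example, with `sigma_in = [0.1, 1.0]` and `sigma_out = [1.0, 0.5, 2.0]`, every weight leaving the certain input node (σ = 0.1) gets strength 10.0. Moving one of those weights by 1.0 therefore costs ten times as much as moving a weight whose input node has σ = 1.0 and whose output node has σ = 1.0 or 2.0. The storage count grows with the number of nodes rather than the number of weights. This mirrors, in spirit, the abstract's claim about storing fewer additional parameters.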
