Transformer-based Detection of Multiword Expressions in Flower and Plant Names

Paper and Code

Sep 20, 2022

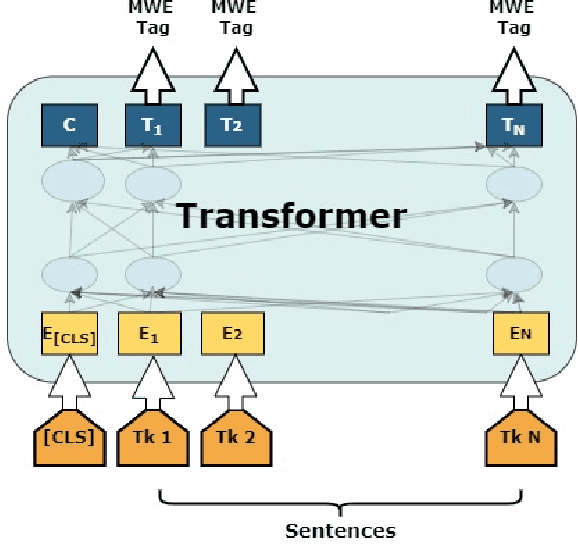

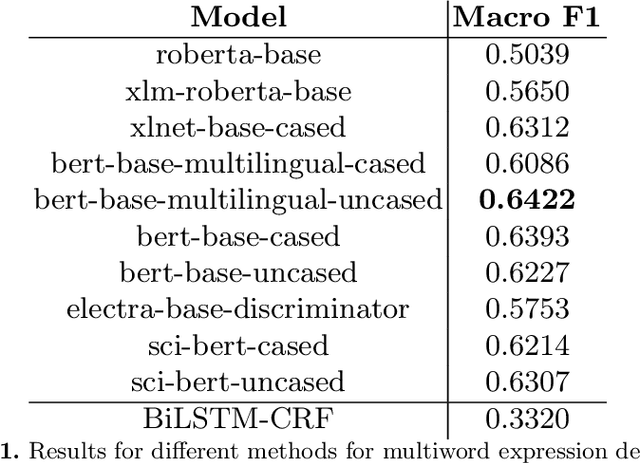

Multiword expression (MWE) is a sequence of words which collectively present a meaning which is not derived from its individual words. The task of processing MWEs is crucial in many natural language processing (NLP) applications, including machine translation and terminology extraction. Therefore, detecting MWEs in different domains is an important research topic. In this paper, we explore state-of-the-art neural transformers in the task of detecting MWEs in flower and plant names. We evaluate different transformer models on a dataset created from Encyclopedia of Plants and Flower. We empirically show that transformer models outperform the previous neural models based on long short-term memory (LSTM).

* Submitted to The 5th Workshop on Multi-word Units in Machine

Translation and Translation Technology at Europhras2022

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge