Towards Generalized and Distributed Privacy-Preserving Representation Learning

Oct 17, 2020

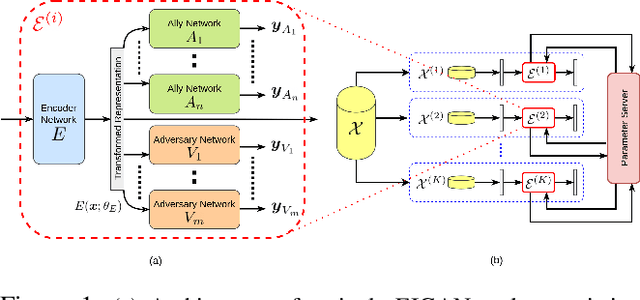

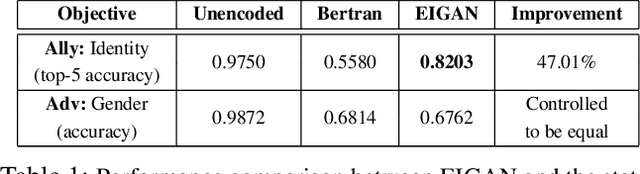

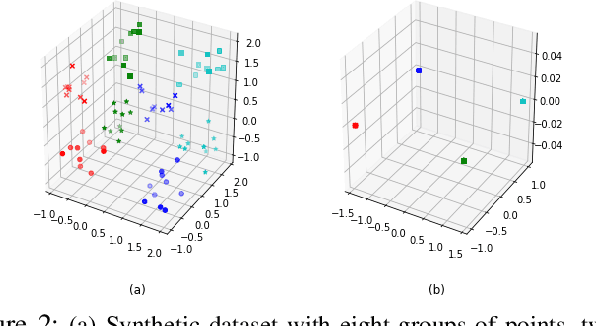

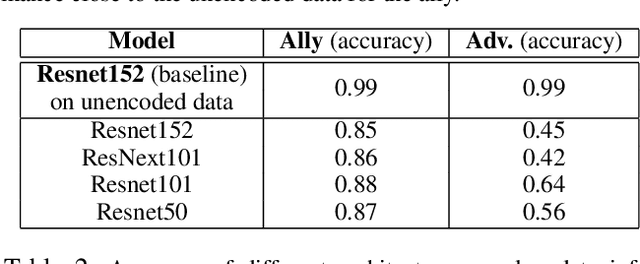

We study the problem of learning data representations that are private yet informative, i.e., providing information about intended "ally" targets while obfuscating sensitive "adversary" attributes. We propose a novel framework, Exclusion-Inclusion Generative Adversarial Network (EIGAN), that generalizes existing adversarial privacy-preserving representation learning (PPRL) approaches to generate data encodings that account for multiple possibly overlapping ally and adversary targets. Preserving privacy is even more difficult when the data is collected across multiple distributed nodes, which for privacy reasons may not wish to share their data even for PPRL training. Thus, learning such data representations at each node in a distributed manner (i.e., without transmitting source data) is of particular importance. This motivates us to develop D-EIGAN, the first distributed PPRL method, based on federated learning with fractional parameter sharing to account for communication resource limitations. We theoretically analyze the behavior of adversaries under the optimal EIGAN and D-EIGAN encoders and consider the impact of dependencies among ally and adversary tasks on the encoder performance. Our experiments on real-world and synthetic datasets demonstrate the advantages of EIGAN encodings in terms of accuracy, robustness, and scalability; in particular, we show that EIGAN outperforms the previous state-of-the-art by a significant accuracy margin (47% improvement). The experiments further reveal that D-EIGAN's performance is consistent with EIGAN under different node data distributions and is resilient to communication constraints.
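
The abstract mentions federated learning with fractional parameter sharing but does not spell out the mechanism. As a rough illustration only, the sketch below assumes one plausible reading: in each synchronization round, the nodes exchange a randomly chosen fraction of their encoder parameters, those coordinates are averaged, and the remaining parameters stay local. The function names (`select_shared_indices`, `fractional_average`), the shared random seed, and the flat-list parameter layout are all hypothetical and are not taken from the paper.

```python
import random


def select_shared_indices(num_params, fraction, rng):
    """Pick the parameter indices exchanged in this round (assumed scheme)."""
    k = max(1, round(fraction * num_params))
    return sorted(rng.sample(range(num_params), k))


def fractional_average(node_params, fraction, seed=0):
    """Run one illustrative synchronization round.

    node_params: list of per-node parameter vectors (equal-length lists of floats).
    fraction:    share of coordinates exchanged, in (0, 1].
    Returns the updated per-node parameters and the shared indices.
    """
    rng = random.Random(seed)  # assumption: nodes agree on the same subset
    num_params = len(node_params[0])
    shared = select_shared_indices(num_params, fraction, rng)

    updated = [list(p) for p in node_params]  # copy; inputs are not modified
    for i in shared:
        # Average only the shared coordinate across all nodes.
        mean_i = sum(p[i] for p in node_params) / len(node_params)
        for p in updated:
            p[i] = mean_i
    return updated, shared


if __name__ == "__main__":
    nodes = [[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0]]
    new_params, shared = fractional_average(nodes, fraction=0.5)
    print("shared indices:", shared)
    print("node 0:", new_params[0])
    print("node 1:", new_params[1])
```

With `fraction=1.0` this reduces to plain parameter averaging across nodes; smaller fractions cut the per-round communication proportionally, which is the trade-off the abstract refers to as resilience to communication constraints.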
