Toward Pareto Efficient Fairness-Utility Trade-off in Recommendation through Reinforcement Learning

Paper and Code

Jan 01, 2022

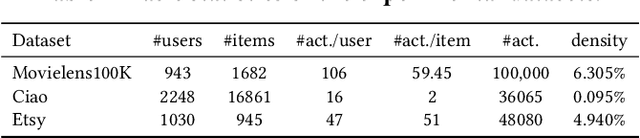

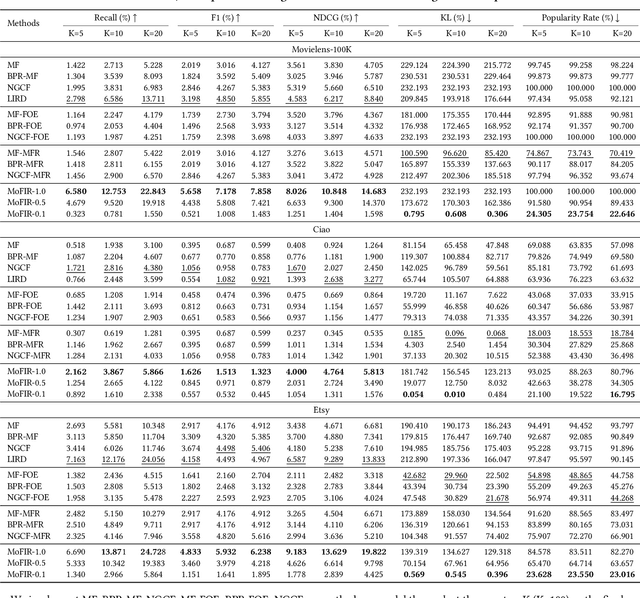

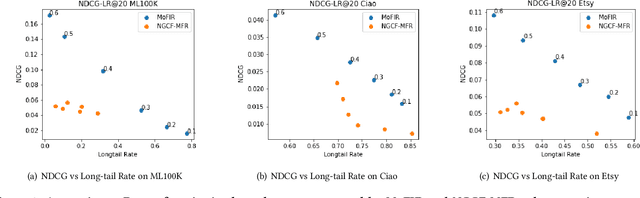

Fairness in recommendation is becoming increasingly important as Recommender Systems touch and influence more and more people in their daily lives. Most existing fairness-aware recommendation algorithms solve a constrained optimization problem: they impose a constraint on the level of fairness while optimizing the main recommendation objective, e.g., CTR. While this alleviates the impact of unfair recommendations, such an approach may significantly compromise recommendation accuracy because of the inherent trade-off between fairness and utility. This motivates us to treat the two as conflicting objectives and to explore the optimal trade-off between them. One natural approach is to seek a Pareto efficient solution, which guarantees an optimal compromise between utility and fairness. Moreover, considering the needs of real-world e-commerce platforms, it would be more desirable to generalize over the whole Pareto Frontier, so that decision-makers can specify any preference of one objective over another based on their current business needs. Therefore, in this work, we propose a fairness-aware recommendation framework based on multi-objective reinforcement learning, called MoFIR, which learns a single parametric representation for optimal recommendation policies over the space of all possible preferences. Specifically, we modify traditional DDPG by introducing a conditioned network, which conditions the networks directly on these preferences and outputs Q-value vectors. Experiments on several real-world recommendation datasets verify the superiority of our framework on both fairness metrics and recommendation measures when compared with all other baselines. We also extract the approximate Pareto Frontier generated by MoFIR on real-world datasets and compare it with state-of-the-art fairness methods.
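To make the notions of a Pareto Frontier and a preference over objectives concrete, the following is a minimal illustrative sketch, not the MoFIR implementation. It assumes two objectives (utility, fairness) where higher is better, uses made-up candidate scores, and assumes that a preference is applied to a value vector by a simple weighted sum; the abstract does not state how MoFIR combines preferences with its Q-value vectors. The helper names `scalarize`, `dominates`, and `pareto_front` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (utility, fairness), higher is better for both


def scalarize(values: Sequence[float], preference: Sequence[float]) -> float:
    """Collapse a value vector to a scalar with a weighted sum.

    Assumption: linear scalarization, chosen here only for illustration.
    """
    if len(values) != len(preference):
        raise ValueError("values and preference must have the same length")
    return sum(w * v for w, v in zip(preference, values))


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (utility, fairness) scores of five candidate policies.
candidates: List[Point] = [
    (0.90, 0.20),
    (0.80, 0.50),
    (0.60, 0.70),
    (0.55, 0.60),  # dominated by (0.60, 0.70)
    (0.30, 0.90),
]

front = pareto_front(candidates)

# A decision-maker who weights utility heavily versus one who weights fairness heavily.
utility_first = max(front, key=lambda p: scalarize(p, (0.8, 0.2)))
fairness_first = max(front, key=lambda p: scalarize(p, (0.2, 0.8)))

print("Pareto front:", front)
print("utility-first choice:", utility_first)
print("fairness-first choice:", fairness_first)
```

In this toy setting, changing the preference vector selects a different point on the same frontier, which is the kind of flexibility the abstract describes for decision-makers.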
