Threats and Defenses in Federated Learning Life Cycle: A Comprehensive Survey and Challenges

Paper and Code

Jul 11, 2024

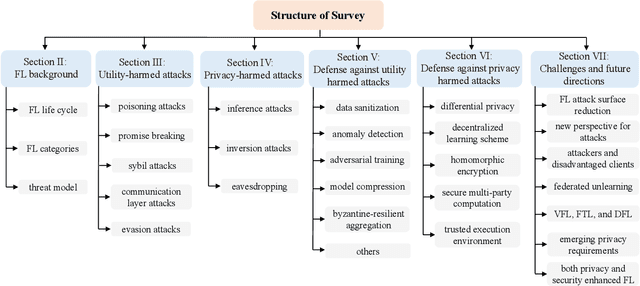

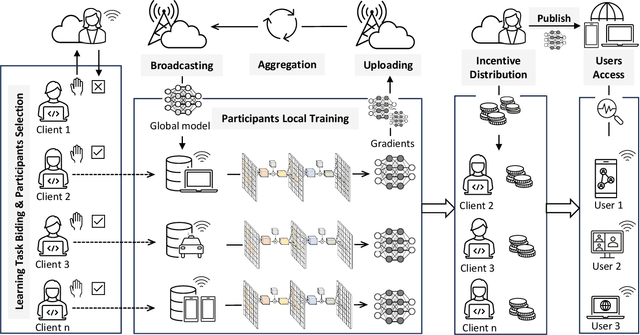

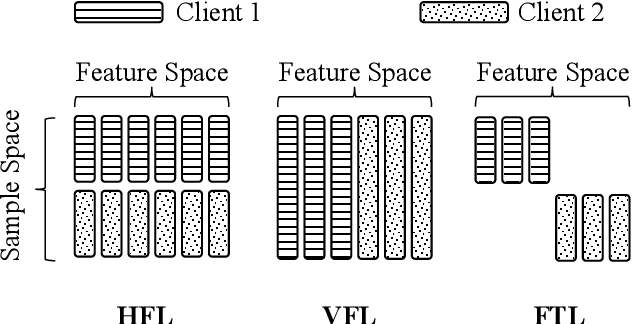

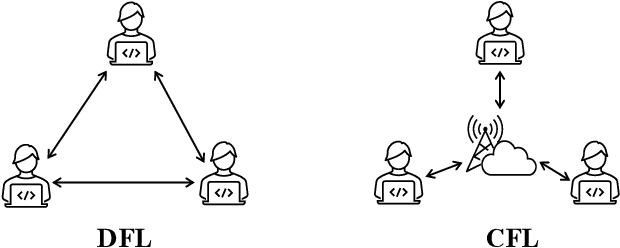

Federated Learning (FL) offers innovative solutions for privacy-preserving collaborative machine learning (ML). Despite its promising potential, FL is vulnerable to various attacks due to its distributed nature, affecting the entire life cycle of FL services. These threats can harm the model's utility or compromise participants' privacy, either directly or indirectly. In response, numerous defense frameworks have been proposed, demonstrating effectiveness in specific settings and scenarios. To provide a clear understanding of the current research landscape, this paper reviews the most representative and state-of-the-art threats and defense frameworks throughout the FL service life cycle. We start by identifying FL threats that harm utility and privacy, including those with potential or direct impacts. Then, we dive into the defense frameworks, analyze the relationship between threats and defenses, and compare the trade-offs among different defense strategies. Finally, we summarize current research bottlenecks and offer insights into future research directions to conclude this survey. We hope this survey sheds light on trustworthy FL research and contributes to the FL community.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge