The ParallelEye Dataset: Constructing Large-Scale Artificial Scenes for Traffic Vision Research


Dec 22, 2017

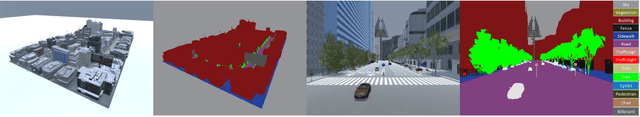

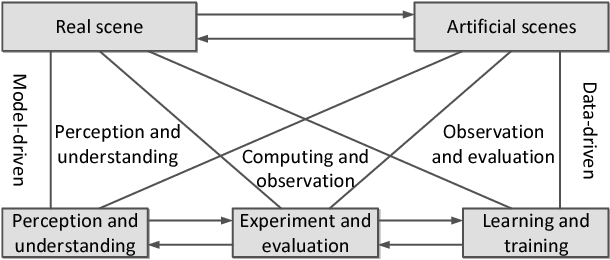

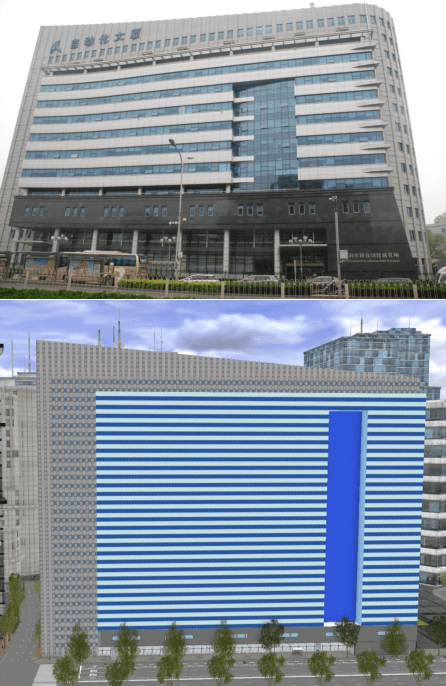

Video image datasets play an essential role in the design and evaluation of traffic vision algorithms. Nevertheless, a longstanding inconvenience is that manually collecting and annotating large-scale, diversified datasets from real scenes is time-consuming and error-prone. For this reason, virtual datasets have begun to serve as a proxy for real datasets. In this paper, we propose to construct large-scale artificial scenes for traffic vision research and generate a new virtual dataset called "ParallelEye". First, street map data is used to build a 3D scene model of the Zhongguancun area in Beijing. Then, computer graphics, virtual reality, and rule modeling technologies are used to synthesize large-scale, realistic virtual urban traffic scenes whose fidelity and geography match the real world well. Furthermore, the Unity3D platform is used to render the artificial scenes and generate accurate ground-truth labels, e.g., semantic/instance segmentation, object bounding boxes, object tracking, optical flow, and depth. The environmental conditions in the artificial scenes can be controlled completely. As a result, we present a viable implementation pipeline for constructing large-scale artificial scenes for traffic vision research. The experimental results demonstrate that this pipeline can generate photorealistic virtual datasets with low modeling time and highly accurate labels.
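
To illustrate why rendered scenes can yield accurate labels at low cost, the sketch below derives object bounding boxes from a per-pixel instance-ID mask of the kind a renderer can output alongside each frame. This is only an illustration under stated assumptions, not the ParallelEye implementation: the function name `boxes_from_instance_mask`, the nested-list mask layout, and the convention that ID 0 means background are all hypothetical.

```python
from typing import Dict, List, Tuple

# (x_min, y_min, x_max, y_max) in inclusive pixel coordinates
Box = Tuple[int, int, int, int]


def boxes_from_instance_mask(mask: List[List[int]], background: int = 0) -> Dict[int, Box]:
    """Return the tight bounding box of every instance ID found in the mask.

    Assumes `mask[y][x]` holds an integer instance ID and that `background`
    marks pixels belonging to no object (both are illustrative conventions).
    """
    boxes: Dict[int, Box] = {}
    for y, row in enumerate(mask):
        for x, inst_id in enumerate(row):
            if inst_id == background:
                continue
            if inst_id not in boxes:
                # First pixel seen for this instance: the box is a single pixel.
                boxes[inst_id] = (x, y, x, y)
            else:
                # Grow the box so that it also covers the current pixel.
                x0, y0, x1, y1 = boxes[inst_id]
                boxes[inst_id] = (min(x0, x), min(y0, y), max(x1, x), max(y1, y))
    return boxes


# Toy 4x5 mask containing two objects (IDs 1 and 2).
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 0],
]
print(boxes_from_instance_mask(mask))
```

Because the mask comes from the renderer rather than from a human annotator, boxes computed this way are exact by construction for the visible pixels of each object.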
