TetraSphere: A Neural Descriptor for O(3)-Invariant Point Cloud Classification


Nov 26, 2022

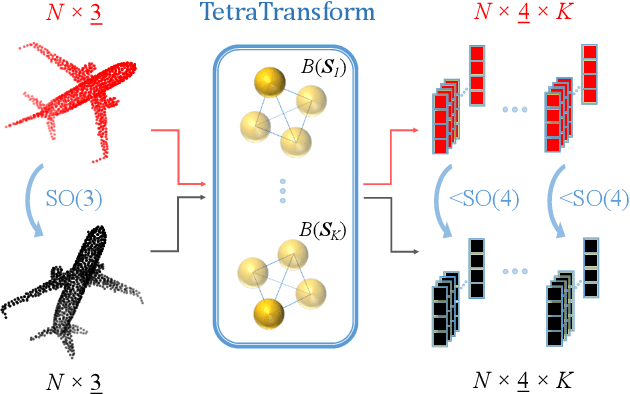

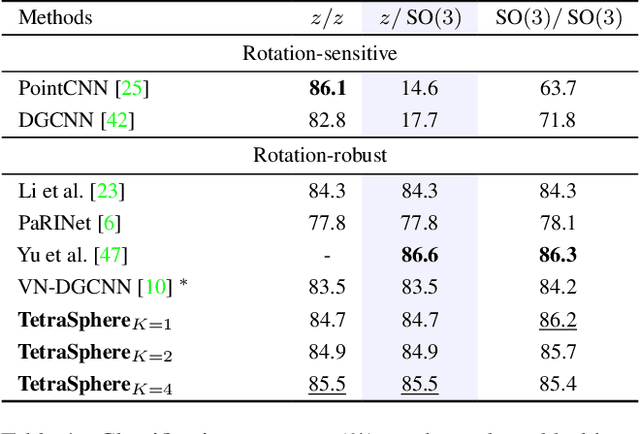

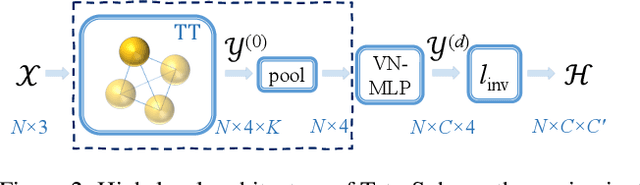

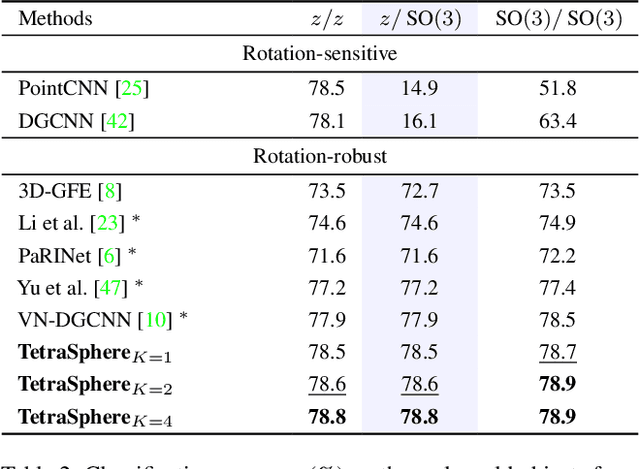

Rotation invariance is an important requirement for the analysis of 3D point clouds. In this paper, we present TetraSphere, a learnable descriptor for rotation- and reflection-invariant 3D point cloud classification based on the recently introduced steerable 3D spherical neurons and vector neurons, as well as the Gram matrix method. Taking 3D points as input, TetraSphere applies TetraTransform, which lifts the 3D input to 4D, and extracts rotation-equivariant features. It then computes pair-wise inner products of these features, which are O(3)-invariant. Remarkably, TetraSphere can be embedded into common point cloud processing models. We demonstrate its effectiveness and versatility by integrating it into DGCNN and VN-DGCNN and classifying arbitrarily rotated ModelNet40 shapes. We show that using TetraSphere improves the performance of the respective baseline methods and reduces their computational complexity by about 10%.
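The invariance of pair-wise inner products can be checked numerically with a minimal NumPy sketch. This is not the TetraSphere implementation: it skips TetraTransform and the spherical neurons and works directly in 3D. The helper names (`random_orthogonal`, `equivariant_features`, `gram_invariants`) and the random channel-mixing weights are hypothetical stand-ins for learned equivariant layers. The only property they are assumed to share with such layers is that transforming the input by an orthogonal matrix R transforms the features by the same R.

```python
import numpy as np


def random_orthogonal(rng):
    """Sample a 3x3 orthogonal matrix (a rotation or a reflection) via QR."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    # Rescale columns by the signs of diag(r); the result is still orthogonal.
    return q * np.sign(np.diag(r))


def equivariant_features(points, weights):
    """Hypothetical stand-in for an equivariant layer.

    Mixes the N input points into K feature vectors: (K, N) @ (N, 3) -> (K, 3).
    Mixing acts on the point axis only, so it commutes with x -> x @ R.T.
    """
    return weights @ points


def gram_invariants(features):
    """Pair-wise inner products of the K feature vectors: a (K, K) Gram matrix."""
    return features @ features.T


rng = np.random.default_rng(0)
points = rng.standard_normal((128, 3))    # toy point cloud
weights = rng.standard_normal((4, 128))   # illustrative weights, not learned
R = random_orthogonal(rng)                # arbitrary element of O(3)

g_original = gram_invariants(equivariant_features(points, weights))
g_transformed = gram_invariants(equivariant_features(points @ R.T, weights))

# The two Gram matrices should agree up to floating-point error.
max_diff = float(np.abs(g_original - g_transformed).max())
print(max_diff)
```

The check works because, for features F, the transformed Gram matrix is (F Rᵀ)(F Rᵀ)ᵀ = F Rᵀ R Fᵀ = F Fᵀ whenever Rᵀ R = I. That condition holds for reflections as well as rotations, so the invariance covers all of O(3) rather than only SO(3).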
