Teaching Temporal Logics to Neural Networks

Paper and Code

Mar 06, 2020

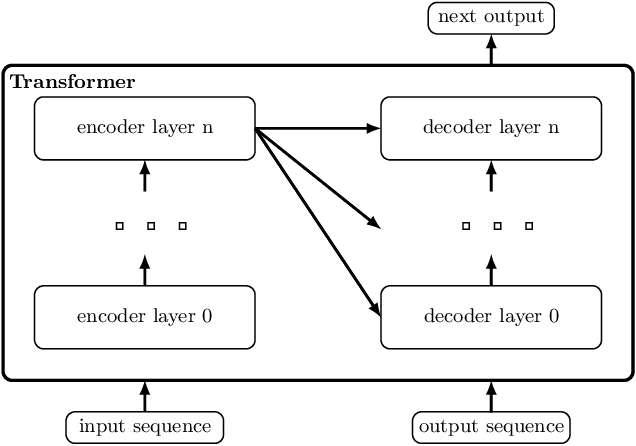

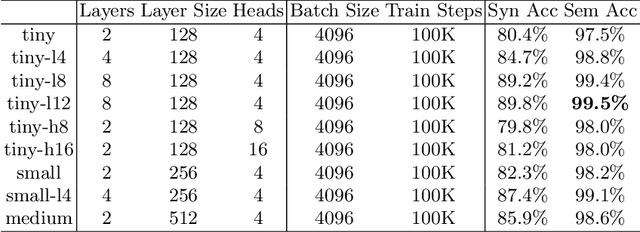

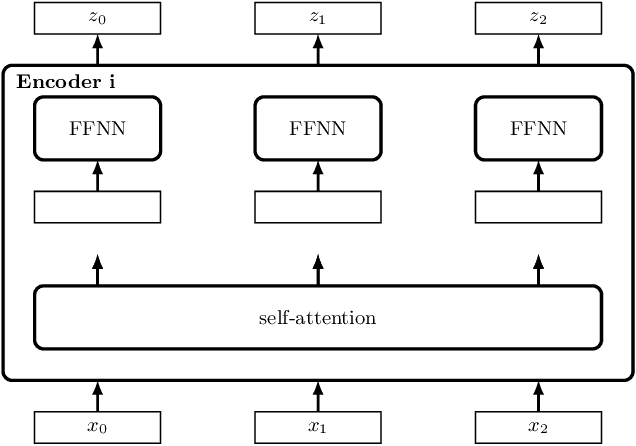

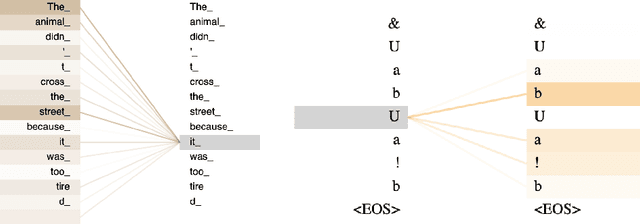

We show that a deep neural network can learn the semantics of linear-time temporal logic (LTL). As a challenging task that requires a deep understanding of the LTL semantics, we show that our network can solve the trace generation problem for LTL: given a satisfiable LTL formula, find a trace that satisfies the formula. We frame trace generation as a translation task, i.e., translating from formulas to satisfying traces, and train an off-the-shelf implementation of the Transformer, a recently introduced deep learning architecture proposed for natural language processing tasks. We provide a detailed analysis of our experimental results, comparing multiple hyperparameter settings and formula representations. After several hours of training on a single GPU, the results were surprising: on a held-out test set, the Transformer returns the syntactically equivalent trace in 89% of the cases. Most of the "mispredictions", however, still satisfy the given LTL formula; overall, more than 99% of the predicted traces do. In other words, the Transformer generalized from imperfect training data to the semantics of LTL.
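To make the distinction between a syntactically equivalent trace and a merely satisfying one concrete, here is a minimal sketch of an LTL satisfaction check on an ultimately periodic ("lasso") trace. It is an illustration only, not the authors' code: the nested-tuple formula encoding, the `holds` function, and the prefix/loop trace representation are all assumptions made for this example.

```python
def holds(formula, prefix, loop):
    """Check whether the infinite trace prefix . loop^omega satisfies formula.

    Hypothetical encoding (an assumption of this sketch):
      formula: nested tuples such as ("U", ("ap", "a"), ("ap", "b"))
      prefix, loop: lists of sets of atomic propositions; loop is non-empty
    """
    if not loop:
        raise ValueError("loop must contain at least one step")
    steps = list(prefix) + list(loop)
    n = len(steps)

    def succ(i):
        # The last position wraps around to the first position of the loop.
        return i + 1 if i + 1 < n else len(prefix)

    def sat(f):
        # Returns a list: truth value of f at every position of the lasso.
        op = f[0]
        if op == "true":
            return [True] * n
        if op == "ap":
            return [f[1] in step for step in steps]
        if op == "not":
            return [not v for v in sat(f[1])]
        if op == "and":
            a, b = sat(f[1]), sat(f[2])
            return [x and y for x, y in zip(a, b)]
        if op == "or":
            a, b = sat(f[1]), sat(f[2])
            return [x or y for x, y in zip(a, b)]
        if op == "X":
            a = sat(f[1])
            return [a[succ(i)] for i in range(n)]
        if op == "U":
            a, b = sat(f[1]), sat(f[2])
            u = [False] * n
            # Least fixpoint of u = b or (a and next u); n rounds suffice
            # because the lasso has only n distinct positions.
            for _ in range(n):
                u = [b[i] or (a[i] and u[succ(i)]) for i in range(n)]
            return u
        if op == "F":  # F p  ==  true U p
            return sat(("U", ("true",), f[1]))
        if op == "G":  # G p  ==  not F not p
            return sat(("not", ("F", ("not", f[1]))))
        raise ValueError(f"unknown operator: {op!r}")

    return sat(formula)[0]


a_until_b = ("U", ("ap", "a"), ("ap", "b"))
trace_1 = ([{"a"}, {"a"}], [{"b"}])   # a, a, then b forever
trace_2 = ([], [{"b"}])               # b forever
both_satisfy = holds(a_until_b, *trace_1) and holds(a_until_b, *trace_2)
```

The two traces at the end differ syntactically, yet both satisfy `a U b`. A predicted trace that differs from the one in the test set can therefore still be a correct answer, which is the sense in which a "misprediction" can be semantically right.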
