Teaching Neural Module Networks to Do Arithmetic

Paper and Code

Oct 06, 2022

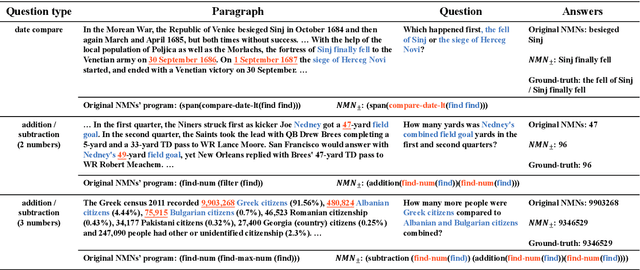

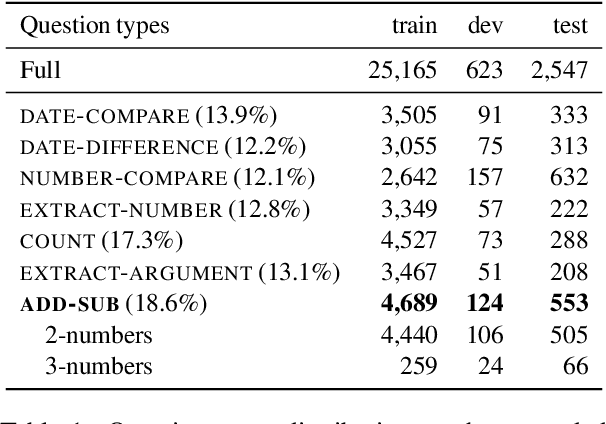

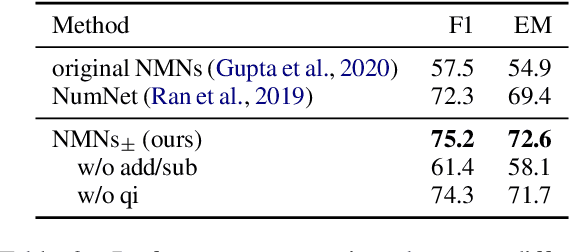

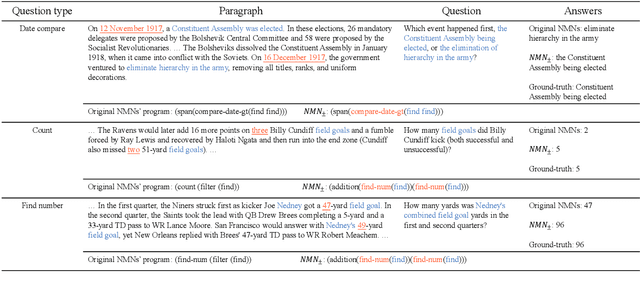

Answering complex questions that require multi-step multi-type reasoning over raw text is challenging, especially when conducting numerical reasoning. Neural Module Networks(NMNs), follow the programmer-interpreter framework and design trainable modules to learn different reasoning skills. However, NMNs only have limited reasoning abilities, and lack numerical reasoning capability. We up-grade NMNs by: (a) bridging the gap between its interpreter and the complex questions; (b) introducing addition and subtraction modules that perform numerical reasoning over numbers. On a subset of DROP, experimental results show that our proposed methods enhance NMNs' numerical reasoning skills by 17.7% improvement of F1 score and significantly outperform previous state-of-the-art models.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge