Tao: A Learning Framework for Adaptive Nearest Neighbor Search using Static Features Only


Oct 02, 2021

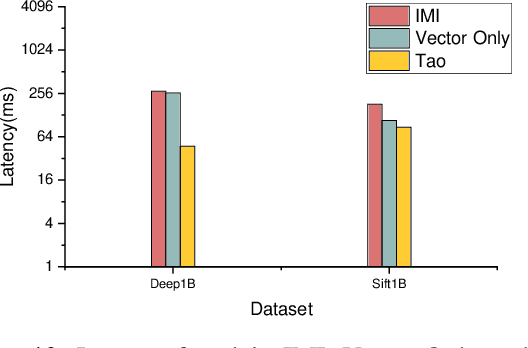

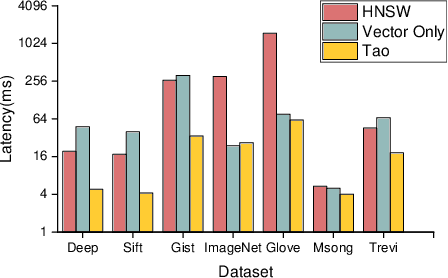

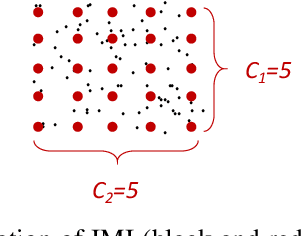

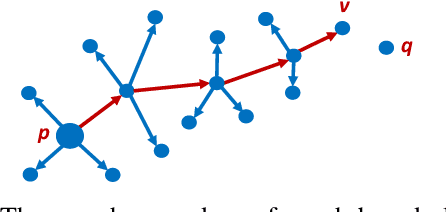

Approximate nearest neighbor (ANN) search is a fundamental problem in areas such as data management, information retrieval, and machine learning. Recently, Li et al. proposed a learned approach named AdaptNN to support adaptive ANN query processing. In the middle of query execution, AdaptNN collects a number of runtime features and predicts a termination condition for each individual query, which yields better end-to-end latency. Despite its efficiency, relying on runtime features complicates the learning process and leads to performance degradation. In contrast to AdaptNN, we argue that it is promising to predict the termination condition before query execution. In particular, we develop Tao, a general learning framework for Terminating ANN queries Adaptively using Only static features. Upon the arrival of a query, Tao first maps the query to a local intrinsic dimension (LID) number, and then predicts the termination condition from the LID instead of runtime features. By decoupling the prediction procedure from query execution, Tao eliminates the laborious feature selection process involved in AdaptNN. In addition, two design principles are formulated to guide the application of Tao and improve the explainability of the prediction model. We integrate two state-of-the-art indexing approaches, IMI and HNSW, into Tao, and evaluate its performance on several million-scale to billion-scale datasets. Experimental results show that, in addition to its simplicity and generality, Tao achieves up to a 2.69x speedup over its counterpart at the same high accuracy targets.
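
To make the two-step idea concrete (query to LID, then LID to termination condition), here is a minimal Python sketch. It is not the authors' implementation. The abstract does not say how Tao obtains the LID number or what form its prediction model takes, so two things are assumptions: `lid_mle` uses the widely known maximum-likelihood LID estimator computed from a sample of neighbor distances, and `predict_budget` is a hypothetical linear rule, with made-up parameters `base`, `per_dim`, and `cap`, standing in for a learned predictor.

```python
import math


def lid_mle(distances):
    """Maximum-likelihood LID estimate from distances to k neighbors.

    Uses LID = -k / sum_i ln(r_i / r_k), where r_k is the largest distance.
    Assumption: this estimator is chosen for illustration only.
    """
    r = sorted(d for d in distances if d > 0)  # zero distances break the log
    if len(r) < 2:
        raise ValueError("need at least two positive distances")
    r_max = r[-1]
    log_sum = sum(math.log(d / r_max) for d in r)
    if log_sum == 0.0:  # all distances equal: the estimate diverges
        return float("inf")
    return -len(r) / log_sum


def predict_budget(lid, base=32, per_dim=8, cap=1024):
    """Hypothetical stand-in for a learned termination predictor.

    Maps a LID value to a search budget (for example, a candidate-list
    size), giving harder (higher-LID) queries a larger budget, up to `cap`.
    """
    return int(min(cap, base + per_dim * lid))


if __name__ == "__main__":
    sample_distances = [1.0, 2.0, 4.0, 8.0]
    lid = lid_mle(sample_distances)
    print("estimated LID:", lid)
    print("search budget:", predict_budget(lid))
```

The point of the sketch is only the control flow: the budget is fixed from a per-query difficulty signal before the index search starts, rather than being revised from features collected during the search.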
