Structured Label Inference for Visual Understanding

Paper and Code

Feb 18, 2018

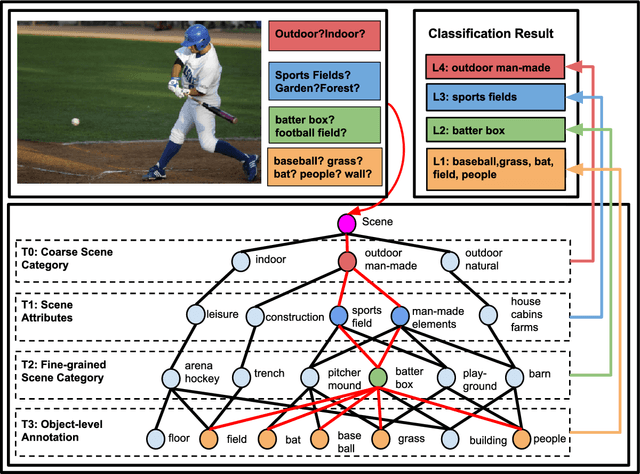

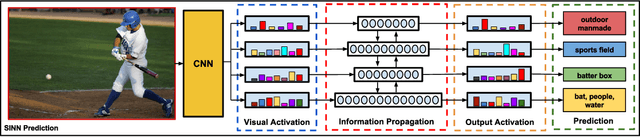

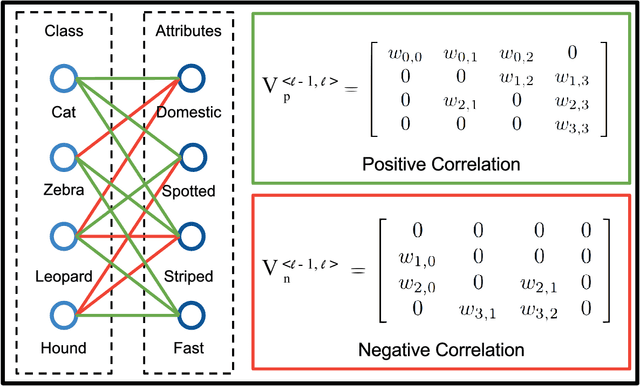

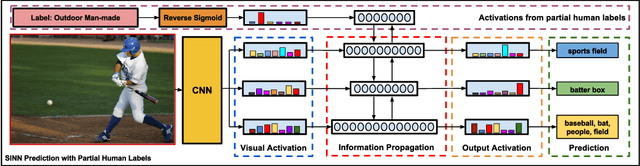

Visual data such as images and videos are a rich source of structured semantic labels and contain a wide range of interacting components. Visual content can be assigned fine-grained labels describing major components, coarse-grained labels depicting high-level abstractions, or a set of labels revealing attributes. Such categorization over different, interacting layers of labels suggests the potential for a graph-based encoding of label information. In this paper, we exploit this rich structure to perform graph-based inference in label space for a number of tasks: multi-label image and video classification, and action detection in untrimmed videos. We consider the use of the Bidirectional Inference Neural Network (BINN) and Structured Inference Neural Network (SINN) for performing graph-based inference in label space, and propose a Long Short-Term Memory (LSTM) based extension for exploiting activity progression in untrimmed videos. The methods were evaluated on (i) the Animals with Attributes (AwA), Scene Understanding (SUN) and NUS-WIDE datasets for multi-label image classification, (ii) the first two releases of the YouTube-8M large-scale dataset for multi-label video classification, and (iii) the THUMOS'14 and MultiTHUMOS video datasets for action detection. Our results demonstrate the effectiveness of structured label inference in these challenging tasks, achieving significant improvements over baselines.
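To make the idea of inference across interacting label layers concrete, the following is a minimal sketch of bidirectional message passing between a coarse and a fine label layer. It is not the BINN or SINN implementation from the paper: the function name `bidirectional_label_inference`, the example labels, and the hand-set weight matrices are all hypothetical, and in a real model the weights would be learned and the per-layer scores would come from a visual feature extractor.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(weights, vec):
    # Plain matrix-vector product on nested lists.
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def bidirectional_label_inference(scores, down_weights, up_weights):
    """Refine per-layer label scores with one top-down and one bottom-up pass.

    scores:       list of layers ordered coarse -> fine, each a list of raw scores
    down_weights: down_weights[i] maps layer i to layer i + 1 (hypothetical, hand-set)
    up_weights:   up_weights[i] maps layer i + 1 to layer i (hypothetical, hand-set)
    Returns one list of probabilities per layer.
    """
    n = len(scores)

    # Top-down pass: coarse labels inform finer ones.
    down = [list(scores[0])]
    for i in range(1, n):
        msg = matvec(down_weights[i - 1], down[i - 1])
        down.append([s + m for s, m in zip(scores[i], msg)])

    # Bottom-up pass: fine labels inform coarser ones.
    up = [None] * n
    up[n - 1] = list(scores[n - 1])
    for i in range(n - 2, -1, -1):
        msg = matvec(up_weights[i], up[i + 1])
        up[i] = [s + m for s, m in zip(scores[i], msg)]

    # Combine both directions, counting the raw score only once.
    return [
        [sigmoid(d + u - s) for d, u, s in zip(down[i], up[i], scores[i])]
        for i in range(n)
    ]


# Illustrative two-layer hierarchy (labels are made up for this example).
coarse_labels = ["animal", "vehicle"]
fine_labels = ["dog", "cat", "car"]

raw_scores = [[2.0, -2.0], [0.0, 0.0, 0.0]]        # confident coarse, uncertain fine
down_w = [[[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]]    # animal -> dog, cat; vehicle -> car
up_w = [[[0.5, 0.5, 0.0], [0.0, 0.0, 1.0]]]        # dog, cat -> animal; car -> vehicle

probs = bidirectional_label_inference(raw_scores, down_w, up_w)
```

In this toy setup the fine-layer scores are uninformative on their own, so the refined fine-label probabilities are driven by the coarse layer: "dog" and "cat" rise above 0.5 while "car" falls below it.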
