Stochastic Client Selection for Federated Learning with Volatile Clients


Nov 17, 2020

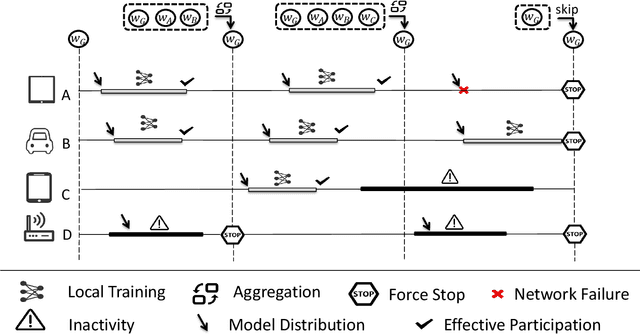

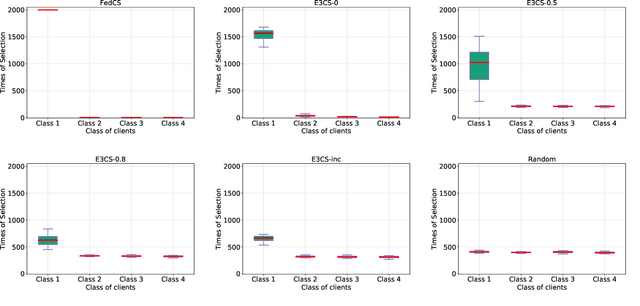

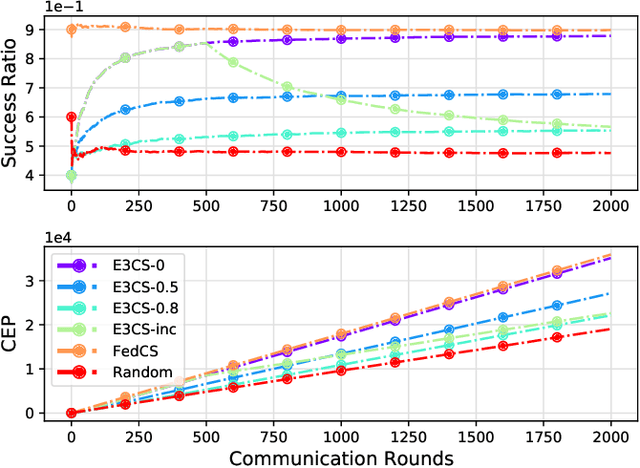

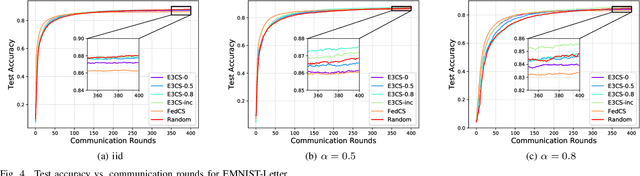

Federated Learning (FL), a novel secure learning paradigm, has received notable public attention. In each round of synchronous FL training, only a fraction of the available clients are chosen to participate, and this selection can directly or indirectly affect both the training efficiency and the final model performance. In this paper, we investigate the client selection problem in a volatile context, in which the local training of heterogeneous clients is likely to fail for various reasons and at different frequencies. Intuitively, excessive training failures reduce training efficiency and should therefore be regulated through proper client selection. Motivated by this, we model effective participation under a deadline-based aggregation mechanism as the objective function of our problem, and we include the degree of fairness, another critical factor that may influence training performance, as an expected constraint. To solve the proposed selection problem efficiently, we propose E3CS, a stochastic client selection scheme based on an adversarial bandit solution, and we corroborate its effectiveness through experiments on real data. According to the experimental results, under a proper setting, our proposed selection scheme reaches a fixed model accuracy at least 20 percent and up to 50 percent faster than the vanilla selection scheme in FL, while maintaining the same level of final model accuracy.
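To make the idea of stochastic selection with an adversarial bandit and a fairness constraint more concrete, the following is a minimal sketch and not the authors' E3CS implementation. It assumes a generic Exp3-style exponential-weight update, a per-client probability floor `sigma` standing in for the fairness constraint, a binary reward (1 if a client's local training finishes before the deadline, 0 otherwise), and systematic sampling to pick `k` clients per round. All function names, parameters, and the simulated success rates are hypothetical.

```python
import math
import random


def selection_probs(weights, k, sigma):
    """Inclusion probabilities summing to k, each in [sigma, 1].

    Assumes positive weights and 0 < sigma <= k / len(weights).
    """
    n = len(weights)
    capped = set()  # clients whose probability is pinned at 1
    while True:
        free = [i for i in range(n) if i not in capped]
        # Mass left after the capped clients and the fairness floor.
        budget = k - len(capped) - sigma * len(free)
        total = sum(weights[i] for i in free)
        probs = [1.0] * n
        for i in free:
            probs[i] = sigma + budget * weights[i] / total
        over = [i for i in free if probs[i] > 1.0]
        if not over:
            return probs
        capped.update(over)


def sample_clients(probs, rng):
    """Systematic sampling: client i is included with probability probs[i]."""
    u = rng.random()
    chosen, cum = [], 0.0
    for i, p in enumerate(probs):
        lo, cum = cum, cum + p
        # Select i if the interval (lo, cum] crosses a point u + integer.
        if math.floor(cum - u) > math.floor(lo - u):
            chosen.append(i)
    return chosen


def update_weights(weights, probs, chosen, succeeded, eta):
    """Exp3-style update with an importance-weighted reward estimate."""
    for i in chosen:
        reward_hat = (1.0 if succeeded[i] else 0.0) / probs[i]
        weights[i] *= math.exp(eta * reward_hat)
    top = max(weights)  # rescale to keep the weights numerically bounded
    for i in range(len(weights)):
        weights[i] /= top


def simulate(success_rates, k=3, sigma=0.1, eta=0.05, rounds=300, seed=0):
    """Run the selection loop against hypothetical per-client success rates."""
    rng = random.Random(seed)
    weights = [1.0] * len(success_rates)
    for _ in range(rounds):
        probs = selection_probs(weights, k, sigma)
        chosen = sample_clients(probs, rng)
        # Volatility: each chosen client fails its round independently.
        succeeded = {i: rng.random() < success_rates[i] for i in chosen}
        update_weights(weights, probs, chosen, succeeded, eta)
    return selection_probs(weights, k, sigma)


if __name__ == "__main__":
    rates = [0.9] * 3 + [0.2] * 7  # three reliable clients, seven volatile
    print([round(p, 2) for p in simulate(rates)])
```

In this sketch, raising `sigma` toward `k / n` pushes the scheme toward uniform random selection (more fairness, less exploitation of reliable clients), while a small `sigma` lets the selection concentrate on clients that rarely fail. How the paper itself sets and schedules this trade-off is not specified in the abstract.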
