STJLA: A Multi-Context Aware Spatio-Temporal Joint Linear Attention Network for Traffic Forecasting


Dec 04, 2021

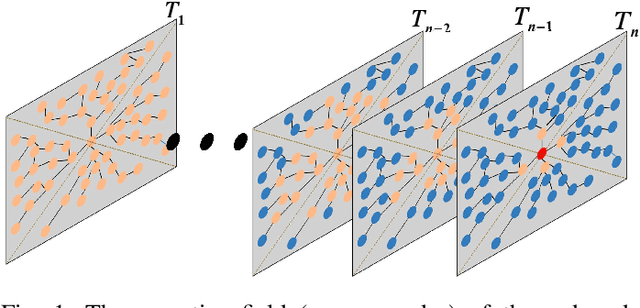

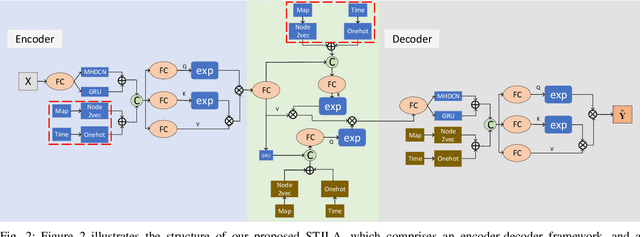

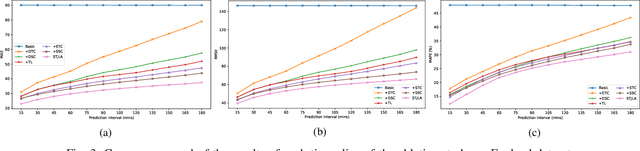

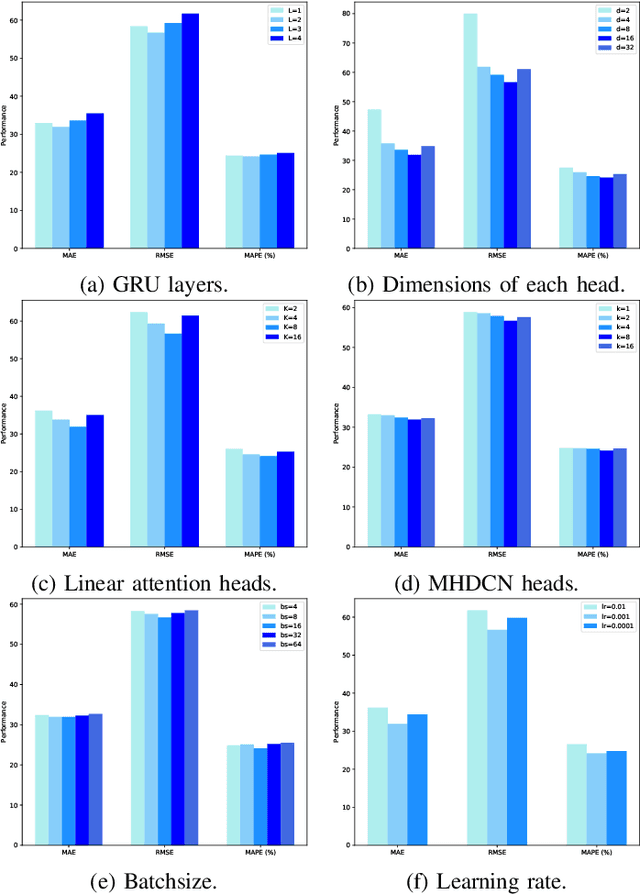

Traffic prediction has attracted growing attention from researchers as the volume of traffic data has increased. Mining the complex spatio-temporal correlations in traffic data to predict traffic conditions more accurately has therefore become a difficult problem. Previous works combined graph convolution networks (GCNs) and self-attention mechanisms with deep time series models (e.g. recurrent neural networks) to capture spatial and temporal correlations separately, ignoring the relationships across time and space. In addition, GCNs suffer from over-smoothing and self-attention suffers from quadratic complexity. As a result, GCNs lack global representation capability, and self-attention captures global spatial dependence inefficiently. In this paper, we propose a novel deep learning model for traffic forecasting, named Multi-Context Aware Spatio-Temporal Joint Linear Attention (STJLA), which applies linear attention to the spatio-temporal joint graph to efficiently capture global dependence between all spatio-temporal nodes. More specifically, STJLA utilizes static structural context and dynamic semantic context to improve model performance. The static structural context, based on node2vec and one-hot encoding, enriches the spatio-temporal position information. The dynamic spatial context, based on a multi-head diffusion convolution network, enhances local spatial perception, and the dynamic temporal context, based on a GRU, stabilizes the sequence position information of the linear attention. Experiments on two real-world traffic datasets, England and PEMSD7, demonstrate that STJLA achieves improvements of up to 9.83% and 3.08% in MAE over state-of-the-art baselines.
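To illustrate why linear attention scales better than standard self-attention on a spatio-temporal joint graph, the following is a minimal NumPy sketch, not the authors' implementation. It assumes the kernel feature map `elu(x) + 1`, which is a common choice in the linear attention literature; the abstract does not say which feature map STJLA uses. The function names, the projection matrices `w_q`, `w_k`, `w_v`, and the `(T, N, d)` input layout are likewise hypothetical. The idea is to flatten the `T` time steps and `N` sensors into `T * N` joint nodes, then compute `K^T V` once so that the cost grows linearly in the number of joint nodes rather than quadratically.

```python
import numpy as np


def feature_map(x):
    # Assumed kernel feature map: elu(x) + 1, which is strictly positive.
    # For x > 0 this is x + 1; for x <= 0 it is exp(x).
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def linear_attention(q, k, v):
    # q, k: (L, d); v: (L, d_v), where L is the number of joint nodes.
    q_f, k_f = feature_map(q), feature_map(k)
    kv = k_f.T @ v              # (d, d_v), computed once for all queries
    z = k_f.sum(axis=0)         # (d,), normaliser shared by all queries
    numerator = q_f @ kv        # (L, d_v)
    denominator = q_f @ z       # (L,)
    return numerator / denominator[:, None]


def quadratic_reference(q, k, v):
    # Same kernelised attention, but materialising the full (L, L) weights.
    q_f, k_f = feature_map(q), feature_map(k)
    scores = q_f @ k_f.T
    weights = scores / scores.sum(axis=1, keepdims=True)
    return weights @ v


def joint_linear_attention(x, w_q, w_k, w_v):
    # x: (T, N, d). Flatten time and space into T * N joint nodes so every
    # node can attend to every other node across both dimensions.
    t, n, d = x.shape
    flat = x.reshape(t * n, d)
    out = linear_attention(flat @ w_q, flat @ w_k, flat @ w_v)
    return out.reshape(t, n, -1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(12, 20, 16))   # 12 time steps, 20 sensors, 16 features
    w_q, w_k, w_v = (rng.normal(size=(16, 16)) for _ in range(3))
    print(joint_linear_attention(x, w_q, w_k, w_v).shape)
```

Both functions produce the same output up to floating-point error. The difference is that `linear_attention` never builds the `(L, L)` weight matrix, which is what makes attending over all `T * N` joint nodes tractable. The context modules described in the abstract (node2vec embeddings, diffusion convolution, GRU) are not modelled in this sketch.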
