Stitched ViTs are Flexible Vision Backbones

Paper and Code

Jun 30, 2023

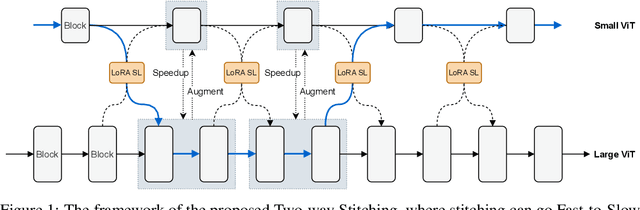

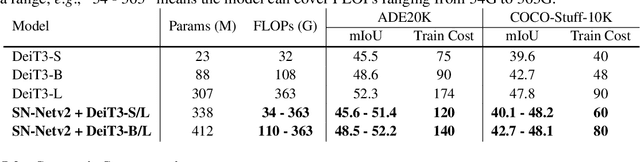

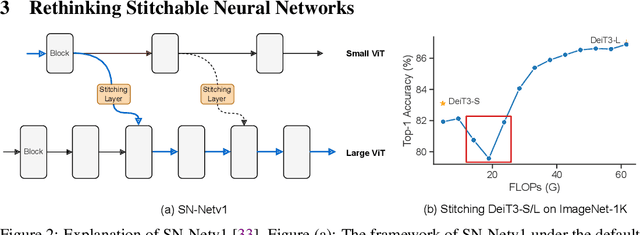

Large pretrained plain vision Transformers (ViTs) have been the workhorse for many downstream tasks. However, existing works that use off-the-shelf ViTs are inefficient in both training and deployment, because each ViT size must be trained separately and is locked into a fixed performance-efficiency trade-off. In this paper, we draw inspiration from stitchable neural networks, a recent framework that cheaply produces a single model covering a rich set of subnetworks by stitching pretrained model families, supporting diverse performance-efficiency trade-offs at runtime. Building upon this foundation, we introduce SN-Netv2, a systematically improved model stitching framework to facilitate downstream task adaptation. Specifically, we first propose a two-way stitching scheme to enlarge the stitching space. We then design a resource-constrained sampling strategy that accounts for the underlying FLOPs distributions in the space for improved sampling. Finally, we observe that learning stitching layers amounts to a low-rank update, which plays an essential role in stabilizing training on downstream tasks and ensuring a good Pareto frontier. With extensive experiments on ImageNet-1K, ADE20K, COCO-Stuff-10K, NYUv2 and COCO-2017, SN-Netv2 demonstrates a strong ability to serve as a flexible vision backbone, with clear advantages in both training efficiency and adaptation. Code will be released at https://github.com/ziplab/SN-Netv2.
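
The abstract does not spell out how resource-constrained sampling works, so the following is only a minimal sketch of one plausible reading: group candidate stitches into equal-width FLOPs intervals, pick an interval uniformly, then pick a stitch inside it. This keeps densely populated FLOPs regions from dominating training. The function names, the stitch ids, the FLOPs values, and the binning rule are all hypothetical and are not taken from the SN-Netv2 code.

```python
import random
from collections import defaultdict


def build_flops_bins(stitch_flops, num_bins):
    """Group stitch ids into equal-width FLOPs intervals (assumed binning rule)."""
    lo = min(stitch_flops.values())
    hi = max(stitch_flops.values())
    # Fall back to a width of 1.0 when every stitch has identical FLOPs.
    width = (hi - lo) / num_bins or 1.0
    bins = defaultdict(list)
    for stitch_id, flops in stitch_flops.items():
        # Clamp so the most expensive stitch lands in the last interval.
        idx = min(int((flops - lo) / width), num_bins - 1)
        bins[idx].append(stitch_id)
    return dict(bins)


def sample_stitch(bins, rng):
    """Pick a non-empty FLOPs interval uniformly, then a stitch within it."""
    bin_idx = rng.choice(sorted(bins))
    return rng.choice(bins[bin_idx])


# Hypothetical stitching space: four cheap stitches, two expensive ones (GFLOPs).
example_flops = {"s0": 1.0, "s1": 1.1, "s2": 1.2, "s3": 1.3, "s4": 4.0, "s5": 8.0}
bins = build_flops_bins(example_flops, num_bins=4)

rng = random.Random(0)
draws = [sample_stitch(bins, rng) for _ in range(3000)]
```

Under plain uniform sampling over stitches, the single most expensive stitch `s5` would be drawn about one time in six; with the interval-first scheme above it is drawn about one time in three, because it is the only member of its FLOPs interval.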
