Statistical Selection of CNN-Based Audiovisual Features for Instantaneous Estimation of Human Emotional States

Aug 23, 2017

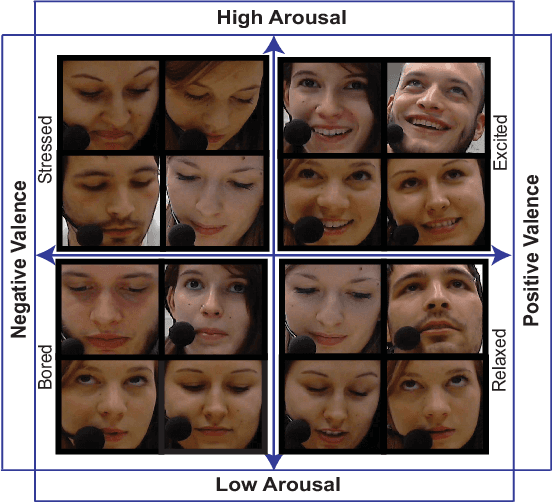

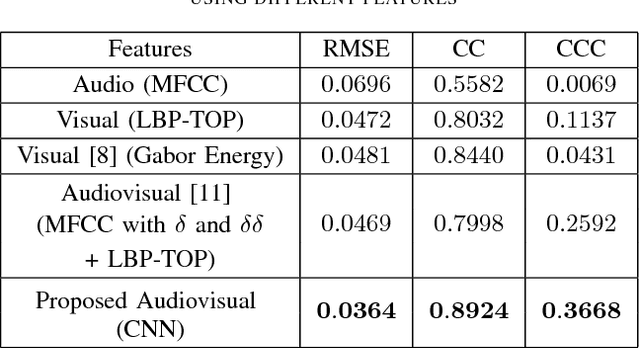

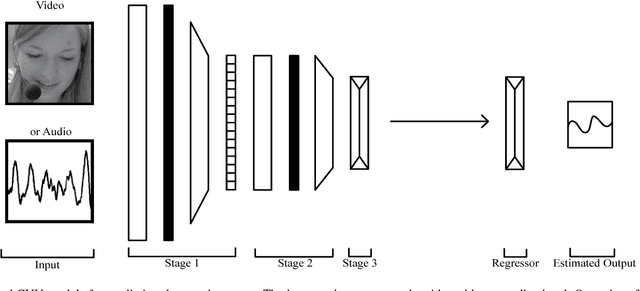

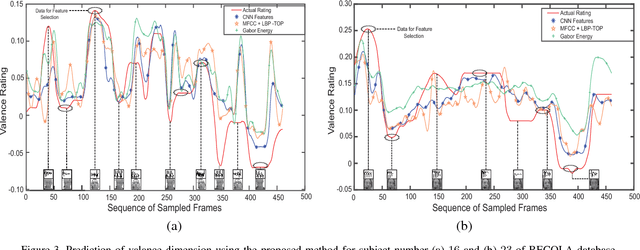

Automatic prediction of continuous-level emotional state requires selecting suitable affective features on which to build a regression system based on supervised machine learning. This paper investigates the performance of features statistically learned by convolutional neural networks for instantaneously predicting the continuous dimensions of emotional state. Features with minimum redundancy and maximum relevance are chosen using a mutual information-based selection process. Frame-by-frame prediction of emotional state using the moderate-length feature vectors proposed in this paper is evaluated on the spontaneous, naturalistic human-human conversations of the RECOLA database. Experimental results show that the proposed model can predict emotional state instantaneously with higher accuracy than the traditional audio or video features used in affective computing.
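The minimum-redundancy, maximum-relevance idea mentioned above can be illustrated with a small greedy selector. This is only a sketch under stated assumptions, not the authors' implementation: the abstract does not give the exact scoring criterion or the mutual information estimator, so the code assumes the common "relevance minus mean redundancy" score and a simple equal-width histogram estimate. The names `discretize`, `mutual_information`, and `mrmr_select`, and the `bins` parameter, are hypothetical.

```python
import math
from collections import Counter


def discretize(values, bins=8):
    """Map continuous values to equal-width bin indices (assumed estimator)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / bins
    return [min(int((v - lo) / width), bins - 1) for v in values]


def mutual_information(x, y):
    """Mutual information in nats between two discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    # sum over observed pairs of p(a,b) * log(p(a,b) / (p(a) * p(b)))
    return sum(
        (c / n) * math.log(c * n / (px[a] * py[b]))
        for (a, b), c in pxy.items()
    )


def mrmr_select(features, target, k, bins=8):
    """Greedily pick k feature indices; `features` is a list of columns."""
    cols = [discretize(f, bins) for f in features]
    t = discretize(target, bins)
    relevance = [mutual_information(c, t) for c in cols]

    # Start with the single most relevant feature.
    selected = [max(range(len(cols)), key=lambda j: relevance[j])]
    while len(selected) < min(k, len(cols)):
        best, best_score = None, -math.inf
        for j in range(len(cols)):
            if j in selected:
                continue
            # Mean mutual information with the features already chosen.
            redundancy = sum(
                mutual_information(cols[j], cols[s]) for s in selected
            ) / len(selected)
            score = relevance[j] - redundancy  # assumed difference criterion
            if score > best_score:
                best, best_score = j, score
        selected.append(best)
    return selected


# Toy data: feature 1 duplicates feature 0; feature 2 adds new information.
a = [0, 0, 1, 1] * 5
b = [0, 1, 0, 1] * 5
target = [ai + 2 * bi for ai, bi in zip(a, b)]
selected = mrmr_select([a, list(a), b], target, k=2)
print(sorted(selected))
```

In the toy data the duplicated column is as relevant to the target as the original, but it is fully redundant with it, so the selector prefers the independent column as its second pick. In a real pipeline each column would be one CNN-derived feature dimension and the target a per-frame emotion annotation.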
