Speech2Properties2Gestures: Gesture-Property Prediction as a Tool for Generating Representational Gestures from Speech

Paper and Code

Jun 28, 2021

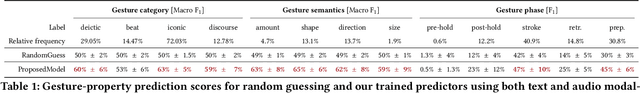

We propose a new framework for gesture generation, aiming to allow data-driven approaches to produce more semantically rich gestures. Our approach first predicts whether to gesture, followed by a prediction of the gesture properties. Those properties are then used as conditioning for a modern probabilistic gesture-generation model capable of high-quality output. This empowers the approach to generate gestures that are both diverse and representational.

* Accepted for publication at the ACM International Conference on

Intelligent Virtual Agents (IVA 2021)

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge