Spatio-Temporal-based Context Fusion for Video Anomaly Detection

Oct 18, 2022

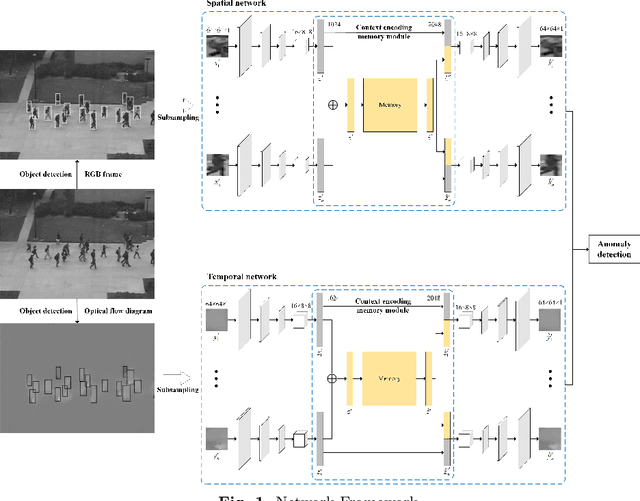

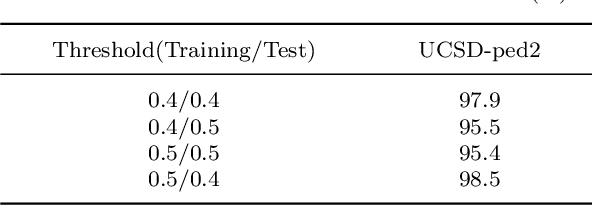

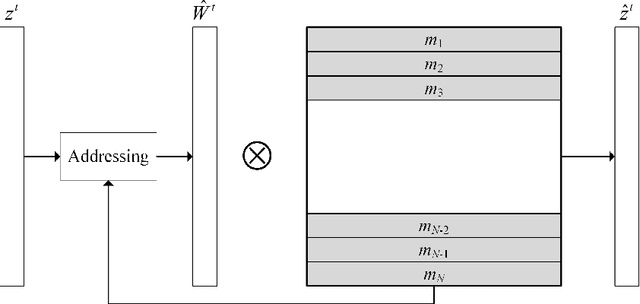

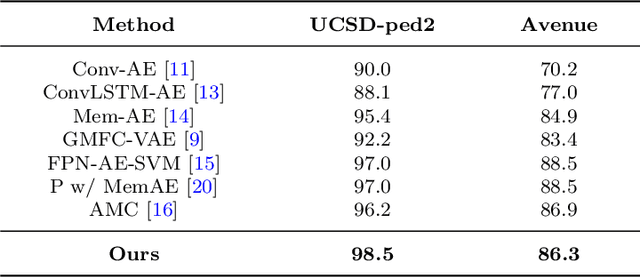

Video anomaly detection aims to discover abnormal events in videos, where the main subjects are targets such as people and vehicles. Each target in the video carries rich spatio-temporal context information. Most existing methods focus only on the temporal context and ignore the role of the spatial context in anomaly detection. The spatial context represents the relationship between the detection target and its surrounding targets, which is highly informative for anomaly detection. To this end, a video anomaly detection algorithm based on target spatio-temporal context fusion is proposed. First, the targets in each video frame are extracted by a target detection network to reduce background interference. Then the optical flow map of two adjacent frames is calculated to obtain motion features. The multiple targets in a video frame are used together to construct the spatial context, which re-encodes the target appearance and motion features. Finally, these features are reconstructed by a spatio-temporal dual-stream network, and the reconstruction error is used as the anomaly score. The algorithm achieves frame-level AUCs of 98.5% and 86.3% on the UCSDped2 and Avenue datasets, respectively. On the UCSDped2 dataset, the spatio-temporal dual-stream network improves the frame-level AUC by 5.1% and 0.3% compared to the temporal and spatial stream networks, respectively. Adding spatial context encoding raises the frame-level AUC by 1%, which verifies the method's effectiveness.
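The final step, turning reconstruction error into an anomaly score, can be illustrated with a minimal sketch. It is not the authors' implementation. The function names, the mean-squared-error measure, the weighted fusion of the two streams (`alpha`), taking the maximum over targets as the frame score, and the per-video min-max normalization are all assumptions made for illustration; the abstract only states that reconstruction error represents the anomaly score.

```python
import numpy as np


def target_error(original, reconstructed):
    """Mean squared reconstruction error for one target (assumed error measure)."""
    original = np.asarray(original, dtype=np.float64)
    reconstructed = np.asarray(reconstructed, dtype=np.float64)
    return float(np.mean((original - reconstructed) ** 2))


def frame_score(appearance_errors, motion_errors, alpha=0.5):
    """Fuse per-target errors from the two streams into one frame-level score.

    alpha weights the appearance stream against the motion stream; both the
    weighting and the max over targets are hypothetical choices.
    """
    if len(appearance_errors) == 0:
        return 0.0  # no detected targets: treat the frame as normal
    a = np.asarray(appearance_errors, dtype=np.float64)
    m = np.asarray(motion_errors, dtype=np.float64)
    fused = alpha * a + (1.0 - alpha) * m
    return float(fused.max())


def normalize_scores(scores):
    """Min-max normalize the frame scores of one video to [0, 1]."""
    s = np.asarray(scores, dtype=np.float64)
    span = s.max() - s.min()
    if span == 0:
        return np.zeros_like(s)
    return (s - s.min()) / span
```

Under these assumptions, a frame is flagged when any single target reconstructs poorly, which matches the intuition that one abnormal person or vehicle is enough to make the frame anomalous. The normalized scores could then be compared against frame-level labels to compute a frame-level AUC.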
