Sparse Pedestrian Character Learning for Trajectory Prediction

Nov 27, 2023

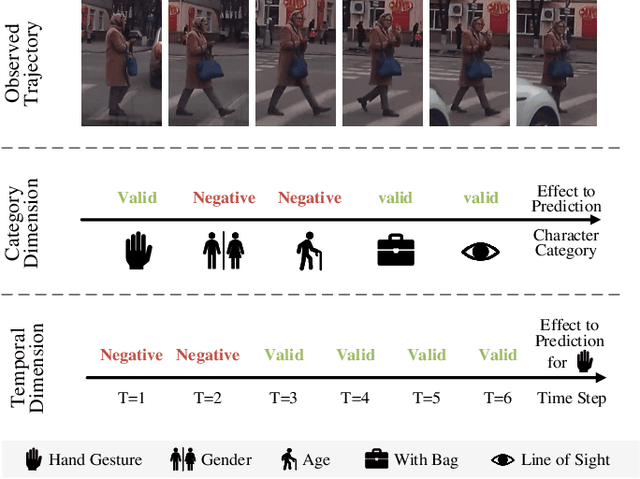

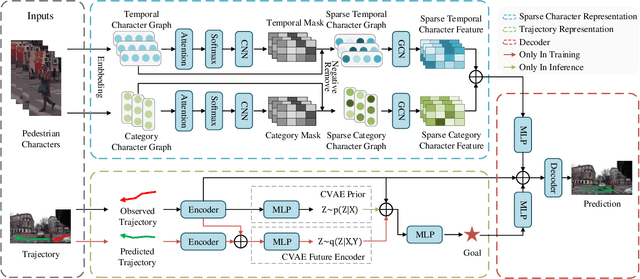

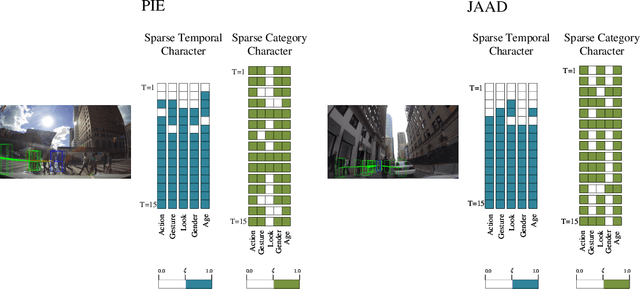

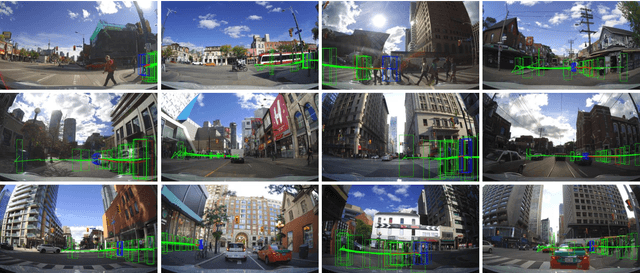

Pedestrian trajectory prediction from a first-person view has recently attracted much attention because of its importance in autonomous driving. Recent work uses pedestrian character information, i.e., action and appearance, to improve the learned trajectory embedding and achieves state-of-the-art performance. However, it does not account for invalid or negative pedestrian character information, which harms the trajectory representation and thus degrades performance. To address this issue, we present a two-stream sparse-character-based network (TSNet) for pedestrian trajectory prediction. Specifically, TSNet learns negative-removed characters in the sparse character representation stream and uses them to improve the trajectory embedding obtained in the trajectory representation stream. To model the negative-removed characters, we propose a novel sparse character graph, consisting of a sparse category character graph and a sparse temporal character graph, which learn the different effects of various characters in the category and temporal dimensions, respectively. Extensive experiments on two first-person view datasets, PIE and JAAD, show that our method outperforms existing state-of-the-art methods. In addition, ablation studies demonstrate the different effects of various characters and show that TSNet outperforms approaches that do not eliminate negative characters.
