SoK: Towards Security and Safety of Edge AI


Oct 07, 2024

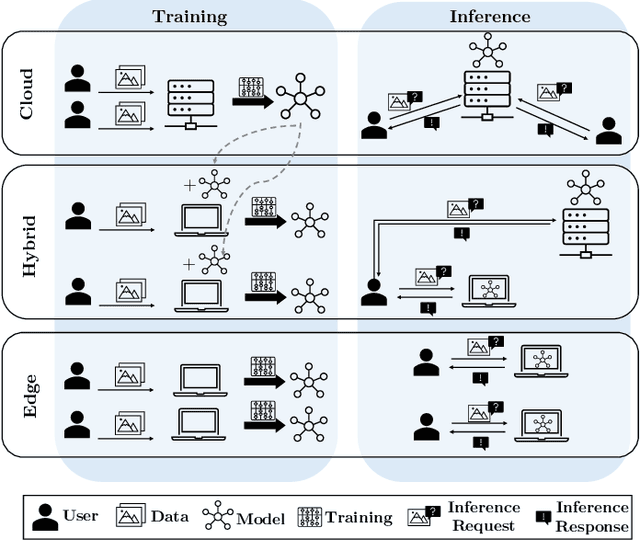

Advanced AI applications have become increasingly available to a broad audience, e.g., as centrally managed large language models (LLMs). Such centralization is both a risk and a performance bottleneck, and Edge AI promises to address both problems. However, its decentralized approach raises additional security and safety challenges. In this paper, we argue that both aspects are critical for Edge AI, and their integration even more so. Concretely, we survey security and safety threats, summarize existing countermeasures, and collect open challenges as a call for more research in this area.
