SMART: Skeletal Motion Action Recognition aTtack


Nov 21, 2019

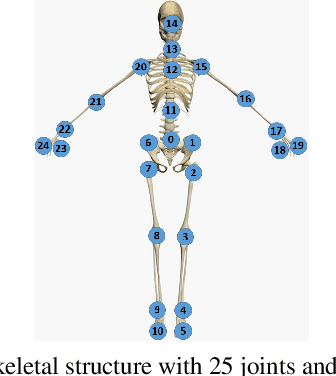

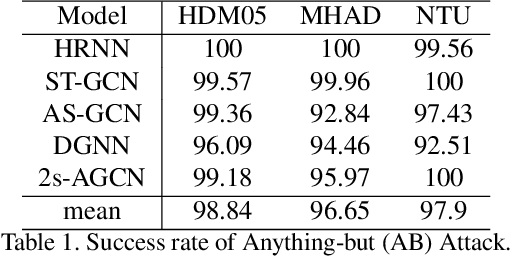

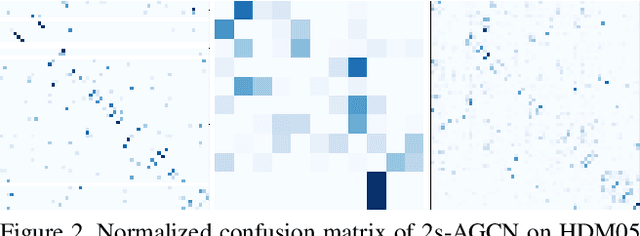

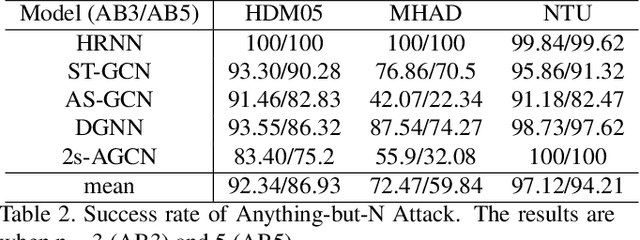

Adversarial attacks have attracted great interest in computer vision by showing that classification-based solutions are vulnerable to imperceptible perturbations in many tasks. In this paper, we propose a method, SMART, to attack action recognizers that rely on 3D skeletal motions. Our method involves a novel perceptual loss that ensures the imperceptibility of the attack. Empirical studies demonstrate that SMART is effective in both white-box and black-box scenarios. Its generalizability is evidenced on a variety of action recognizers and datasets, its versatility is shown across different attacking strategies, and its deceitfulness is confirmed in extensive perceptual studies. Finally, SMART shows that adversarial attack on 3D skeletal motion, a type of time-series data, differs significantly from traditional adversarial attack problems.
