SlovakBERT: Slovak Masked Language Model

Sep 30, 2021

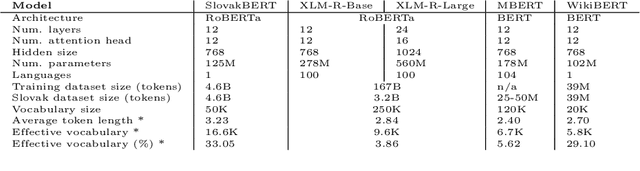

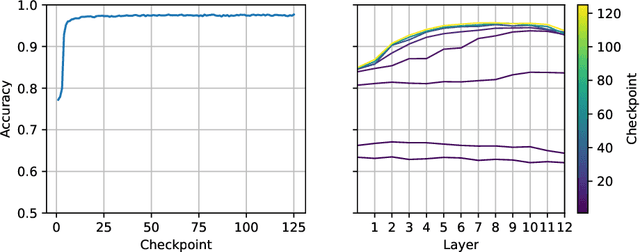

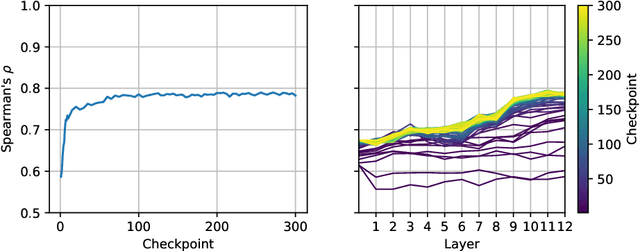

We introduce SlovakBERT, a new Slovak masked language model. It is the first Slovak-only transformer-based model trained on a sizeable corpus. We evaluate the model on several NLP tasks and achieve state-of-the-art results. We publish the masked language model, as well as the subsequently fine-tuned models for part-of-speech tagging, sentiment analysis, and semantic textual similarity.

* 22 pages, 2 figures
