Sketch-Specific Data Augmentation for Freehand Sketch Recognition

Paper and Code

Oct 14, 2019

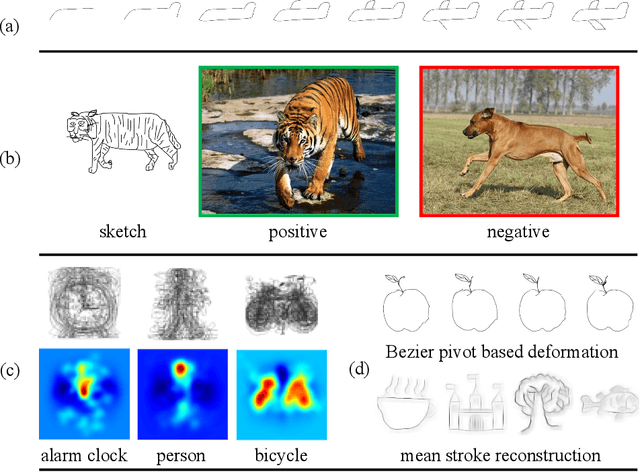

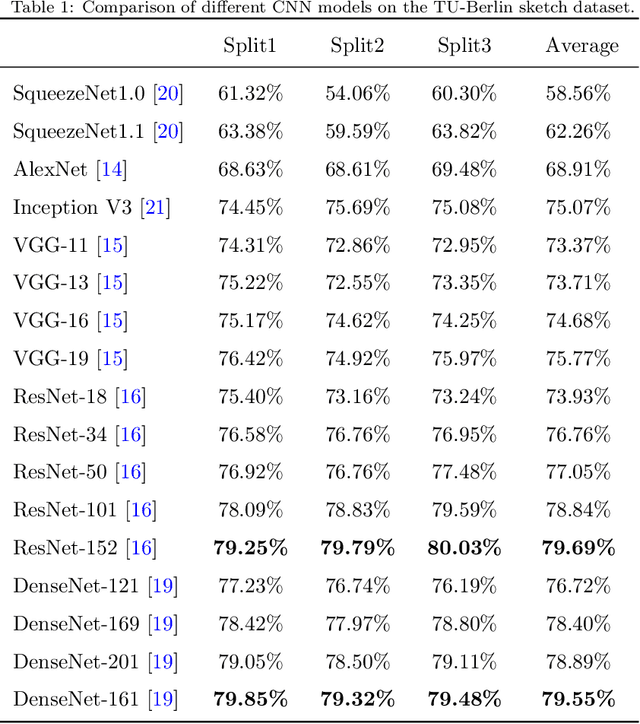

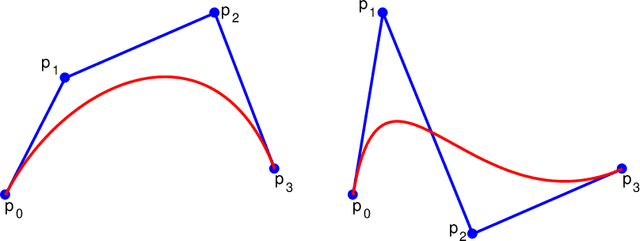

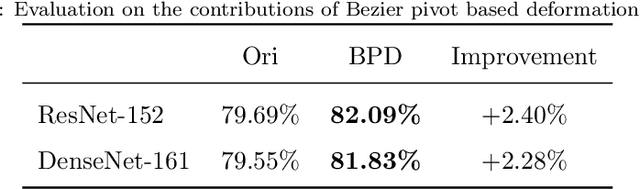

Sketch recognition remains a significant challenge because training data are limited and freehand sketches of the same object show substantial intra-class variance. Conventional methods for this task often rely on the temporal order of sketch strokes, on additional cues acquired from other modalities, or on supervised augmentation of sketch datasets with real images; these requirements limit their applicability and feasibility in real scenarios. In this paper, we propose a novel sketch-specific data augmentation (SSDA) method that automatically improves both the quantity and the quality of sketches. For quantity, we introduce a Bezier pivot based deformation (BPD) strategy to enrich the training data. For quality, we present a mean stroke reconstruction (MSR) approach that generates a set of novel sketch types with smaller intra-class variance. Neither solution requires multi-source data or temporal cues of sketches. Furthermore, we show that some recent deep convolutional neural network models trained on generic classes of real images can be better choices than most of the elaborate architectures designed explicitly for sketch recognition. Because SSDA can be integrated with any convolutional neural network, it has a distinct advantage over existing methods. Our extensive experimental evaluations demonstrate that the proposed method achieves state-of-the-art results (84.27%) on the TU-Berlin dataset, outperforming human performance by 11.17%. We also present a new benchmark named Sketchy-R to facilitate future research in sketch recognition. Finally, further experiments show the practical value of our approach for the task of sketch-based image retrieval.
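The abstract does not spell out how BPD works, so the following is only a rough illustration of the general idea of deforming a stroke through its Bezier control points. It assumes a stroke is already fitted as a single cubic Bezier curve and that "deformation" means randomly shifting the two interior control points while keeping the endpoints fixed. The function names (`cubic_bezier`, `deform_stroke`) and the `max_shift` parameter are hypothetical and are not taken from the paper.

```python
import random


def cubic_bezier(p0, p1, p2, p3, n=20):
    """Sample n points on a cubic Bezier curve given four control points."""
    points = []
    for i in range(n):
        t = i / (n - 1)
        s = 1.0 - t
        # Bernstein basis weights for a cubic curve
        b0, b1, b2, b3 = s ** 3, 3 * s * s * t, 3 * s * t * t, t ** 3
        x = b0 * p0[0] + b1 * p1[0] + b2 * p2[0] + b3 * p3[0]
        y = b0 * p0[1] + b1 * p1[1] + b2 * p2[1] + b3 * p3[1]
        points.append((x, y))
    return points


def deform_stroke(control_points, max_shift=5.0, rng=None):
    """Return new control points with the interior pivots jittered.

    Assumption (not from the paper): endpoints stay fixed so the stroke
    still connects to its neighbours; only the two interior control
    points move, each by at most max_shift along each axis.
    """
    rng = rng or random.Random()
    p0, p1, p2, p3 = control_points

    def jitter(p):
        return (p[0] + rng.uniform(-max_shift, max_shift),
                p[1] + rng.uniform(-max_shift, max_shift))

    return (p0, jitter(p1), jitter(p2), p3)


# One arch-shaped stroke and three deformed variants of it
stroke = ((0.0, 0.0), (10.0, 20.0), (30.0, 20.0), (40.0, 0.0))
rng = random.Random(0)
variants = [cubic_bezier(*deform_stroke(stroke, rng=rng)) for _ in range(3)]
```

Each variant is a polyline that starts and ends where the original stroke does but bends differently in between, which is the kind of label-preserving variation an augmentation scheme of this sort would add to the training set.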
