Simulation Results on Selector Adaptation in Behavior Trees


Jun 30, 2016

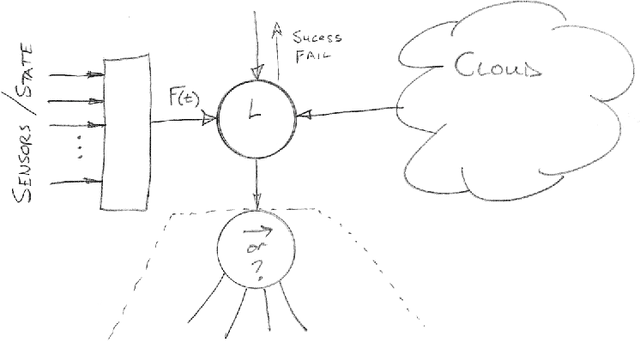

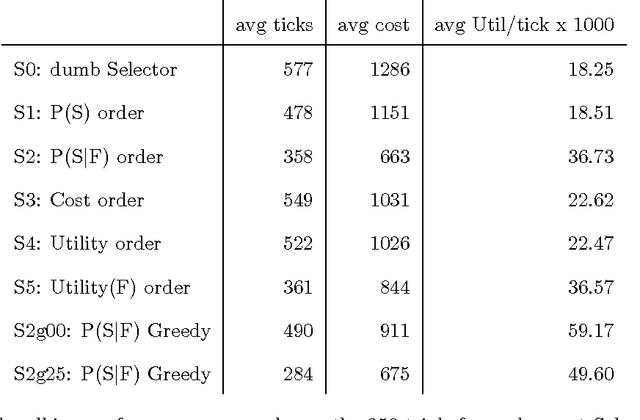

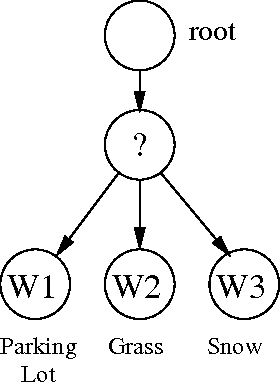

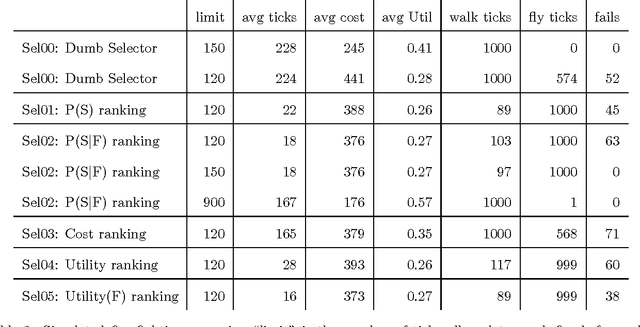

Behavior trees (BTs) emerged from video game development as a graphical language for modeling intelligent agent behavior. However, as initially implemented, behavior trees are static plans. This paper adds to recent literature exploring the ability of BTs to adapt to their success or failure in achieving tasks. The "Selector" node of a BT tries alternative strategies (its children) and returns failure only if all of its children return failure. This paper studies several means by which Selector nodes can learn from experience: in particular, they learn conditional probabilities of success based on sensor information and modify the execution order based on the learned information. Furthermore, a "Greedy Selector" is studied, which tries only the child having the highest success probability. Simulation results indicate significantly increased task performance, especially when the frequentist probability estimate is conditioned on sensor information. The Greedy Selector was ineffective unless it was preceded by a period of training in which all children were exercised.
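The adaptation described above can be illustrated with a minimal Python sketch. It is not the paper's implementation: the class name `AdaptiveSelector`, the representation of children as callables, the use of a hashable `state` value to stand in for discretized sensor information, and the neutral 0.5 estimate for untried children are all assumptions made for illustration. The sketch keeps per-state success and attempt counts, orders children by the resulting frequentist estimate, and optionally restricts itself to the single best child (the greedy variant).

```python
from collections import defaultdict


class AdaptiveSelector:
    """Hypothetical Selector that orders children by estimated P(success | state)."""

    def __init__(self, children, greedy=False):
        self.children = list(children)      # each child: callable(state) -> bool
        self.greedy = greedy
        self.successes = defaultdict(int)   # (state, child index) -> success count
        self.attempts = defaultdict(int)    # (state, child index) -> attempt count

    def p_success(self, state, i):
        """Frequentist estimate: successes / attempts for child i in this state."""
        n = self.attempts[(state, i)]
        # Assumption: an untried child gets a neutral estimate of 0.5.
        return self.successes[(state, i)] / n if n else 0.5

    def tick(self, state):
        # Highest estimated success probability first; ties keep the original order.
        order = sorted(range(len(self.children)),
                       key=lambda i: self.p_success(state, i),
                       reverse=True)
        if self.greedy:
            order = order[:1]               # greedy variant: try only the best child
        for i in order:
            ok = bool(self.children[i](state))
            self.attempts[(state, i)] += 1
            self.successes[(state, i)] += int(ok)
            if ok:
                return True                 # Selector succeeds on first child success
        return False                        # fails only if every tried child failed


# Toy children whose success depends on the (hypothetical) sensor state.
def always_fail(state):
    return False

def good_in_a(state):
    return state == "a"

def good_in_b(state):
    return state == "b"

selector = AdaptiveSelector([always_fail, good_in_a, good_in_b])
results = [selector.tick(s) for s in ("a", "b", "c")]
```

In this sketch, a greedy selector that has no history simply tries the first child, which hints at why a training period that exercises all children matters: without it, the estimates that drive the greedy choice carry no information.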
