Shapeshifter Networks: Cross-layer Parameter Sharing for Scalable and Effective Deep Learning

Jun 18, 2020

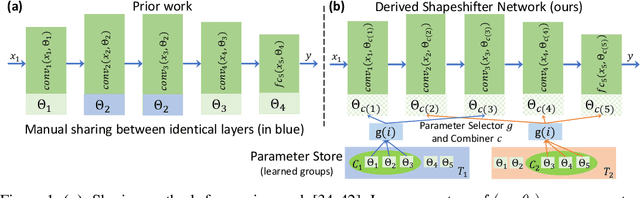

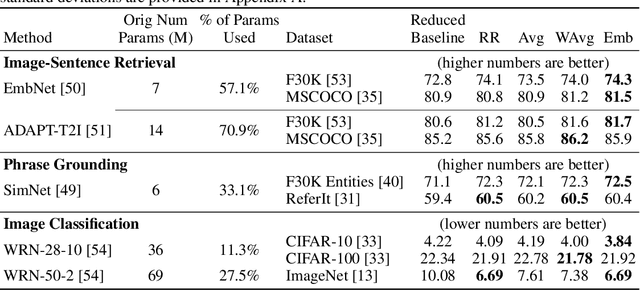

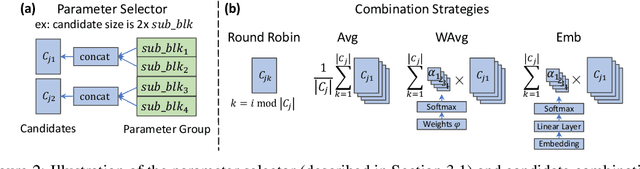

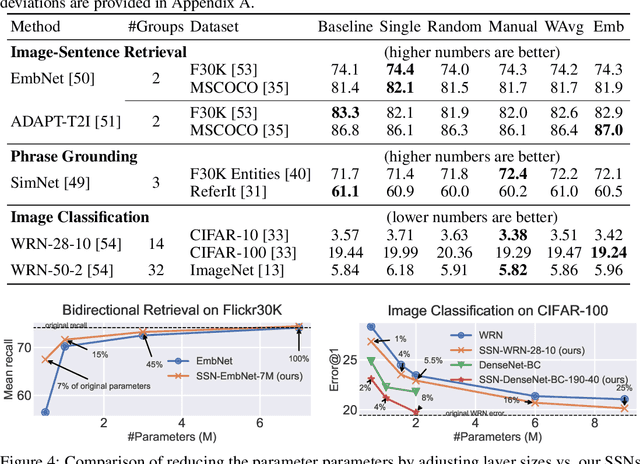

We present Shapeshifter Networks (SSNs), a flexible neural network framework that improves performance and reduces memory requirements relative to standard neural networks across a diverse set of scenarios. Our approach is based on the observation that many neural networks are severely overparameterized, which wastes significant computational resources and makes them susceptible to overfitting. SSNs address this by learning where and how to share parameters between layers in a neural network while avoiding degenerate solutions that result in underfitting. Specifically, we automatically construct parameter groups that identify where parameter sharing is most beneficial. Then, we map each group's weights to construct layers with learned combinations of candidates from a shared parameter pool. SSNs can share parameters across layers even when they have different sizes, perform different operations, and/or operate on features from different modalities. We evaluate our approach on a diverse set of tasks, including image classification, bidirectional image-sentence retrieval, and phrase grounding, creating high-performing models even when using as little as 1% of the parameters. We also apply SSNs to knowledge distillation, where we obtain state-of-the-art results when combined with traditional distillation methods.
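As a rough illustration of the idea of building layers from "learned combinations of candidates from a shared parameter pool", the sketch below constructs weight matrices of different shapes from one flat pool. It is not the authors' implementation: the function name `layer_weights`, the wrap-around windowing used to pick candidates, the `offset` argument, and the fixed coefficient values are all assumptions made for illustration. In an actual model the pool and the coefficients would be trained, and the automatic construction of parameter groups is not shown.

```python
import random

def layer_weights(pool, shape, coeffs, offset=0):
    """Build a (rows x cols) weight matrix from a shared flat pool.

    Hypothetical scheme: candidate j is a window of rows*cols pool
    entries starting at offset + j*rows*cols, wrapping around the pool
    when it runs past the end. The layer's weights are the weighted
    sum of the candidates, with one coefficient per candidate.
    """
    rows, cols = shape
    n = rows * cols
    flat = [0.0] * n
    for j, c in enumerate(coeffs):
        start = offset + j * n
        for i in range(n):
            # Modular indexing lets a layer be larger than the pool.
            flat[i] += c * pool[(start + i) % len(pool)]
    # Reshape the flat vector into a list of rows.
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]

random.seed(0)
# One shared pool of 1000 values serves every layer below.
pool = [random.gauss(0.0, 1.0) for _ in range(1000)]

# Two layers with different shapes draw on the same pool.
w1 = layer_weights(pool, (32, 16), [0.5, 0.5])
w2 = layer_weights(pool, (8, 64), [1.0, -0.2, 0.3], offset=100)

# The layers together expose 1024 weights, backed by 1000 pool values.
layer_weight_count = len(w1) * len(w1[0]) + len(w2) * len(w2[0])
```

Because every layer reads from the same pool, the stored parameter count is the pool size plus a handful of coefficients per layer, regardless of how many layers are built or what shapes they have.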
