Separable Operator Networks

Paper and Code

Jul 15, 2024

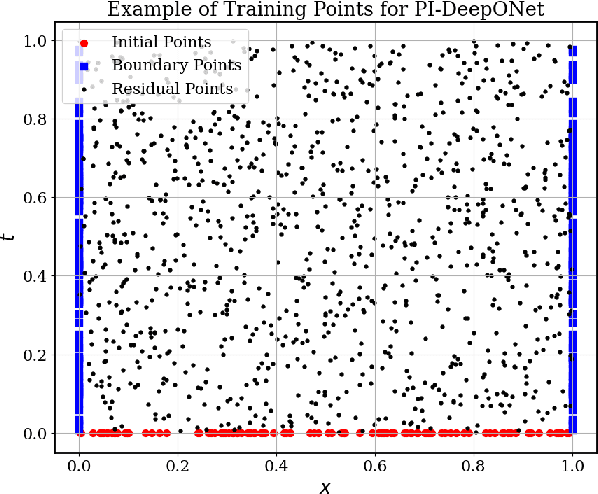

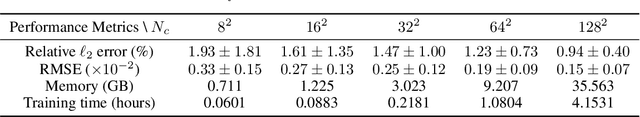

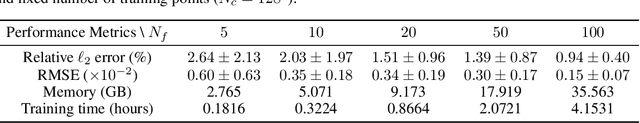

Operator learning has become a powerful tool in machine learning for modeling complex physical systems. Although Deep Operator Networks (DeepONet) show promise, they require extensive data acquisition. Physics-informed DeepONets (PI-DeepONet) mitigate data scarcity but suffer from inefficient training processes. We introduce Separable Operator Networks (SepONet), a novel framework that significantly enhances the efficiency of physics-informed operator learning. SepONet uses independent trunk networks to learn basis functions separately for different coordinate axes, enabling faster and more memory-efficient training via forward-mode automatic differentiation. We provide theoretical guarantees for SepONet using the universal approximation theorem and validate its performance through comprehensive benchmarking against PI-DeepONet. Our results demonstrate that for the 1D time-dependent advection equation, when targeting a mean relative $\ell_{2}$ error of less than 6% on 100 unseen variable coefficients, SepONet provides up to $112 \times$ training speed-up and $82 \times$ GPU memory usage reduction compared to PI-DeepONet. Similar computational advantages are observed across various partial differential equations, with SepONet's efficiency gains scaling favorably as problem complexity increases. This work paves the way for extreme-scale learning of continuous mappings between infinite-dimensional function spaces.
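The separable structure described in the abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation. It assumes a rank-`r` form in which the prediction at `(t, x)` is a sum over `k` of a branch coefficient times one basis function per axis, and every name, layer size, and weight here (`trunk`, `seponet_sketch`, `r`, `n_sensors`, the random single-layer networks) is hypothetical. The derivative of each trunk is written out by hand for a single `tanh` layer, standing in for what forward-mode automatic differentiation would compute.

```python
import numpy as np

rng = np.random.default_rng(0)
r = 8                # number of basis functions (assumed value)
n_t, n_x = 50, 40    # grid points along the t and x axes
n_sensors = 16       # samples of the input function fed to the branch

def make_layer(n_in, n_out):
    # Random weights stand in for trained parameters.
    return rng.normal(size=(n_in, n_out)), rng.normal(size=n_out)

W_b, b_b = make_layer(n_sensors, r)  # hypothetical one-layer branch net
W_t, b_t = make_layer(1, r)          # trunk for the t axis
W_x, b_x = make_layer(1, r)          # trunk for the x axis

def trunk(W, b, y):
    """Evaluate one axis's basis functions and their derivatives.

    y has shape (n,); both outputs have shape (n, r). The derivative is
    the tangent of a single tanh layer, written out by hand.
    """
    h = np.tanh(y[:, None] @ W + b)
    dh = (1.0 - h**2) * W
    return h, dh

def seponet_sketch(u, t, x):
    """Return the prediction and its t- and x-derivatives on the full grid."""
    beta = np.tanh(u @ W_b + b_b)          # (r,) branch coefficients
    phi_t, dphi_t = trunk(W_t, b_t, t)     # n_t trunk evaluations
    phi_x, dphi_x = trunk(W_x, b_x, x)     # n_x trunk evaluations
    # Outer-product combination: n_t + n_x trunk evaluations cover
    # all n_t * n_x grid points.
    s = np.einsum("k,ik,jk->ij", beta, phi_t, phi_x)
    s_t = np.einsum("k,ik,jk->ij", beta, dphi_t, phi_x)
    s_x = np.einsum("k,ik,jk->ij", beta, phi_t, dphi_x)
    return s, s_t, s_x

u = rng.normal(size=n_sensors)             # one sampled input function
t = np.linspace(0.0, 1.0, n_t)
x = np.linspace(0.0, 1.0, n_x)
s, s_t, s_x = seponet_sketch(u, t, x)
```

In this sketch, differentiating along one axis only touches that axis's trunk, so each partial derivative on the whole grid costs one extra pass over `n_t` or `n_x` points rather than a pass over every `(t, x)` pair.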
