Sensing accident-prone features in urban scenes for proactive driving and accident prevention

Feb 25, 2022

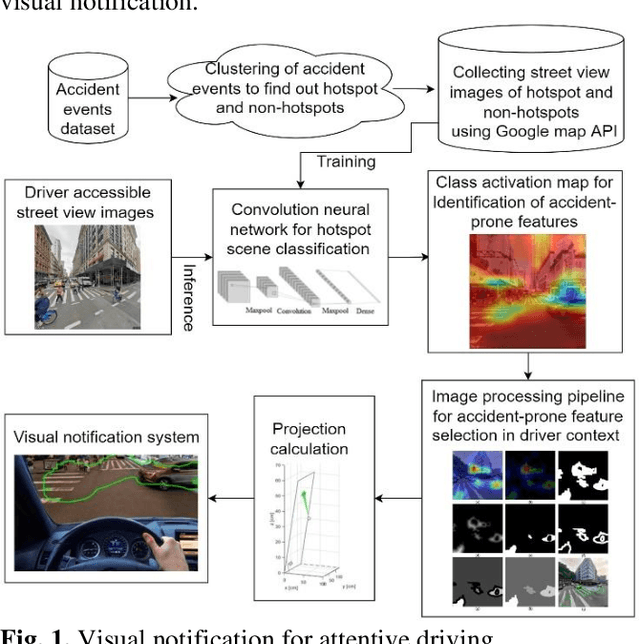

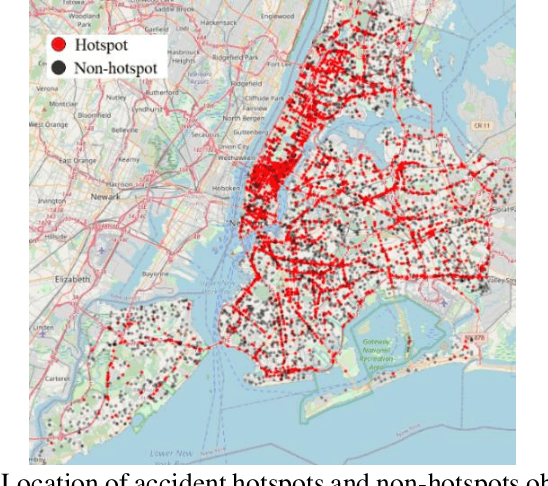

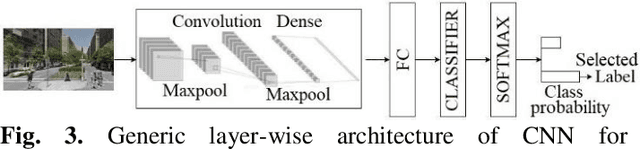

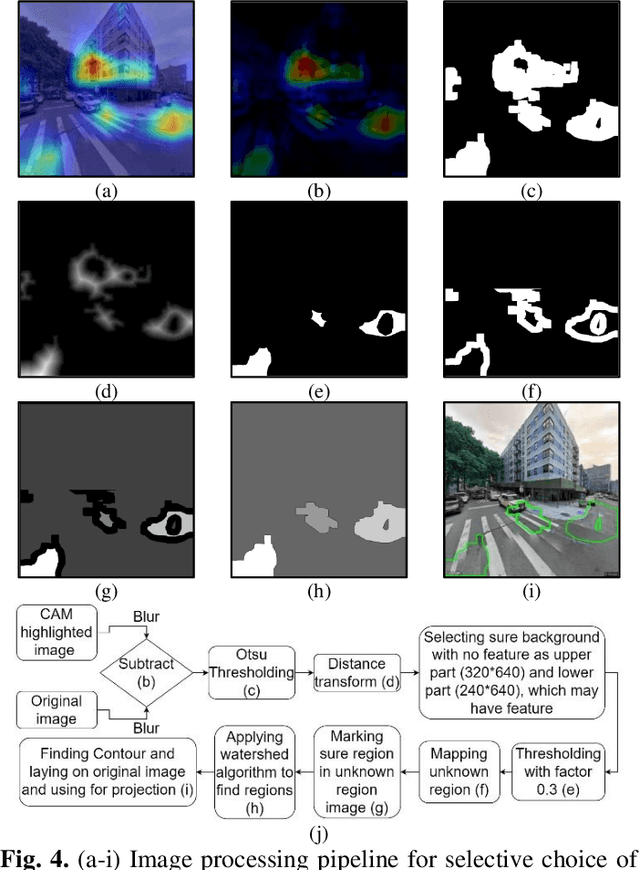

In cities, visual information along and on roadways is likely to distract drivers and can cause them to miss traffic signs and other accident-prone features. To avoid accidents caused by missing these visual cues, this paper proposes a visual notification of accident-prone features to drivers, based on real-time images obtained from a dashcam. For this purpose, Google Street View images around accident hotspots (areas of dense accident occurrence), identified from an accident dataset, are used to train a family of deep convolutional neural networks (CNNs). The trained CNNs are able to detect accident-prone features and classify a given urban scene as either an accident hotspot or a non-hotspot (an area of sparse accident occurrence). Given an accident hotspot, the trained CNNs classify it as a hotspot with an accuracy of up to 90%. The capability of the family of CNNs to detect accident-prone features is analyzed through a comparative study of four different class activation map (CAM) methods, which are used to inspect the specific accident-prone features driving the CNNs' decisions, and through pixel-level object class classification. The outputs of the CAM methods are processed by an image processing pipeline to extract only those accident-prone features that can be explained to drivers with the help of a visual notification system. To demonstrate the efficacy of the accident-prone features, an ablation study is conducted. Ablating accident-prone features that occupy, on average, 7.7% of the total area of each image sample increases the chance that the given area is classified as a non-hotspot by up to 13.7%.
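The ablation idea described above can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the choice of filling ablated pixels with the image's mean color, and the toy classifier are all assumptions made for illustration. The sketch takes a CAM heatmap, selects the highest-scoring pixels covering a chosen fraction of the image area, blanks them out, and compares a classifier's hotspot probability before and after.

```python
import numpy as np


def top_fraction_mask(cam, fraction):
    """Boolean mask of roughly the `fraction` of pixels with the highest CAM scores.

    Ties at the threshold are all included, so the mask can be slightly larger.
    """
    k = max(1, int(round(fraction * cam.size)))
    # The k-th largest CAM value serves as the selection threshold.
    threshold = np.partition(cam.ravel(), -k)[-k]
    return cam >= threshold


def ablate(image, mask):
    """Replace masked pixels with the image's mean color (an assumed fill strategy)."""
    out = image.astype(np.float32).copy()
    fill = out.reshape(-1, out.shape[-1]).mean(axis=0)
    out[mask] = fill
    return out


def ablation_effect(image, cam, predict_hotspot_prob, fraction=0.077):
    """Return (drop in hotspot probability, fraction of image area ablated).

    `predict_hotspot_prob` is a hypothetical callable mapping an H x W x C
    image to a probability in [0, 1]; in practice it would wrap a trained CNN.
    """
    mask = top_fraction_mask(cam, fraction)
    p_before = predict_hotspot_prob(image)
    p_after = predict_hotspot_prob(ablate(image, mask))
    return p_before - p_after, float(mask.mean())


# Toy demonstration with synthetic data (no real model or street imagery).
image = np.zeros((10, 10, 3), dtype=np.uint8)
image[9, :, :] = 255                          # a bright "feature" in the last row
cam = np.arange(100, dtype=np.float32).reshape(10, 10)  # CAM peaks on that row


def toy_classifier(img):
    # Stand-in for a CNN: the probability grows with the brightest pixel value.
    return float(np.max(img)) / 255.0


drop, area = ablation_effect(image, cam, toy_classifier, fraction=0.1)
```

With a real model, `toy_classifier` would be replaced by a forward pass through the trained network, and `cam` by the output of whichever CAM method is being evaluated; averaging `drop` and `area` over many images would give summary figures of the kind the abstract reports.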
