Semantic Ensemble Loss and Latent Refinement for High-Fidelity Neural Image Compression

Jan 25, 2024

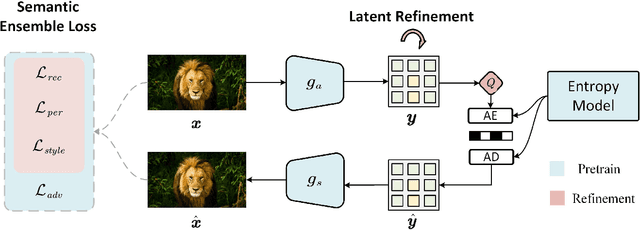

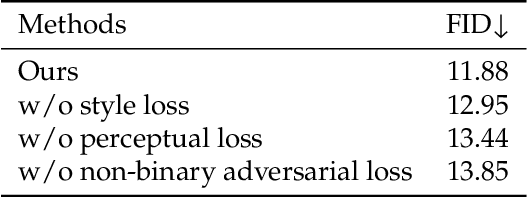

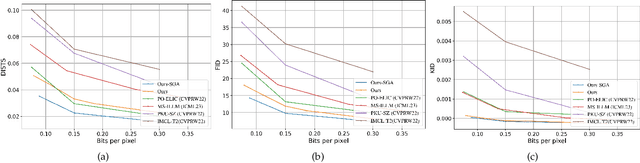

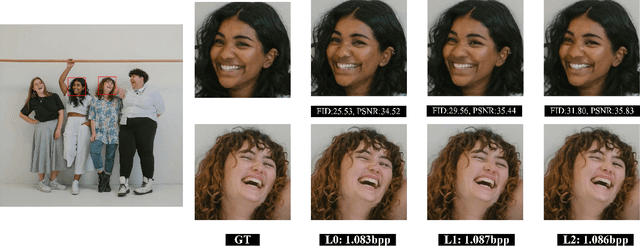

Recent advancements in neural compression have surpassed traditional codecs in PSNR and MS-SSIM measurements. However, at low bitrates, these methods can introduce visually displeasing artifacts, such as blurring, color shifting, and texture loss, thereby compromising the perceptual quality of images. To address these issues, this study presents an enhanced neural compression method designed for optimal visual fidelity. We train our model with a semantic ensemble loss that integrates Charbonnier loss, perceptual loss, style loss, and a non-binary adversarial loss to enhance the perceptual quality of image reconstructions. Additionally, we implement a latent refinement process to generate content-aware latent codes. These codes adhere to bitrate constraints, balance the trade-off between distortion and fidelity, and prioritize bit allocation to regions of greater importance. Our empirical findings demonstrate that this approach significantly improves the statistical fidelity of neural image compression. On the CLIC2024 validation set, our approach achieves a 62% bitrate saving compared to MS-ILLM under the FID metric.
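As a rough illustration of how an ensemble loss of this kind can be assembled, the sketch below implements two of the named terms in plain Python: the Charbonnier penalty and a Gram-matrix style loss. It is a minimal sketch under stated assumptions, not the authors' implementation. The abstract does not give the exact formulation, so the function names, the `eps` value, and the weights are hypothetical; the perceptual and adversarial terms are omitted because they require a feature network and a discriminator. In practice, style losses of this form are usually computed on feature maps from a pretrained network rather than on raw values.

```python
import math


def charbonnier_loss(x, y, eps=1e-3):
    """Mean Charbonnier penalty sqrt((x - y)^2 + eps^2) over paired samples.

    eps is a small smoothing constant; 1e-3 is an assumed default.
    """
    if len(x) != len(y):
        raise ValueError("inputs must have the same length")
    return sum(math.sqrt((a - b) ** 2 + eps ** 2) for a, b in zip(x, y)) / len(x)


def gram_matrix(features):
    """Gram matrix of a feature map given as C channels of N values each."""
    n = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n for fj in features]
        for fi in features
    ]


def style_loss(feat_x, feat_y):
    """Mean squared difference between the Gram matrices of two feature maps."""
    gx, gy = gram_matrix(feat_x), gram_matrix(feat_y)
    c = len(gx)
    return sum(
        (gx[i][j] - gy[i][j]) ** 2 for i in range(c) for j in range(c)
    ) / (c * c)


def ensemble_loss(terms, weights):
    """Weighted sum of named loss terms (the weights are hypothetical)."""
    return sum(weights[name] * value for name, value in terms.items())


# Toy usage on hand-made values; a real pipeline would use image tensors.
original = [0.2, 0.5, 0.9]
reconstruction = [0.25, 0.45, 0.8]
feats_a = [[1.0, 0.0], [0.0, 1.0]]
feats_b = [[0.9, 0.1], [0.1, 0.9]]

terms = {
    "charbonnier": charbonnier_loss(original, reconstruction),
    "style": style_loss(feats_a, feats_b),
}
total = ensemble_loss(terms, {"charbonnier": 1.0, "style": 0.5})
```

The Charbonnier term behaves like an L1 loss for large errors while staying differentiable near zero, which is one common reason it is preferred over plain L1 or L2 distortion.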
