Self-supervised 3D Point Cloud Completion via Multi-view Adversarial Learning

Paper and Code

Jul 13, 2024

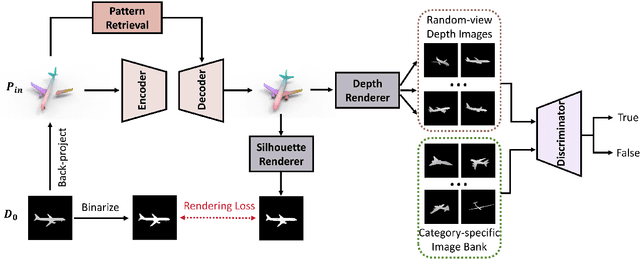

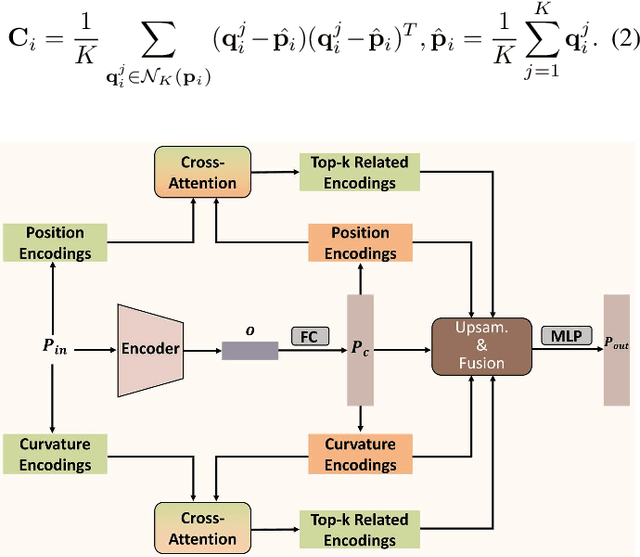

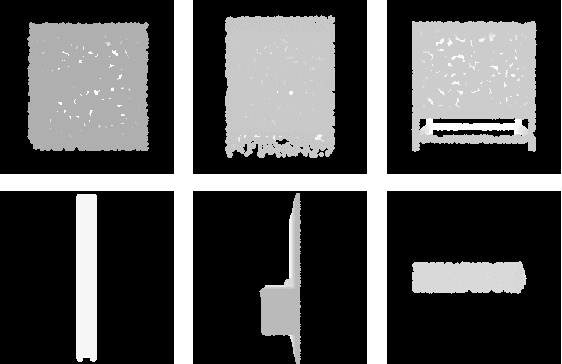

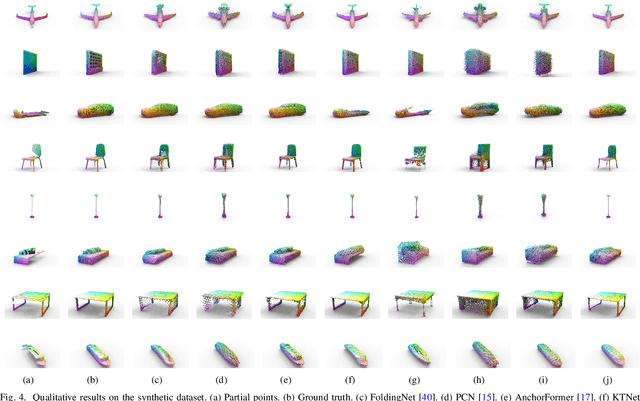

In real-world scenarios, scanned point clouds are often incomplete because of occlusion. Self-supervised point cloud completion aims to reconstruct the missing regions of these incomplete objects without supervision from complete ground truth. Current self-supervised methods either rely on multiple views of partial observations for supervision or overlook the intrinsic geometric similarity that can be identified in, and exploited from, the given partial point clouds. In this paper, we propose MAL-SPC, a framework that leverages both object-level and category-specific geometric similarities to complete missing structures. MAL-SPC requires no complete 3D supervision and needs only a single partial point cloud for each object. Specifically, we first introduce a Pattern Retrieval Network to retrieve similar position and curvature patterns between the partial input and the predicted shape, then leverage these similarities to densify and refine the reconstructed results. Additionally, we render the reconstructed complete shape into multi-view depth maps and design an adversarial learning module to learn the geometry of the target shape from category-specific single-view depth images. To achieve anisotropic rendering, we design a density-aware radius estimation algorithm that improves the quality of the rendered images. MAL-SPC yields the best results compared with current state-of-the-art methods. We will make the source code publicly available at https://github.com/ltwu6/malspc
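The abstract does not specify how the density-aware radius estimation or the depth rendering is implemented. As a rough illustration of the general idea only, the NumPy sketch below assigns each point a splat radius proportional to its mean distance to its k nearest neighbors, so sparse regions receive larger discs, and then renders an orthographic depth map with a z-buffer. The function names `density_aware_radii` and `render_depth`, the parameters `k`, `scale`, and `res`, and the k-nearest-neighbor heuristic itself are assumptions made for this example and are not taken from the MAL-SPC code.

```python
import numpy as np


def density_aware_radii(points, k=8, scale=0.5):
    """Per-point splat radius from local density.

    Hypothetical heuristic (not the authors' algorithm): the radius is
    `scale` times the mean distance to the k nearest neighbors.
    """
    diff = points[:, None, :] - points[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)  # (N, N) pairwise distances
    # After sorting each row, column 0 is the point itself (distance 0).
    knn = np.sort(dist, axis=1)[:, 1:k + 1]
    return scale * knn.mean(axis=1)


def render_depth(points, radii, res=64):
    """Orthographic depth map along the z axis with a z-buffer.

    Assumes points are normalized to [-1, 1]^3 and that smaller z is
    closer to the camera. Empty pixels stay at +inf.
    """
    depth = np.full((res, res), np.inf)
    # Pixel centers in normalized image coordinates.
    axis = (np.arange(res) + 0.5) / res * 2.0 - 1.0
    gx, gy = np.meshgrid(axis, axis, indexing="xy")
    for (x, y, z), r in zip(points, radii):
        # Splat the point as a disc whose size follows its local density.
        mask = (gx - x) ** 2 + (gy - y) ** 2 <= r ** 2
        depth[mask] = np.minimum(depth[mask], z)
    return depth
```

Calling `render_depth(points, density_aware_radii(points))` on rotated copies of the same cloud would give a set of multi-view depth maps. This sketch uses isotropic discs and a dense O(N²) distance matrix, which is only practical for small clouds; a real pipeline would likely use a spatial index and a differentiable renderer.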
