Self-Enhanced GNN: Improving Graph Neural Networks Using Model Outputs

Paper and Code

Mar 16, 2020

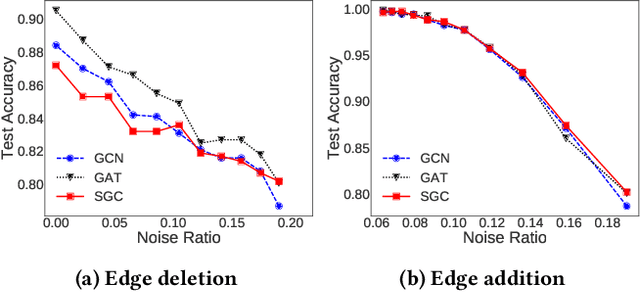

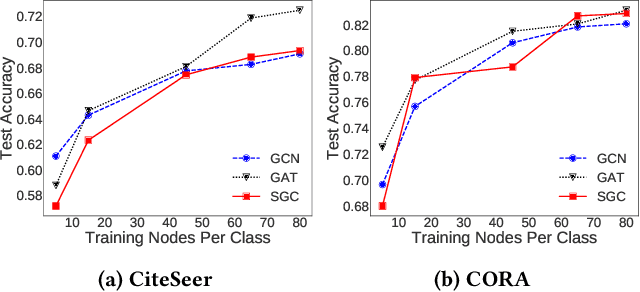

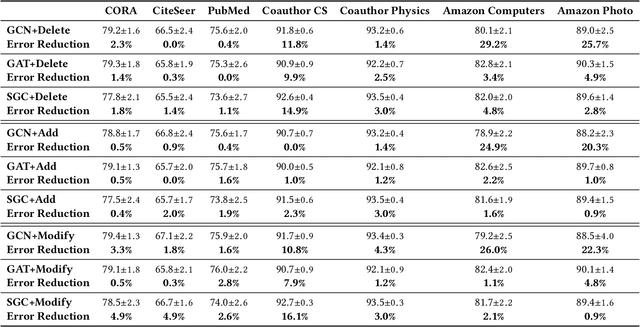

Graph neural networks (GNNs) have received much attention recently because of their excellent performance on graph-based tasks. However, existing research on GNNs focuses on designing more effective models and pays little attention to the quality of the input data itself. In this paper, we propose self-enhanced GNN, which uses the outputs of existing GNN models to improve the quality of the input data and thereby achieve better performance on semi-supervised node classification. As graph data consist of both topology and node labels, we improve input data quality from both perspectives. For topology, we observe that higher classification accuracy can be achieved when the ratio of inter-class edges (edges connecting nodes from different classes) is low, and we propose topology update, which removes inter-class edges and adds intra-class edges. For node labels, we propose training node augmentation, which enlarges the training set using the labels predicted by existing GNN models. Because self-enhanced GNN operates on the input graph data rather than on the model, it is general and can be easily combined with existing GNN models. Experimental results on three well-known GNN models and seven popular datasets show that self-enhanced GNN consistently improves the performance of the three models. The reduction in classification error is 16.2% on average and can be as high as 35.1%.
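The two ideas in the abstract can be illustrated with a minimal NumPy sketch. It is not the paper's exact procedure. It assumes that a trained GNN has already produced a matrix `probs` of per-node class probabilities, and it uses simple confidence thresholds to decide which predictions to trust. The function names, the thresholds, and the toy graph are all hypothetical and chosen only for illustration. The sketch covers edge removal and pseudo-labeling only; adding intra-class edges is left out.

```python
import numpy as np

def inter_class_edge_ratio(edges, labels):
    """Fraction of edges whose two endpoints carry different labels."""
    edges = np.asarray(edges)
    if len(edges) == 0:
        return 0.0
    return float(np.mean(labels[edges[:, 0]] != labels[edges[:, 1]]))

def remove_predicted_inter_class_edges(edges, probs, threshold=0.8):
    """Drop edges whose endpoints are both confidently predicted to be in
    different classes (threshold is an assumed hyperparameter)."""
    edges = np.asarray(edges)
    pred = probs.argmax(axis=1)   # predicted class per node
    conf = probs.max(axis=1)      # confidence of that prediction
    u, v = edges[:, 0], edges[:, 1]
    drop = (pred[u] != pred[v]) & (conf[u] >= threshold) & (conf[v] >= threshold)
    return edges[~drop]

def augment_training_set(train_idx, train_labels, probs, threshold=0.85):
    """Add confidently predicted unlabeled nodes to the training set,
    using the model's predictions as pseudo-labels."""
    pred = probs.argmax(axis=1)
    conf = probs.max(axis=1)
    labeled = np.zeros(len(probs), dtype=bool)
    labeled[train_idx] = True
    new_idx = np.flatnonzero(~labeled & (conf >= threshold))
    return (np.concatenate([train_idx, new_idx]),
            np.concatenate([train_labels, pred[new_idx]]))

# Toy graph: a path 0-1-2-3 where nodes 0, 1 are class 0 and nodes 2, 3 are class 1.
true_labels = np.array([0, 0, 1, 1])
edges = np.array([[0, 1], [1, 2], [2, 3]])
# Hypothetical model outputs; node 3 is predicted with low confidence.
probs = np.array([[0.95, 0.05], [0.90, 0.10], [0.10, 0.90], [0.40, 0.60]])

ratio_before = inter_class_edge_ratio(edges, true_labels)
new_edges = remove_predicted_inter_class_edges(edges, probs)
ratio_after = inter_class_edge_ratio(new_edges, true_labels)

new_train_idx, new_train_labels = augment_training_set(
    np.array([0]), np.array([0]), probs)
```

On this toy input, the edge between nodes 1 and 2 is removed because both endpoints are confidently predicted in different classes, and nodes 1 and 2 join the training set with their predicted labels while the low-confidence node 3 stays unlabeled. In practice the thresholds trade off how many noisy edges or pseudo-labels are admitted against how much the graph and training set actually change.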
