Seeing Through The Noisy Dark: Toward Real-world Low-Light Image Enhancement and Denoising

Oct 07, 2022

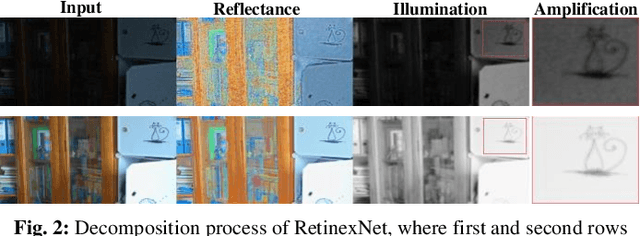

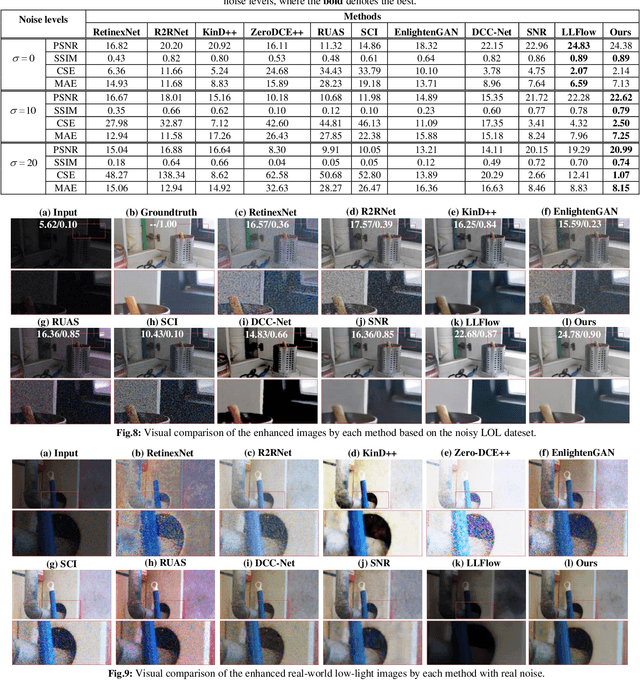

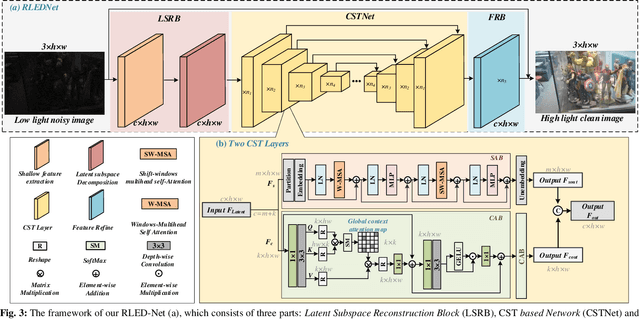

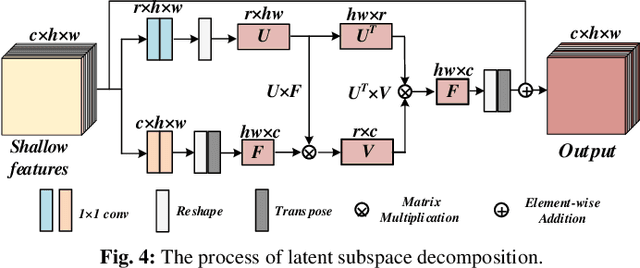

Images captured in real-world low-light environments usually suffer from low visibility and heavy noise due to insufficient light or hardware limitations. However, existing low-light image enhancement (LLIE) methods largely ignore noise and mainly focus on refining the illumination of low-light images using benchmark datasets in which noise is negligible. This makes them ill-suited to real-world LLIE (RLLIE) with heavy noise, where they leave speckle noise and blur in the enhanced images. Although several LLIE methods do account for noise in low-light images, they are trained on raw data and therefore cannot be applied to sRGB images, because the two data domains differ and working with raw data requires expertise or relies on unknown protocols. In this paper, we explicitly address the task of seeing through the noisy dark in the sRGB color space and propose a novel end-to-end method termed the Real-world Low-light Enhancement & Denoising Network (RLED-Net). Since natural images can usually be characterized by low-rank subspaces in which redundant information and noise can be removed, we design a Latent Subspace Reconstruction Block (LSRB) for feature extraction and denoising. To reduce the loss of global features (e.g., color and shape information) and extract more accurate local features (e.g., edge and texture information), we also present a basic two-branch layer called the Cross-channel & Shift-window Transformer (CST). Based on the CST, we further build a new backbone, a U-structure network (CSTNet), for deep feature recovery, and design a Feature Refine Block (FRB) to refine the final features. Extensive experiments on real noisy images and public databases verify the effectiveness of RLED-Net for both RLLIE and denoising.
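The abstract does not describe the internals of the LSRB, but the idea it appeals to, that signal lives in a low-rank subspace while noise spreads across all dimensions, can be illustrated with a generic truncated-SVD projection. The sketch below is an assumption-laden illustration, not the authors' LSRB: it treats a feature map as a hypothetical `(channels, pixels)` matrix, keeps only its leading `rank` singular components, and uses synthetic data. The function name `low_rank_project` and the `rank` parameter are hypothetical.

```python
import numpy as np


def low_rank_project(features: np.ndarray, rank: int) -> np.ndarray:
    """Project a (channels, pixels) matrix onto its top-`rank` subspace.

    Generic truncated-SVD denoising for illustration only; this is NOT
    the LSRB from RLED-Net, whose design the abstract does not specify.
    """
    # Center each channel so the subspace models variation, not the mean.
    mean = features.mean(axis=1, keepdims=True)
    centered = features - mean
    # Thin SVD: centered = u @ diag(s) @ vt.
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    # Orthonormal basis of the leading `rank` channel-space directions.
    basis = u[:, :rank]
    # Orthogonal projection onto span(basis), then restore the mean.
    return basis @ (basis.T @ centered) + mean


# Synthetic example: a rank-3 "clean" signal plus dense Gaussian noise.
rng = np.random.default_rng(0)
channels, pixels, true_rank = 32, 4096, 3
clean = rng.standard_normal((channels, true_rank)) @ rng.standard_normal(
    (true_rank, pixels)
)
noisy = clean + 0.3 * rng.standard_normal((channels, pixels))
denoised = low_rank_project(noisy, rank=true_rank)

noisy_mse = np.mean((noisy - clean) ** 2)
denoised_mse = np.mean((denoised - clean) ** 2)
print(f"noisy MSE:    {noisy_mse:.4f}")
print(f"denoised MSE: {denoised_mse:.4f}")
```

Because the projection keeps only 3 of 32 channel-space directions, most of the isotropic noise is discarded while the rank-3 signal is preserved. A learned block would instead estimate the subspace from data inside the network, which this fixed SVD step does not attempt.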