Searching Transferable Mixed-Precision Quantization Policy through Large Margin Regularization


Feb 14, 2023

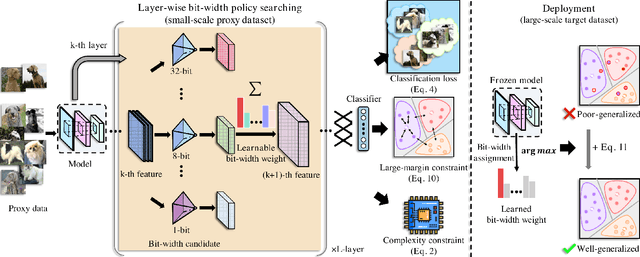

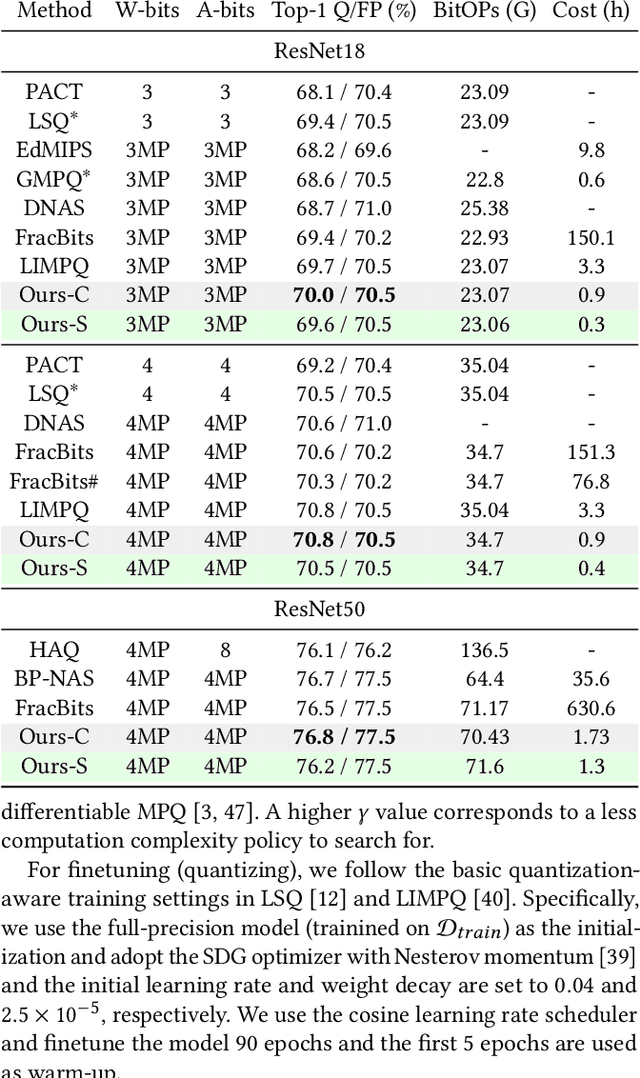

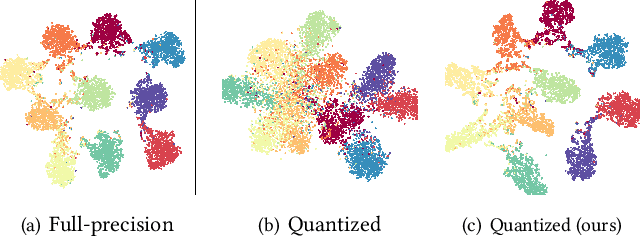

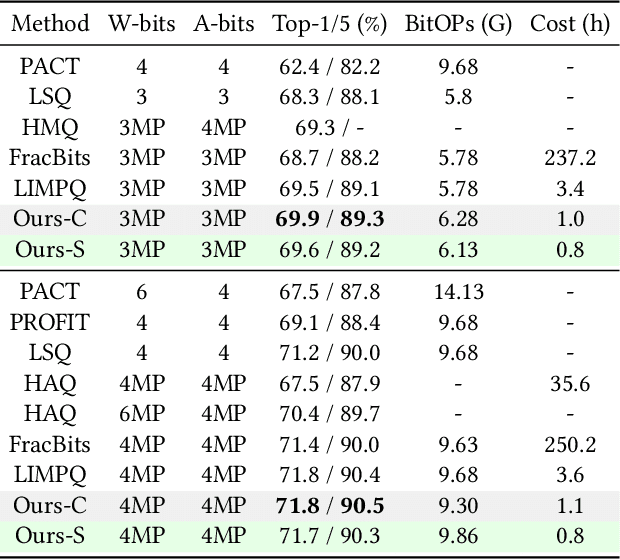

Mixed-precision quantization (MPQ) suffers from a time-consuming policy search process (i.e., finding the bit-width assignment for each layer) on large-scale datasets (e.g., ILSVRC-2012), which heavily limits its practicality in real-world deployment scenarios. In this paper, we propose to search for an effective MPQ policy using a small proxy dataset, for a model trained on a large-scale one. This breaks the routine of requiring the same dataset at model training time and MPQ policy search time, and can significantly improve MPQ search efficiency. However, the discrepancy between the data distributions makes it difficult to find such a transferable MPQ policy. Motivated by the observation that quantization narrows the class margin and blurs the decision boundary, we search for a policy that guarantees a general, dataset-independent property: the discriminability of feature representations. Namely, we seek a policy that robustly preserves intra-class compactness and inter-class separation. Our method offers several advantages: high proxy data utilization, no extra hyper-parameter tuning for approximating the relationship between the full-precision and quantized models, and high search efficiency. We find high-quality MPQ policies with a proxy dataset that is only 4% of the size of the large-scale target dataset, achieving the same accuracy as searching directly on the target dataset and improving MPQ search efficiency by up to 300 times.
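To make the notion of discriminability concrete, the following is a minimal illustrative sketch, not the paper's actual objective or search procedure. It assumes a toy stack of linear layers, a symmetric uniform weight quantizer, and a simple score defined as the ratio of inter-class centroid distance to intra-class spread. The names `quantize_uniform`, `discriminability`, and `score_policy` are hypothetical and introduced only for illustration.

```python
import numpy as np


def quantize_uniform(w, bits):
    """Symmetric uniform quantization of an array to `bits` bits (assumed scheme)."""
    levels = 2 ** (bits - 1) - 1          # e.g. 127 for 8 bits, 1 for 2 bits
    scale = np.max(np.abs(w)) / levels
    if scale == 0:
        return w.copy()
    return np.round(w / scale) * scale


def discriminability(features, labels):
    """Inter-class separation divided by intra-class spread (higher is better)."""
    classes = np.unique(labels)
    centroids = np.stack([features[labels == c].mean(axis=0) for c in classes])
    # Intra-class compactness: mean distance of samples to their class centroid.
    intra = np.mean([
        np.linalg.norm(features[labels == c] - centroids[i], axis=1).mean()
        for i, c in enumerate(classes)
    ])
    # Inter-class separation: mean pairwise distance between class centroids.
    dists = np.linalg.norm(centroids[:, None, :] - centroids[None, :, :], axis=-1)
    inter = dists[np.triu_indices(len(classes), k=1)].mean()
    return inter / (intra + 1e-12)


def score_policy(weights, policy, x, labels):
    """Score a per-layer bit-width policy on proxy data (toy ReLU network)."""
    h = x
    for w, bits in zip(weights, policy):
        h = np.maximum(h @ quantize_uniform(w, bits), 0.0)
    return discriminability(h, labels)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Two synthetic classes standing in for a small proxy dataset.
    x = np.concatenate([rng.normal(0.0, 1.0, (50, 8)), rng.normal(2.0, 1.0, (50, 8))])
    y = np.array([0] * 50 + [1] * 50)
    weights = [rng.normal(size=(8, 16)), rng.normal(size=(16, 4))]
    for policy in [(8, 8), (4, 8), (2, 2)]:
        print(policy, score_policy(weights, policy, x, y))
```

Under these assumptions, a search procedure would compare candidate policies by such a score on the proxy data and prefer those that keep it close to the full-precision value. The score depends only on feature geometry rather than on the target dataset's labels, which is the intuition behind a transferable policy.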
