SCOBO: Sparsity-Aware Comparison Oracle Based Optimization

Paper and Code

Oct 06, 2020

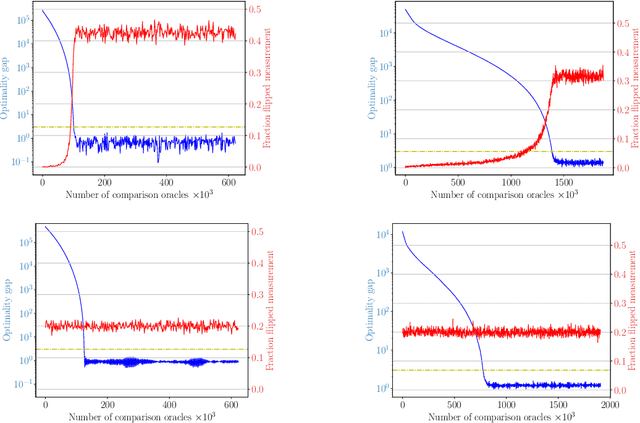

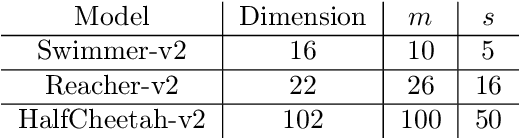

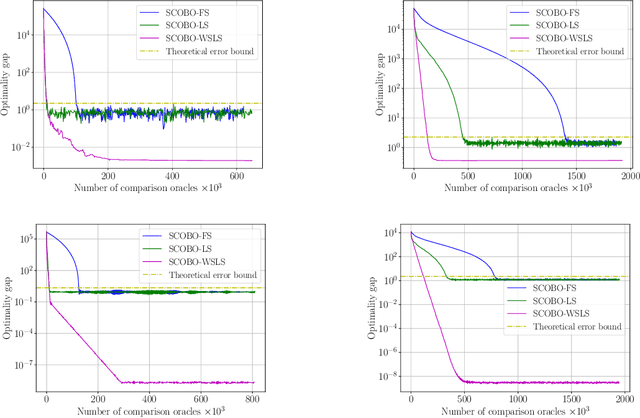

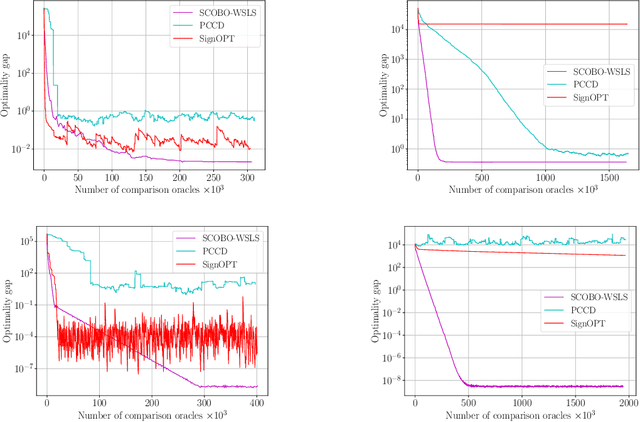

We study derivative-free optimization for convex functions where we further assume that function evaluations are unavailable. Instead, one only has access to a comparison oracle, which, given two points $x$ and $y$, and returns a single bit of information indicating which point has larger function value, $f(x)$ or $f(y)$, with some probability of being incorrect. This probability may be constant or it may depend on $|f(x)-f(y)|$. Previous algorithms for this problem have been hampered by a query complexity which is polynomially dependent on the problem dimension, $d$. We propose a novel algorithm that breaks this dependence: it has query complexity only logarithmically dependent on $d$ if the function in addition has low dimensional structure that can be exploited. Numerical experiments on synthetic data and the MuJoCo dataset show that our algorithm outperforms state-of-the-art methods for comparison based optimization, and is even competitive with other derivative-free algorithms that require explicit function evaluations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge