Scene-Specific Pedestrian Detection Based on Parallel Vision

Paper and Code

Dec 23, 2017

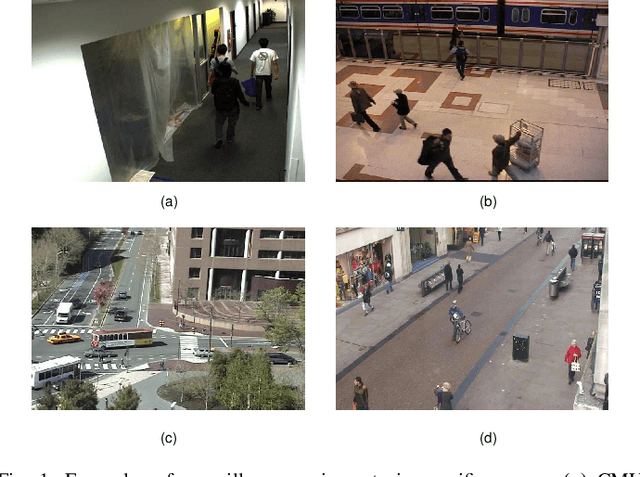

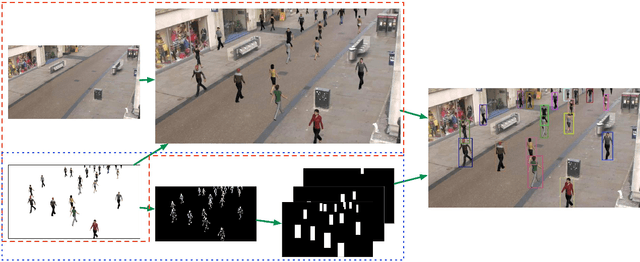

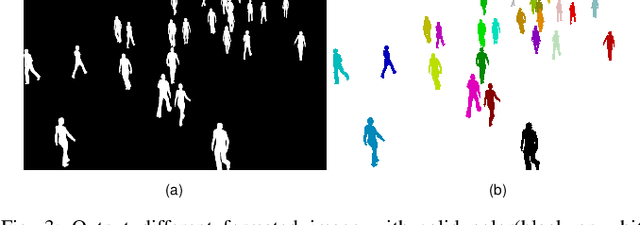

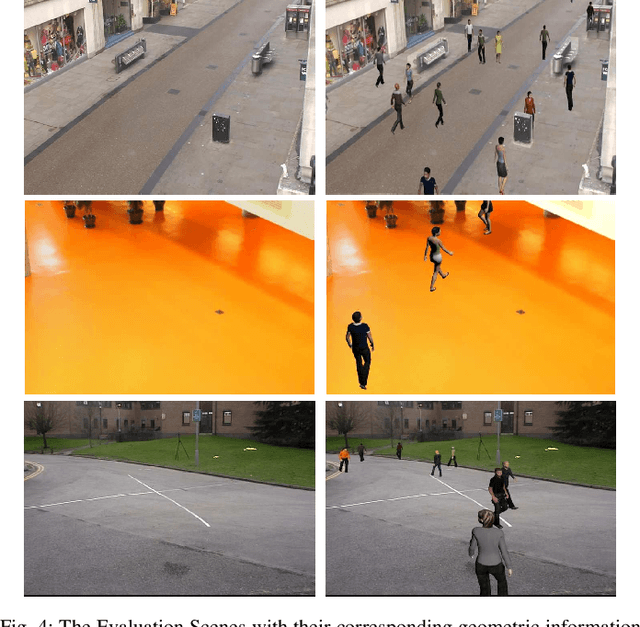

As a special type of object detection, pedestrian detection in generic scenes has made significant progress by training on large amounts of manually labeled data. However, models trained on generic datasets perform poorly when applied directly to specific scenes. Because of their particular viewpoints, lighting, and backgrounds, datasets from specific scenes differ substantially from generic ones. To make generic pedestrian detectors work well in a specific scene, labeled data from that scene are needed to adapt the models. Manual labeling, however, costs much time and money, and a large number of images must be labeled for every new scene. Moreover, manual annotations are not pixel-accurate, and different annotators label the same data differently. In this paper, we propose an ACP-based method to address this lack of labeled data: with the help of augmented reality, we build a virtual world of the specific scene and place pedestrians walking in the virtual scene at locations where they could plausibly appear. The results show that data from the virtual world help adapt generic pedestrian detectors to specific scenes.
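The idea of generating labeled data by placing virtual pedestrians into a scene can be illustrated with a minimal sketch. This is not the paper's pipeline, which builds a virtual world with augmented reality; it substitutes simple 2D sprite compositing to show why labels come for free: the generator decides where each pedestrian goes, so the bounding box is known exactly. The function name `composite_pedestrians`, the `walkable` list of foot positions, and the toy image representation are all assumptions made for illustration.

```python
import random


def composite_pedestrians(background, sprite, walkable, count, seed=0):
    """Paste `sprite` onto a copy of `background` at random walkable spots.

    background: 2D list of pixel values (rows of ints).
    sprite: 2D list of pixel values; None marks a transparent pixel.
    walkable: list of (x, y) foot positions where a pedestrian may appear
              (hypothetical stand-in for the plausible regions of a scene).
    Returns (image, boxes), with boxes as (x_min, y_min, x_max, y_max)
    and the max coordinates exclusive.
    """
    rng = random.Random(seed)
    image = [row[:] for row in background]  # leave the input untouched
    height, width = len(image), len(image[0])
    sh, sw = len(sprite), len(sprite[0])

    # Keep only foot positions where the whole sprite fits inside the frame.
    candidates = [
        (x, y) for x, y in walkable
        if x - sw // 2 >= 0 and x - sw // 2 + sw <= width
        and y - sh + 1 >= 0 and y < height
    ]

    boxes = []
    for foot_x, foot_y in rng.sample(candidates, min(count, len(candidates))):
        left, top = foot_x - sw // 2, foot_y - sh + 1
        for dy in range(sh):
            for dx in range(sw):
                if sprite[dy][dx] is not None:
                    image[top + dy][left + dx] = sprite[dy][dx]
        # The label is exact because the placement is chosen by the generator.
        boxes.append((left, top, left + sw, top + sh))
    return image, boxes


# Toy usage: a 20x10 empty scene, a 3x4 sprite, two walkable rows.
background = [[0] * 20 for _ in range(10)]
sprite = [[None, 9, None], [9, 9, 9], [9, 9, 9], [9, None, 9]]
walkable = [(x, 9) for x in range(20)] + [(x, 7) for x in range(20)]
image, boxes = composite_pedestrians(background, sprite, walkable, count=3)
```

Each returned box could then be written out as an annotation alongside the composited image and used as scene-specific training data for a detector. Placements may overlap in this sketch; a fuller version would handle occlusion and scale with depth.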
