S-RL Toolbox: Environments, Datasets and Evaluation Metrics for State Representation Learning

Paper and Code

Oct 10, 2018

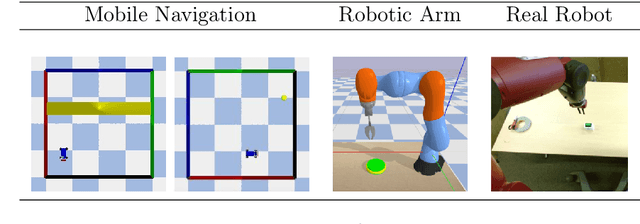

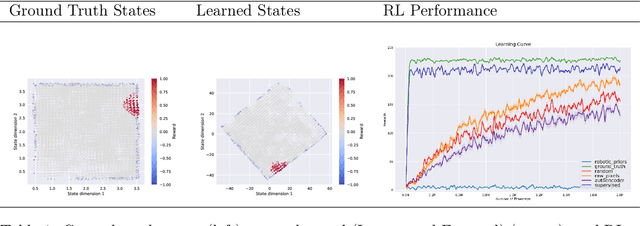

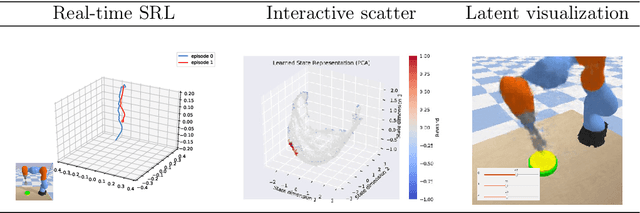

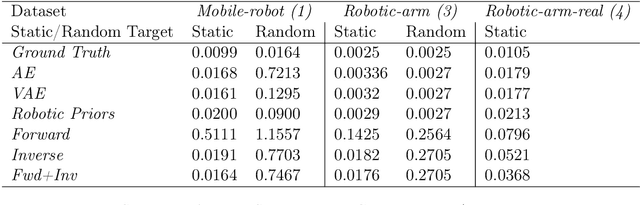

State representation learning aims to learn compact representations from raw observations in robotics and control applications. Common approaches include auto-encoders, forward models, inverse dynamics models, and generic priors on the state characteristics. However, the diversity of applications and methods has left the field without standard evaluation datasets, metrics and tasks. This paper provides a set of environments, data generators, robotic control tasks, metrics and tools to facilitate iterative state representation learning and evaluation in reinforcement learning settings.

* GitHub repo: https://github.com/araffin/robotics-rl-srl
* Documentation: https://s-rl-toolbox.readthedocs.io/en/latest/
