Robustness Implies Fairness in Causal Algorithmic Recourse

Paper and Code

Feb 11, 2023

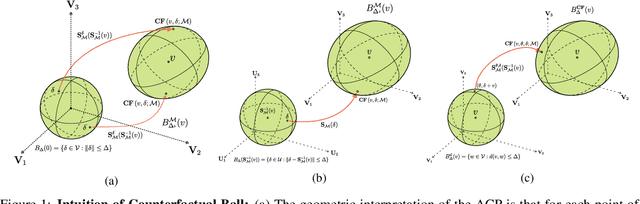

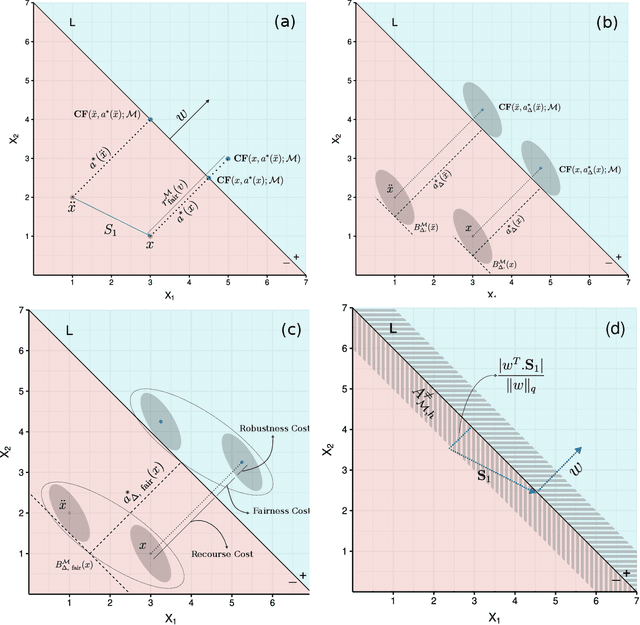

Algorithmic recourse aims to disclose the inner workings of black-box decision processes in situations where decisions have significant consequences, by providing recommendations that empower beneficiaries to achieve a more favorable outcome. To ensure an effective remedy, suggested interventions must not only be low-cost but also robust and fair. This goal is accomplished by providing similar explanations to individuals who are alike. This study explores the concepts of individual fairness and adversarial robustness in causal algorithmic recourse and addresses the challenge of achieving both. To resolve this challenge, we propose a new framework for defining adversarially robust recourse. The new setting views the protected feature as a pseudometric and demonstrates that individual fairness is a special case of adversarial robustness. Finally, we introduce the fair robust recourse problem to achieve both desirable properties and show how it can be satisfied both theoretically and empirically.
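The claim that individual fairness is a special case of adversarial robustness can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the feature layout (index 0 as the protected attribute), the linear `classifier`, the additive `apply_action` (which ignores the causal model entirely), and the helper names. The idea is that a distance which ignores the protected attribute is a pseudometric, so "twins" who differ only in that attribute sit at distance 0 and fall inside every uncertainty ball. A recourse action that is robust over the ball is then necessarily valid for both twins.

```python
import math

# Assumption for illustration: feature 0 is the protected attribute.
PROTECTED = 0


def pseudometric(x, y):
    """Euclidean distance over the non-protected features only.

    Individuals differing only in the protected attribute are at
    distance 0, which makes this a pseudometric rather than a metric.
    """
    return math.sqrt(
        sum((a - b) ** 2 for i, (a, b) in enumerate(zip(x, y)) if i != PROTECTED)
    )


def classifier(x):
    """Toy linear classifier that (unfairly) depends on the protected feature."""
    return 1 if x[1] + x[2] - 0.5 * x[0] >= 2.0 else 0


def apply_action(x, action):
    """Additive shift of features; a stand-in for a causal intervention."""
    return tuple(a + d for a, d in zip(x, action))


def is_robust(center, action, eps, candidates):
    """True if the action yields a favorable outcome for every candidate
    inside the eps-ball (under the pseudometric) around `center`."""
    return all(
        classifier(apply_action(z, action)) == 1
        for z in candidates
        if pseudometric(center, z) <= eps
    )


# Twins: identical except for the protected attribute.
x = (1, 0.5, 0.5)
twin = (0, 0.5, 0.5)
population = [x, twin]

fragile_action = (0, 0.5, 0.75)  # works for `twin` but not for `x`
robust_action = (0, 1.0, 0.5)    # works for both twins
```

Under these assumptions, `is_robust(twin, fragile_action, 0.0, population)` is false because `x` lies in the zero-radius ball around `twin` and the action fails for `x`, while `robust_action` passes. Even with a radius of 0, robustness under the pseudometric forces the same recourse to work for both twins, which is the individual-fairness requirement in this toy setting.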
