Robust MCMC Sampling with Non-Gaussian and Hierarchical Priors in High Dimensions


Mar 09, 2018

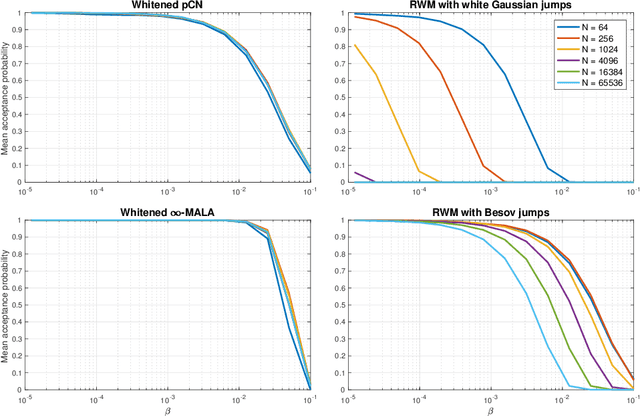

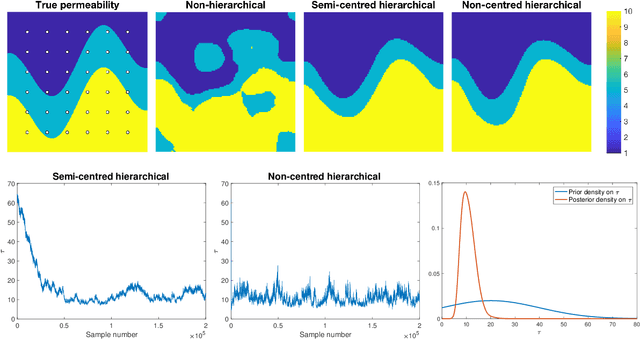

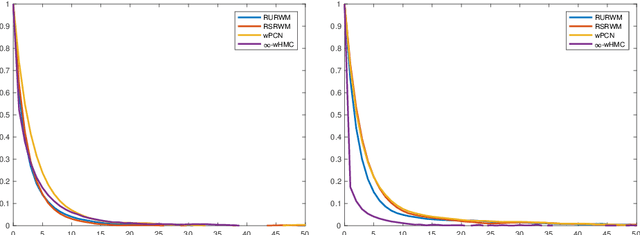

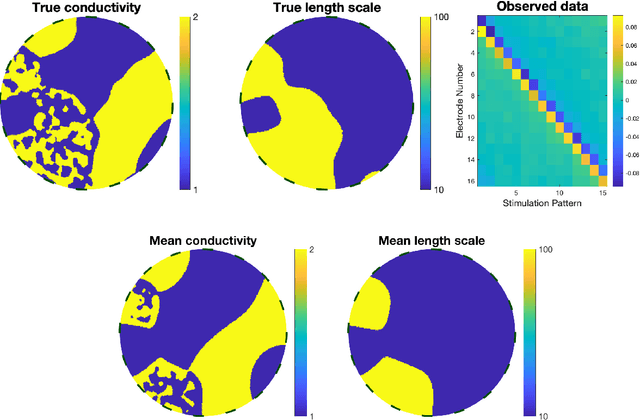

A key problem in inference for high-dimensional unknowns is the design of sampling algorithms whose performance scales favourably with the dimension of the unknown. A typical setting in which these problems arise is the area of Bayesian inverse problems. In such problems, which include graph-based learning, nonparametric regression and PDE-based inversion, the unknown can be viewed as an infinite-dimensional parameter (such as a function) that has been discretised. This results in a high-dimensional space for inference. Here we study robustness of an MCMC algorithm for posterior inference; this refers to MCMC convergence rates that do not deteriorate as the discretisation becomes finer. When a Gaussian prior is employed there is a known methodology for the design of robust MCMC samplers. However, one often requires more flexibility than a Gaussian prior can provide: hierarchical models are used to enable inference of parameters underlying a Gaussian prior; or non-Gaussian priors, such as Besov, are employed to induce sparse MAP estimators; or deep Gaussian priors are used to represent other non-Gaussian phenomena; and piecewise constant functions, which are necessarily non-Gaussian, are required for classification problems. The purpose of this article is to show that the simulation technology available for Gaussian priors can be exported to such non-Gaussian priors. The underlying methodology is based on a white noise representation of the unknown. This is exploited both for robust posterior sampling and for joint inference of the function and parameters involved in the specification of its prior, in which case our framework borrows strength from the well-developed non-centred methodology for Bayesian hierarchical models. The desired robustness of the proposed sampling algorithms is supported by some theory and by extensive numerical evidence from several challenging problems.
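To make the white noise idea concrete, the following is a minimal sketch, not the authors' implementation. It assumes the unknown u is written as u = T(xi) with xi a standard Gaussian white noise vector, and it runs a preconditioned Crank-Nicolson (pCN) sampler on xi, a standard dimension-robust sampler for Gaussian priors. The names `pcn_whitened`, `signed_square_transform`, and `neg_log_lik`, the particular non-Gaussian map, and the toy single-coordinate observation model are all illustrative assumptions and do not come from the paper.

```python
import numpy as np


def pcn_whitened(neg_log_lik, transform, dim, n_steps, beta=0.2, seed=0):
    """pCN sampler on a white noise variable xi ~ N(0, I).

    The unknown is u = transform(xi), so the prior on xi is always
    standard Gaussian, whatever the prior on u looks like.
    """
    rng = np.random.default_rng(seed)
    xi = rng.standard_normal(dim)
    phi = neg_log_lik(transform(xi))
    samples = np.empty((n_steps, dim))
    n_accept = 0
    for k in range(n_steps):
        # The pCN proposal leaves the N(0, I) prior invariant.
        noise = rng.standard_normal(dim)
        xi_prop = np.sqrt(1.0 - beta**2) * xi + beta * noise
        phi_prop = neg_log_lik(transform(xi_prop))
        # The acceptance ratio involves only the likelihood, not the prior.
        if np.log(rng.uniform()) < phi - phi_prop:
            xi, phi = xi_prop, phi_prop
            n_accept += 1
        samples[k] = transform(xi)
    return samples, n_accept / n_steps


def signed_square_transform(xi):
    """Hypothetical non-Gaussian map: heavy-tailed coefficients with decay."""
    decay = 1.0 / np.arange(1, xi.size + 1)
    return decay * xi * np.abs(xi)


def neg_log_lik(u, y=1.0, sigma=0.5):
    """Toy model (assumption): noisy observation of the first coefficient."""
    return 0.5 * ((u[0] - y) / sigma) ** 2


samples, acc_rate = pcn_whitened(
    neg_log_lik, signed_square_transform, dim=50, n_steps=500
)
```

Because the proposal preserves the white noise prior exactly, the accept/reject step depends only on the likelihood. This is the property usually credited for acceptance rates that do not collapse as `dim` grows. In this sketch the step size `beta` is fixed by hand rather than tuned.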
