Robust Federated Learning with Connectivity Failures: A Semi-Decentralized Framework with Collaborative Relaying


Feb 24, 2022

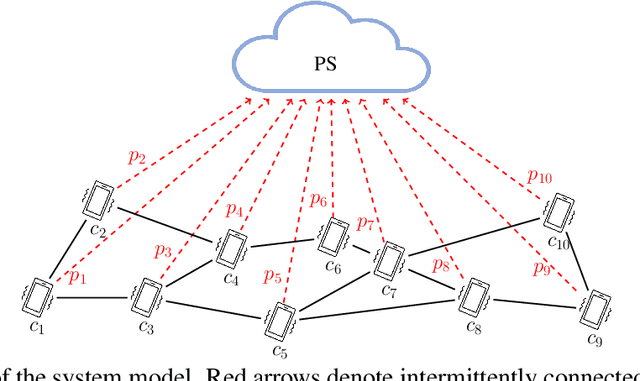

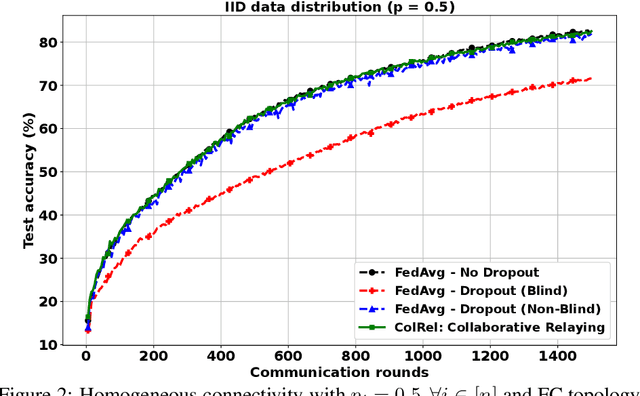

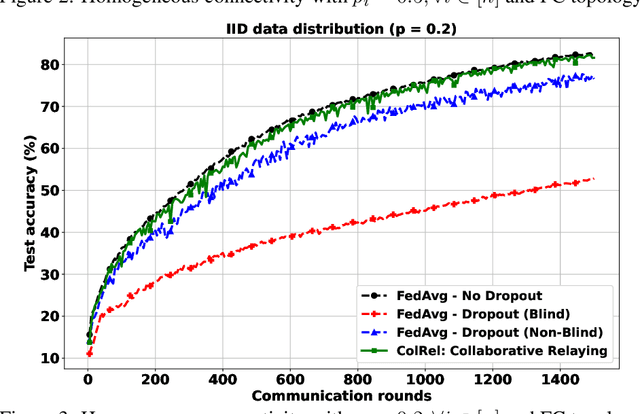

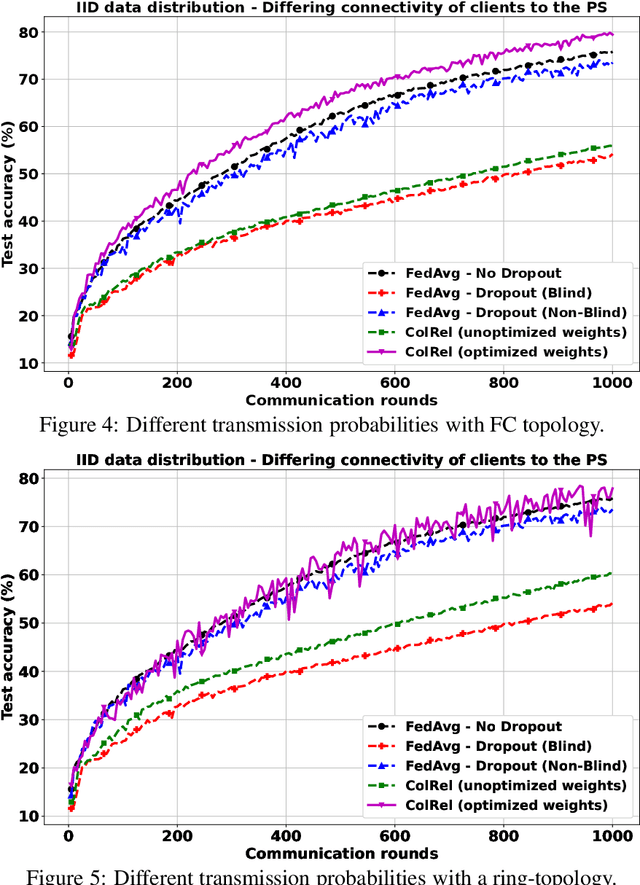

Intermittent client connectivity is one of the major challenges in centralized federated edge learning frameworks. Intermittently failing uplinks to the central parameter server (PS) can induce a large generalization gap, especially when the data distribution among the clients is heterogeneous. In this work, to mitigate communication blockages between clients and the PS, we introduce the concept of knowledge relaying, wherein the successfully participating clients collaborate in relaying their neighbors' local updates to the PS in order to boost the participation of clients with intermittently failing connectivity. We propose a collaborative-relaying-based semi-decentralized federated edge learning framework in which, at every communication round, each client first computes a local consensus of the updates from its neighboring clients and then transmits a weighted average of its own update and those of its neighbors to the PS. We optimize these averaging weights to reduce the variance of the global update at the PS while ensuring that the global update is unbiased, consequently improving the convergence rate. Finally, through experiments on the CIFAR-10 dataset, we validate our theoretical results and demonstrate that the proposed scheme outperforms the federated averaging benchmark, especially when the data distribution among clients is non-IID.
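
The relaying and aggregation step described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation. The function names, the uplink success probabilities `p`, the assumption that links between neighboring clients are reliable, and the assumption that the PS scales the received sum by `1/n` are all hypothetical choices made for illustration. Under those assumptions the global update is unbiased when `sum_i p[i] * alpha[i][j] == 1` for every client `j`. The weights below are only one simple choice that satisfies this condition; they are not the variance-minimizing weights the abstract refers to.

```python
def unbiased_relay_weights(p, neighbors):
    """Return alpha, where alpha[i][j] is the weight client i applies to
    client j's update before transmitting to the PS.

    p[i]         : assumed probability that client i's uplink succeeds
    neighbors[j] : clients that receive client j's update (assumed reliable)

    Every relay of client j (j itself plus its neighbors) gets the same
    weight 1 / (sum of p over those relays), so that
    sum_i p[i] * alpha[i][j] == 1. This is a feasible choice, not an
    optimized one.
    """
    n = len(p)
    alpha = [[0.0] * n for _ in range(n)]
    for j in range(n):
        relays = [j] + [i for i in neighbors[j] if i != j]
        total = sum(p[i] for i in relays)
        if total == 0:
            raise ValueError(f"client {j} has no relay that can reach the PS")
        for i in relays:
            alpha[i][j] = 1.0 / total
    return alpha


def relay_aggregate(updates, alpha, tau):
    """Global update formed at the PS for one communication round.

    updates[j] : local update of client j (list of floats)
    tau[i]     : 1 if client i's uplink succeeded this round, else 0

    Each connected client i sends sum_j alpha[i][j] * updates[j]; the PS
    sums what it receives and divides by the number of clients n.
    """
    n = len(updates)
    dim = len(updates[0])
    global_update = [0.0] * dim
    for i in range(n):
        if tau[i] == 0:
            continue  # client i's transmission was blocked
        for j in range(n):
            w = tau[i] * alpha[i][j]
            if w == 0.0:
                continue
            for k in range(dim):
                global_update[k] += w * updates[j][k] / n
    return global_update
```

Because `relay_aggregate` is linear in `tau`, passing the probabilities `p` in place of a sampled `tau` yields the expected global update, which should match the plain average of all local updates when the weights satisfy the unbiasedness condition.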
