Robust Actor-Critic Contextual Bandit for Mobile Health (mHealth) Interventions

Feb 27, 2018

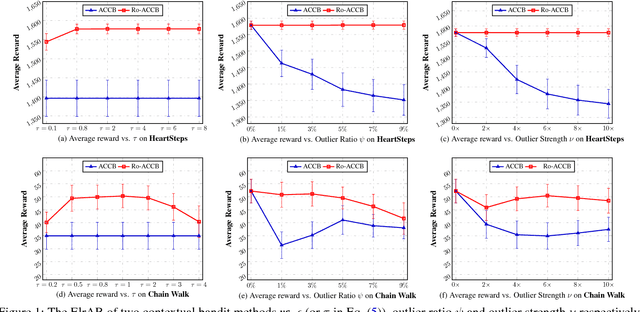

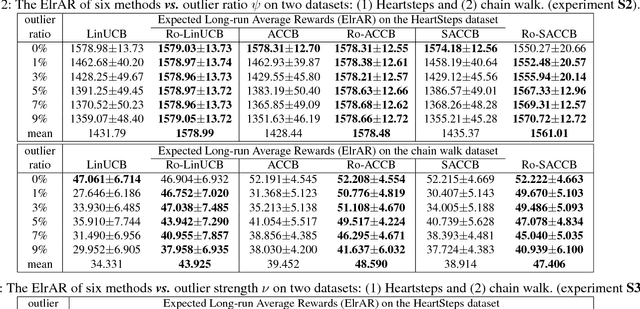

We consider the actor-critic contextual bandit for mobile health (mHealth) interventions. State-of-the-art decision-making algorithms generally ignore outliers in the dataset. In this paper, we propose a novel robust contextual bandit method for mHealth. It achieves two conflicting goals: reducing the influence of outliers, while finding a solution similar to that of state-of-the-art contextual bandit methods on datasets without outliers. This performance relies on two techniques: (1) the capped-$\ell_{2}$ norm; (2) a reliable method for setting the thresholding hyper-parameter, inspired by one of the most fundamental techniques in statistics. Although the model is non-convex and non-differentiable, we propose an effective reweighted algorithm and provide solid theoretical analyses. We prove that the proposed algorithm finds sufficiently decreasing points after each iteration and converges after a finite number of iterations. Extensive experimental results on two datasets demonstrate that our method achieves almost identical results to state-of-the-art contextual bandit methods on the dataset without outliers, and significantly outperforms those methods on the badly noised dataset with outliers across a variety of parameter settings.
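To make the two ingredients named in the abstract more concrete, the following is a minimal sketch of a capped loss and a reweighted solver on a toy linear regression. It is not the paper's actor-critic formulation: the function names `capped_abs` and `reweighted_fit`, the `ridge` and `floor` parameters, and the synthetic data are all assumptions made for illustration. The threshold `eps` is hand-picked here, whereas the abstract describes a statistical method for setting it. For scalar residuals the $\ell_{2}$ norm reduces to the absolute value, so the capped loss is $\min(|r_i|, \epsilon)$.

```python
import numpy as np

def capped_abs(r, eps):
    """Capped loss for scalar residuals: min(|r_i|, eps)."""
    return np.minimum(np.abs(r), eps)

def reweighted_fit(X, y, eps, ridge=1e-3, n_iter=50, floor=1e-6):
    """Illustrative reweighted least squares for sum_i min(|y_i - x_i^T w|, eps).

    Each pass solves a weighted ridge problem. Samples whose residual
    reaches the cap get weight 0, so they stop influencing the fit.
    """
    d = X.shape[1]
    reg = ridge * np.eye(d)
    # Start from an ordinary ridge solution (an assumption of this sketch).
    w = np.linalg.solve(X.T @ X + reg, X.T @ y)
    for _ in range(n_iter):
        r = np.abs(y - X @ w)
        # Weight 1 / (2|r_i|) below the cap, 0 at or above it.
        # `floor` guards against division by zero.
        u = np.where(r < eps, 1.0 / (2.0 * np.maximum(r, floor)), 0.0)
        Xu = X * u[:, None]  # rows of X scaled by their weights
        w = np.linalg.solve(Xu.T @ X + reg, Xu.T @ y)
    return w

# Synthetic data (hypothetical): 200 samples, 10% shifted by a large offset.
rng = np.random.default_rng(0)
n = 200
X = np.column_stack([np.ones(n), rng.normal(size=n)])
w_true = np.array([1.0, 2.0])
y = X @ w_true + 0.1 * rng.normal(size=n)
y[:20] += 50.0  # inject outliers

w_ols = np.linalg.lstsq(X, y, rcond=None)[0]  # non-robust baseline
w_robust = reweighted_fit(X, y, eps=20.0)     # capped, reweighted fit
```

In this toy setting the least-squares baseline is pulled toward the shifted samples, while the reweighted fit assigns them zero weight once their residuals exceed `eps`. Comparing `w_ols` and `w_robust` against `w_true` shows the effect.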
